Dominik Kos, Kristina Skufca

DATA ENGINEERS

Introduction

This is the first part of a multi-part series where we will be discussing the topics surrounding Data Catalogs. In this post we will be covering the theoretical background behind Data Catalogs, and how this software helps businesses define all the data currently used in the company, while also giving valuable insight into the intricacies of their data assets.

A Data Catalog is an organized collection of an organization’s data. A “good” Data Catalog should hold all of that data in one place. Together with metadata, it helps organizations manage their data and improve their current data strategies. It makes collecting, organizing, and accessing metadata a lot easier for data scientists, data engineers, data analysts, and any other employees who work closely with data. As a result, the whole process of data discovery can be improved.

Now, after that brief description above, let’s expand upon the definition with a simple analogy.

Definition and Analogy

Data Catalogs are often compared to book catalogs in libraries. If you were to search for a particular book, the slowest way to go about it would be to visit each library and look through every aisle. That might take a really long time. A more efficient way would be to use the library’s catalog, because it offers information about the book such as availability, number of copies, edition, location, etc.

Now imagine a catalog that covers every branch of a particular library chain, where you, as a user, can find every single library that has a copy of this book, along with all its details, in one interface. Besides you, there are thousands and thousands of other users using the same catalog.

That is exactly what an enterprise Data Catalog does for your data. It gives you a single, overarching view and deeper visibility into all of the data within your organization.

It represents an organized inventory of data assets in the organization, which can, with the use of metadata, provide more information about the data itself. The users (both IT and non-IT) can understand and find the information they need, quickly and efficiently.

Metadata

Metadata is the driving force of the Data Catalog, which in essence enables us to create this vast inventory of data. Simply put, Metadata is data that describes other data. It should answer specific questions that improve the understanding of data across the organization, and it helps to find, use, and manage that data.

A Data Catalog handles three types of metadata:

- TECHNICAL METADATA

  - Provides information on the structure and format of the data that is needed by source (computer) systems.

  - Most important for Data Engineers because it can answer questions like “What will be the impact of a schema change in our CRM application?”.

  - Includes information about schemas, tables, columns, file names, report names, and anything else documented by the source system.

- BUSINESS METADATA

  - Provides the meaning of data.

  - Defines terms in everyday language regardless of technical implementation.

  - Focuses largely on the content and condition of the data.

  - Most important for Business Analysts because it represents the business knowledge that users have about the assets in the organization.

  - Includes information about business descriptions, comments, annotations, classifications, fitness-for-use, rating, and many more.

- OPERATIONAL METADATA

  - Describes details of the processing and accessing of data.

  - Most important for Data Stewards and Chief Data Officers because it can answer questions about managing the lifecycle of the data itself, and the concepts and standards used, e.g., “How many times has a table been accessed by users, and by which users?” and “Are we really improving the quality of our operational data?”.

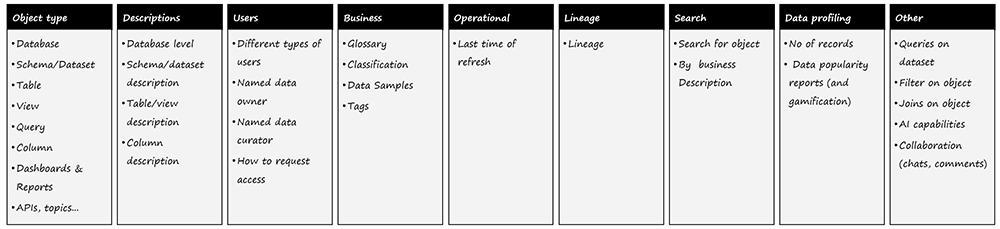

The types of Metadata that we in Syntio think good Data Catalogs should contain can be seen in Figure 1 below.

Figure 1: Types of Expected Metadata
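To make the three metadata types a bit more tangible, here is a minimal Go sketch of how a single catalog record could carry all of them. The type names, the `crm.customers` asset, and its values are hypothetical and only serve as an illustration; real catalogs model this in their own way.

```go
package main

import "fmt"

// MetadataKind distinguishes the three metadata types described above.
type MetadataKind string

const (
	Technical   MetadataKind = "technical"
	Business    MetadataKind = "business"
	Operational MetadataKind = "operational"
)

// Entry is one (hypothetical) piece of metadata attached to a data asset.
type Entry struct {
	Kind  MetadataKind
	Key   string
	Value string
}

// Asset is a (hypothetical) catalog record for a single data asset.
type Asset struct {
	Name    string
	Entries []Entry
}

// ByKind returns only the entries of the requested kind.
func (a Asset) ByKind(kind MetadataKind) []Entry {
	var out []Entry
	for _, e := range a.Entries {
		if e.Kind == kind {
			out = append(out, e)
		}
	}
	return out
}

// exampleAsset builds a made-up asset that carries all three kinds.
func exampleAsset() Asset {
	return Asset{
		Name: "crm.customers",
		Entries: []Entry{
			{Technical, "schema", "crm"},
			{Technical, "columns", "id, name, email"},
			{Business, "description", "People and firms that bought from us"},
			{Business, "classification", "contains personal data"},
			{Operational, "reads_last_30_days", "412"},
		},
	}
}

func main() {
	a := exampleAsset()
	// A Business Analyst would mostly care about the business entries.
	for _, e := range a.ByKind(Business) {
		fmt.Printf("%s: %s = %s\n", a.Name, e.Key, e.Value)
	}
}
```

Keeping the kind on each entry, rather than in three separate structures, lets different audiences filter the same record for the part they care about.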

Role of the Data Catalog in the Data Discovery Process

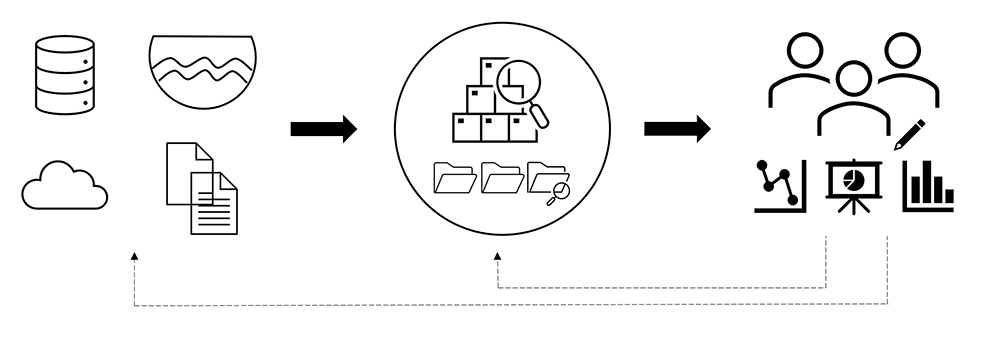

Figure 2: Process of Presenting the End-User with Metadata

To better understand where Data Catalogs come into the Data Discovery process, let us take a look at Figure 2. The initial step in Data Discovery is pulling data from multiple sources. In the next step, the Data Catalog identifies and classifies the metadata about that data. This metadata is passed on to Data Users, who can then access, analyze, and modify it however they wish. Note the arrows at the bottom of Figure 2: they show that, at any moment, users can directly propagate actions towards the Data Sources or the Data Catalog itself.

Features

Evaluating a Data Catalog can involve a long list of features, but we at Syntio decided to put an emphasis on the ones mentioned in Figure 1. These can vary in importance depending on the company’s needs, but here we will cover: Data Discovery (which also includes Connectors), Business Glossary, Data Profiling, Automation, Data Lineage, and Access Management. Each one has a different goal, and all of them are covered in the upcoming paragraphs.

Data Discovery

Data Discovery essentially involves the collection and evaluation of data from various sources and is often used to understand trends and patterns in the data. Usually, Data Discovery requires a progression of steps that organizations use as a framework to understand data.

At its core, it represents the more “technical” part of the Data Catalog. Amongst other things, it encompasses all the other previously mentioned features: Connectors, Data Profiling, Automation, Data Lineage, and Access Management.

Data Discovery can be achieved in two distinct types of processes:

- Manual Data Discovery

  - Manual management of data by a highly technical, human Data Steward.

  - Was used primarily before the current advancements in Machine Learning.

- Smart Data Discovery

  - Provides an automated experience, e.g., automated data preparation, conceptualization, integration, and presentation of hidden patterns and insights through Data Discovery visualization.

  - Currently the more popular option due to the sheer number of advancements in Machine Learning.

Connectors

Connectors represent the process of Data Ingestion. More specifically, Data Ingestion is the process of transporting data from various sources to a destination, which is often a temporary storage medium.

Data Catalogs use the results of this process to store Metadata ingested from a Metadata source, that is, the place where the Metadata comes from. A Metadata source can be anything from a database or a file to cloud data or a data lake.

Most Data Catalogs already ship with connectors to certain popular services from which they can ingest Metadata.
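As a rough illustration of the connector idea, the sketch below defines a small `Connector` interface and an `Ingest` function that pulls Metadata from several sources into one list. Everything here is an assumption made for the example: the interface, the `staticConnector` stand-in (which returns fixed values instead of talking to a real system), and the source names do not correspond to any particular Data Catalog product.

```go
package main

import (
	"fmt"
	"sort"
)

// TableMetadata is the (hypothetical) unit of Metadata a connector returns.
type TableMetadata struct {
	Source  string
	Table   string
	Columns []string
}

// Connector is an assumed abstraction over one Metadata source.
type Connector interface {
	Name() string
	FetchMetadata() ([]TableMetadata, error)
}

// staticConnector stands in for a real connector and returns fixed Metadata.
type staticConnector struct {
	name   string
	tables map[string][]string // table name -> column names
}

func (c staticConnector) Name() string { return c.name }

func (c staticConnector) FetchMetadata() ([]TableMetadata, error) {
	names := make([]string, 0, len(c.tables))
	for t := range c.tables {
		names = append(names, t)
	}
	sort.Strings(names) // map order is random, so sort for stable output
	out := make([]TableMetadata, 0, len(names))
	for _, t := range names {
		out = append(out, TableMetadata{Source: c.name, Table: t, Columns: c.tables[t]})
	}
	return out, nil
}

// Ingest pulls Metadata from every connector, in order, into one slice.
func Ingest(connectors ...Connector) ([]TableMetadata, error) {
	var all []TableMetadata
	for _, c := range connectors {
		md, err := c.FetchMetadata()
		if err != nil {
			return nil, fmt.Errorf("connector %s: %w", c.Name(), err)
		}
		all = append(all, md...)
	}
	return all, nil
}

func main() {
	crm := staticConnector{name: "crm-db", tables: map[string][]string{
		"customers": {"id", "name", "email"},
		"orders":    {"id", "customer_id", "total"},
	}}
	lake := staticConnector{name: "data-lake", tables: map[string][]string{
		"clickstream": {"session_id", "url", "ts"},
	}}
	all, err := Ingest(crm, lake)
	if err != nil {
		fmt.Println("ingest failed:", err)
		return
	}
	for _, t := range all {
		fmt.Printf("%s.%s %v\n", t.Source, t.Table, t.Columns)
	}
}
```

Hiding each source behind the same interface is what lets a catalog add support for a new service without touching the ingestion logic.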

Business Glossary

A Business Glossary is a list of business terms and their unique definitions that organizations use to ensure the same definitions are used company-wide when analyzing data. For example, the term “customer” can represent a certain individual to one team and an entire firm to another team within the same company.

It produces a common business vocabulary, used by everyone in an organization, and that unified, common language is a key component of Data Governance. It also helps prevent incorrect information in company reports and erroneous analytics, which means that businesses can trust and rely on their data again.

For example, the terms “customer” and “client” are often used interchangeably, but that is not the case in all organizations. Both refer to an individual making a purchase, but for some organizations a “client” is someone who seeks professional services from a business, whereas a “customer” buys goods or services from a business. With that in mind, it is easy to see that a shared language within a company enables consistent and better communication for everyone involved.
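The “one term, one definition” rule can be sketched in a few lines of Go. This is only an illustration under our own assumptions: the `Glossary` type and its methods are hypothetical, and a real Business Glossary would also track owners, approval status, and links to data assets.

```go
package main

import (
	"fmt"
	"strings"
)

// Glossary is a hypothetical in-memory Business Glossary.
type Glossary struct {
	terms map[string]string // normalized term -> definition
}

func NewGlossary() *Glossary {
	return &Glossary{terms: make(map[string]string)}
}

// normalize makes lookups case- and whitespace-insensitive.
func normalize(term string) string {
	return strings.ToLower(strings.TrimSpace(term))
}

// Define registers a term. It returns an error if the term already has a
// different definition, so two teams cannot silently disagree.
func (g *Glossary) Define(term, definition string) error {
	key := normalize(term)
	if existing, ok := g.terms[key]; ok && existing != definition {
		return fmt.Errorf("term %q is already defined as %q", key, existing)
	}
	g.terms[key] = definition
	return nil
}

// Lookup returns the company-wide definition of a term, if there is one.
func (g *Glossary) Lookup(term string) (string, bool) {
	def, ok := g.terms[normalize(term)]
	return def, ok
}

func main() {
	g := NewGlossary()
	_ = g.Define("customer", "buys goods or services from the business")
	_ = g.Define("client", "seeks professional services from the business")

	// A second team tries to redefine "Customer" as an entire firm.
	if err := g.Define("Customer", "a firm we have a contract with"); err != nil {
		fmt.Println("conflict:", err)
	}
}
```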

Data Profiling

Data Profiling is the process of examining and analyzing the data available, in order to collect statistics or informative summaries about that data, e.g., the mean value of a certain column, frequency of appearance for some entries, key relationships between datasets, etc.

It helps us better understand the structure, content, and interrelationships of a dataset, whilst simultaneously identifying potential for future projects regarding that data. More specifically, it sifts through data to determine its legitimacy and quality. For example: was the data collected recently, are the entered values unique, are there any unexpected values (infinities, zeros, negatives), does the range of the resulting data make sense, etc.

There are three main types of Data Profiling:

- Structure discovery – how well the data is structured (e.g., percentage of phone numbers that do not have the correct number of digits).

- Content discovery – which specific inputs contain problems, and which issues are systemic (e.g., phone numbers with no area code).

- Relationship discovery – how certain parts of the data are interrelated with one another (e.g., key relationships between database tables).
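A small Go sketch can show what such profiling statistics look like in practice. The assumptions are ours: the column is numeric and expected to be strictly positive (so zeros, negatives, NaN, and infinities count as unexpected), and a phone number is considered well-formed if it has a given number of digits. The function names are hypothetical.

```go
package main

import (
	"fmt"
	"math"
)

// Profile holds simple summary statistics for one numeric column.
type Profile struct {
	Count      int     // number of valid values
	Mean       float64 // mean of the valid values
	Min, Max   float64 // range of the valid values
	Distinct   int     // number of distinct valid values
	Unexpected int     // NaN, infinite, zero, or negative values
}

// ProfileColumn profiles a column that is assumed to be strictly positive.
func ProfileColumn(values []float64) Profile {
	var p Profile
	var sum float64
	seen := make(map[float64]struct{})
	for _, v := range values {
		if math.IsNaN(v) || math.IsInf(v, 0) || v <= 0 {
			p.Unexpected++
			continue
		}
		if p.Count == 0 || v < p.Min {
			p.Min = v
		}
		if p.Count == 0 || v > p.Max {
			p.Max = v
		}
		p.Count++
		sum += v
		seen[v] = struct{}{}
	}
	p.Distinct = len(seen)
	if p.Count > 0 {
		p.Mean = sum / float64(p.Count)
	}
	return p
}

// InvalidPhoneShare is a structure-discovery check: the share of phone
// numbers that do not contain exactly `digits` digits.
func InvalidPhoneShare(phones []string, digits int) float64 {
	if len(phones) == 0 {
		return 0
	}
	invalid := 0
	for _, phone := range phones {
		n := 0
		for _, r := range phone {
			if r >= '0' && r <= '9' {
				n++
			}
		}
		if n != digits {
			invalid++
		}
	}
	return float64(invalid) / float64(len(phones))
}

func main() {
	prices := []float64{10, 20, 20, 30, -5, 0}
	fmt.Printf("%+v\n", ProfileColumn(prices))

	phones := []string{"555-123-4567", "123-4567", "(555) 987 6543", "12"}
	fmt.Println("share of malformed phone numbers:", InvalidPhoneShare(phones, 10))
}
```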

Automation

Generally speaking, automation is the use of technology to accomplish a task with as little human interaction as possible. In computing, automation is accomplished by a program, a script, or by batch processing multiple jobs.

In the case of our Data Catalog, it entails automatically gathering Metadata from across the entire BI landscape, and integrating it into a coherent, unified picture that can be used by both Business and IT departments.

It is of key importance for any successful Data Catalog because Metadata can change at any moment, as Data Assets are frequently added, removed, and updated. The ideal setup would be a Data Catalog platform that runs on a schedule and periodically rechecks the Metadata of all the Data Assets.
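The periodic recheck can be sketched as a scheduler loop plus a function that compares two Metadata snapshots. This is a simplified illustration, not how any specific catalog works: we assume each asset is summarized by a fingerprint string (for example a schema version), and the names `Diff` and `RunScheduled` are hypothetical.

```go
package main

import (
	"context"
	"fmt"
	"sort"
	"time"
)

// Diff compares two snapshots (asset name -> fingerprint) and reports
// which assets were added, removed, or updated, each sorted by name.
func Diff(old, cur map[string]string) (added, removed, updated []string) {
	for name, fp := range cur {
		prev, ok := old[name]
		switch {
		case !ok:
			added = append(added, name)
		case prev != fp:
			updated = append(updated, name)
		}
	}
	for name := range old {
		if _, ok := cur[name]; !ok {
			removed = append(removed, name)
		}
	}
	sort.Strings(added)
	sort.Strings(removed)
	sort.Strings(updated)
	return added, removed, updated
}

// RunScheduled calls scan once per interval until ctx is cancelled.
func RunScheduled(ctx context.Context, interval time.Duration, scan func()) {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		select {
		case <-ctx.Done():
			return
		case <-ticker.C:
			scan()
		}
	}
}

func main() {
	catalog := map[string]string{"orders": "v1", "customers": "v1"}
	source := map[string]string{"orders": "v2", "payments": "v1"}

	// Short interval and timeout so the example finishes quickly;
	// a real schedule would more likely be hourly or daily.
	ctx, cancel := context.WithTimeout(context.Background(), 35*time.Millisecond)
	defer cancel()

	RunScheduled(ctx, 10*time.Millisecond, func() {
		added, removed, updated := Diff(catalog, source)
		fmt.Println("added:", added, "removed:", removed, "updated:", updated)
		catalog = source // the catalog is now in sync with the source
	})
}
```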

Data Lineage

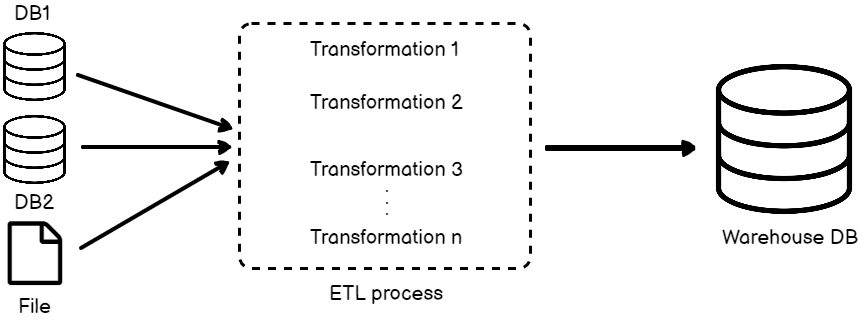

Data Lineage includes the data origin, what happens to it and where it moves over time. Data Lineage gives visibility while greatly simplifying the ability to trace errors back to the root cause in a Data Analytics process. In the case of Data Catalog, it represents a map of the Metadata journey, from the source, through all processes and changes, to storage or consumption.

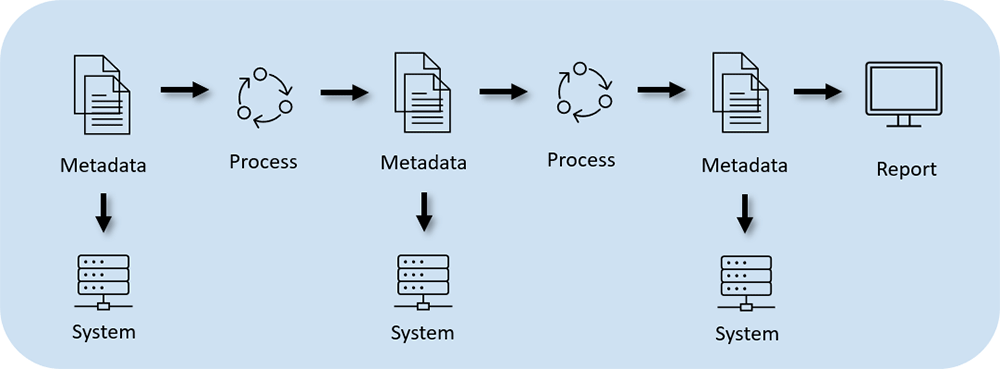

It provides the end user with a stepwise record of how Metadata arrived at its current form. It can be represented visually, usually using graphs. In Figure 3, we can see the diagram of how Data Lineage is created.

Figure 3: Process of creating data lineage

What it represents is essentially the fact that Metadata gets collected every time something changes in the data. So, in order to be able to present someone with the lineage of the data, those changes made have to be documented each time. From these documented changes one can gather all the information and create a graph like the one below on Figure 4, and present someone with the lineage of that data.

Figure 4: Data Lineage made with dummy data
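The idea of documenting every change and then building a graph from those records can be sketched as follows. The `Lineage` type, its methods, and the asset names are hypothetical dummy data, chosen only to show how recorded changes turn into a traversable graph.

```go
package main

import (
	"fmt"
	"sort"
)

// Change documents one step in the journey of the data.
type Change struct {
	From, To  string
	Operation string
}

// Lineage stores documented changes and the graph derived from them.
type Lineage struct {
	changes []Change
	parents map[string][]string // asset -> assets it was derived from
}

func NewLineage() *Lineage {
	return &Lineage{parents: make(map[string][]string)}
}

// Record documents that `to` was produced from `from` by `operation`.
func (l *Lineage) Record(from, to, operation string) {
	l.changes = append(l.changes, Change{From: from, To: to, Operation: operation})
	l.parents[to] = append(l.parents[to], from)
}

// Upstream returns every asset that `asset` depends on, directly or
// indirectly, sorted by name. This is what you would walk when tracing
// an error back to its root cause.
func (l *Lineage) Upstream(asset string) []string {
	seen := make(map[string]bool)
	stack := []string{asset}
	for len(stack) > 0 {
		cur := stack[len(stack)-1]
		stack = stack[:len(stack)-1]
		for _, p := range l.parents[cur] {
			if !seen[p] {
				seen[p] = true
				stack = append(stack, p)
			}
		}
	}
	out := make([]string, 0, len(seen))
	for name := range seen {
		out = append(out, name)
	}
	sort.Strings(out)
	return out
}

func main() {
	l := NewLineage()
	l.Record("raw_orders", "clean_orders", "deduplicate")
	l.Record("clean_orders", "sales_report", "aggregate by month")
	l.Record("customers", "sales_report", "join on customer_id")

	fmt.Println(l.Upstream("sales_report"))
}
```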

Access Management

Identity and Access Management (IAM) is a very important part of any Data Catalog. The actions that users, systems, and services can take within the catalog must be controlled. IAM is about ensuring that entities, such as users, admins, and the system, can perform only the tasks that they are allowed to perform.

Roles represent a set of permissions permanently granted to a user or a group of users. They play a key role in IAM because only users with a certain role should be able to perform particular actions in the Data Catalog. For example, suppose a user of your internal catalog adds a description to a particular data asset. You would want to make sure that they are permitted to do so, which means that they are familiar with the data and are allowed to change aspects of it appropriately. Otherwise, the integrity of that data might be compromised.

These roles can be numerous but the most important ones to remember would be:

- Data Owner

  - Solely responsible for all the data of the business.

  - Implies control over all of the information: the individual can access, create, and modify the data, package it as requested, derive anything from it, and delete or sell it.

  - This also includes the unique ability to grant these privileges to others.

- Data Steward (Data Champion)

  - Handles data on two different levels: an abstract one, focused on data concepts, and an implementation one, focused on the data elements themselves.

  - Implies the responsibility of looking over data assets and managing problems that might arise while they are handled.

- Data Curator

  - Handles the process of collecting, creating, and managing datasets, such as tables, files, etc.

  - Implies the responsibility of connecting data assets with one another so that they can be presented to an end-user, while ensuring that all the value of the data is extracted and easily accessible.
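A role check like the description example above could look roughly like this in Go. The mapping of roles to actions below is our own simplified assumption for the sake of illustration, not a standard; in a real catalog these permissions are configured per organization.

```go
package main

import (
	"errors"
	"fmt"
)

type Role string
type Action string

const (
	DataOwner   Role = "data-owner"
	DataSteward Role = "data-steward"
	DataCurator Role = "data-curator"
)

const (
	Read            Action = "read"
	EditDescription Action = "edit-description"
	CreateDataset   Action = "create-dataset"
	DeleteData      Action = "delete-data"
	GrantAccess     Action = "grant-access"
)

// ErrForbidden is returned when a role lacks the needed permission.
var ErrForbidden = errors.New("action not permitted for this role")

// permissions is an illustrative (assumed) role-to-action mapping.
var permissions = map[Role]map[Action]bool{
	DataOwner:   {Read: true, EditDescription: true, CreateDataset: true, DeleteData: true, GrantAccess: true},
	DataSteward: {Read: true, EditDescription: true},
	DataCurator: {Read: true, CreateDataset: true},
}

// Can reports whether a role may perform an action. Unknown roles and
// actions are denied by default.
func Can(role Role, action Action) bool {
	return permissions[role][action]
}

// Asset is a hypothetical catalog entry.
type Asset struct {
	Name        string
	Description string
}

// UpdateDescription changes the description only if the role allows it.
func UpdateDescription(role Role, asset *Asset, text string) error {
	if !Can(role, EditDescription) {
		return fmt.Errorf("%s on %s: %w", EditDescription, asset.Name, ErrForbidden)
	}
	asset.Description = text
	return nil
}

func main() {
	asset := &Asset{Name: "crm.customers"}
	if err := UpdateDescription(DataCurator, asset, "All customers"); err != nil {
		fmt.Println("curator:", err)
	}
	if err := UpdateDescription(DataSteward, asset, "All customers"); err == nil {
		fmt.Println("steward updated description to:", asset.Description)
	}
}
```

Denying anything that is not explicitly listed keeps the default safe when a new role or action is introduced.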

Conclusion

By now, many of you might be thinking “This all sounds basically the same”, or maybe “I knew these terms before, why the complicated explanations?”. To that, we would just like to point out that even though a lot of this information regarding Data Catalogs can easily be found online and is pretty simple to understand, it is important to keep it all in one place and be able to tell what each part of the Data Catalog represents within the whole picture. Everybody has their own definition of what a Data Catalog should be, so it is important to take all of these into account and find the links between them.

Through that, one can create and use Data Catalogs to their fullest potential. This creates a ripple effect within the company, where the whole process of governing the use of data becomes much more efficient and a whole lot easier as well.

This post also serves as excellent context for the following blog post, where existing Data Catalogs tested by the team will be discussed in more detail.

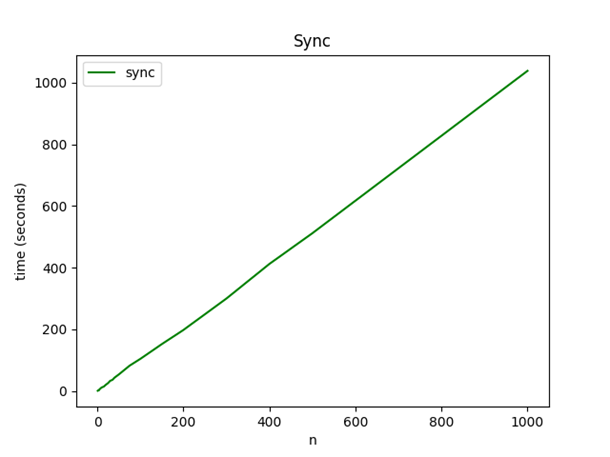

As expected, execution time increases almost perfectly linearly with the number of requests.

We can imagine how poorly this would scale with any real world application and how poorly the hardware is being utilized.

Approach #2

The alternative approach uses concurrency, and what is called a pipeline pattern. I’ve extracted the channel creation logic into other functions, since the actual code is not important right now.

```go
// Note: errors.Wrap is not part of the standard library; it presumably
// comes from the github.com/pkg/errors package.
func DoAsync(n int, source, destination string) error {
	// returns a data channel, and an error channel
	inputs, pullErrC := collect(n, source)

	// takes the input channel, returns a channel of hashes
	hashes := computeHashes(inputs)

	// takes the hash channel, sends the hashes to their destination, returns an error channel
	pushErrC := push(hashes, destination)

	// combines the two error channels into one (fan-in)
	mergedErrC := merge(pullErrC, pushErrC)

	// collects all errors, returns a value through a channel, once all errors are collected and the channel is closed
	errCollector := collectErrors(mergedErrC)

	if err := <-errCollector; err != nil {
		return errors.Wrap(err, "fetching process completed with errors")
	}
	return nil
}
```

First, we call collect, which doesn’t block and immediately returns a data channel, which will eventually be filled with messages, and an error channel which will contain all the errors that might occur.

Each message will be fetched in its own goroutine, not waiting for others to send their request.

Then, we pass the data channel to the next function, which computes the hashes and returns a channel which will eventually contain them. The final stage takes the hash channel and sends the hashes to the destination. Again, each hash will be sent in its own goroutine.

At this stage, only the error channel is returned for any error that might happen during the sending process.

At the end, the two error channels are merged into a single channel. These errors are then collected through a “collector” channel. The main goroutine then waits for that channel to return something: nil if everything worked as expected.

One thing to note is that most of the code here is non-blocking and new “stages” of the pipeline can be added, without ever having to look at the rest of the code.
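Since the helper functions were left out, here is a self-contained sketch of what such a pipeline could look like. It is not the code behind the measurements above: the messages are generated locally instead of being fetched from a source, `failEvery` simulates failing requests, the hashes are pushed sequentially to a `sink` callback rather than each in its own goroutine, and errors are counted directly instead of going through a collector channel. All of these are simplifying assumptions.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"sync"
)

// collect "fetches" n messages, each in its own goroutine. Every
// failEvery-th message fails (0 disables failures).
func collect(n, failEvery int) (<-chan string, <-chan error) {
	out := make(chan string)
	errC := make(chan error)
	var wg sync.WaitGroup
	for i := 1; i <= n; i++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			if failEvery > 0 && id%failEvery == 0 {
				errC <- fmt.Errorf("fetching message %d failed", id)
				return
			}
			out <- fmt.Sprintf("message-%d", id)
		}(i)
	}
	go func() { // close both channels once every fetch is done
		wg.Wait()
		close(out)
		close(errC)
	}()
	return out, errC
}

// computeHashes turns each incoming message into a hex SHA-256 hash.
func computeHashes(in <-chan string) <-chan string {
	out := make(chan string)
	go func() {
		defer close(out)
		for msg := range in {
			sum := sha256.Sum256([]byte(msg))
			out <- hex.EncodeToString(sum[:])
		}
	}()
	return out
}

// push hands every hash to sink and reports sink errors on a channel.
func push(hashes <-chan string, sink func(string) error) <-chan error {
	errC := make(chan error)
	go func() {
		defer close(errC)
		for h := range hashes {
			if err := sink(h); err != nil {
				errC <- err
			}
		}
	}()
	return errC
}

// merge fans several error channels into one.
func merge(cs ...<-chan error) <-chan error {
	out := make(chan error)
	var wg sync.WaitGroup
	for _, c := range cs {
		wg.Add(1)
		go func(c <-chan error) {
			defer wg.Done()
			for err := range c {
				out <- err
			}
		}(c)
	}
	go func() {
		wg.Wait()
		close(out)
	}()
	return out
}

// Run wires the stages together and waits until all of them finish.
func Run(n, failEvery int, sink func(string) error) error {
	msgs, pullErrC := collect(n, failEvery)
	pushErrC := push(computeHashes(msgs), sink)
	var errs []error
	for err := range merge(pullErrC, pushErrC) {
		errs = append(errs, err)
	}
	if len(errs) > 0 {
		return fmt.Errorf("pipeline finished with %d error(s), first: %w", len(errs), errs[0])
	}
	return nil
}

func main() {
	sent := 0
	err := Run(10, 5, func(hash string) error {
		sent++ // only the push goroutine calls sink, so this is safe
		return nil
	})
	fmt.Println("hashes sent:", sent, "error:", err)
}
```

Each stage owns the channel it returns and closes it when done, which is what allows a new stage to be slotted in between two existing ones without changing them.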

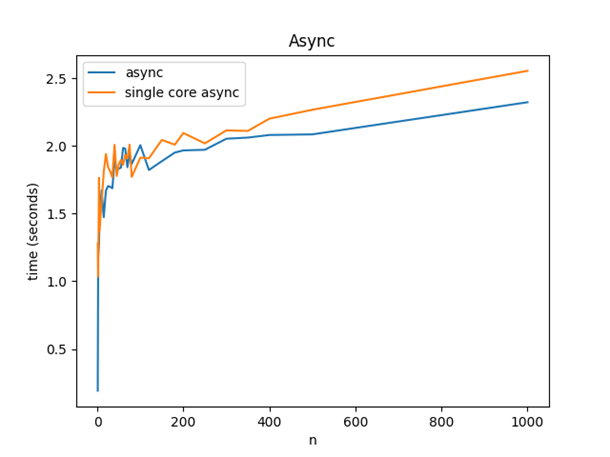

Like the first implementation, this code was run with a different number of messages, so we can compare them.

As we can see, the execution time of processing 10 messages is about the same as the cost of processing hundreds of messages. I’ve also run this code while forcing the Go runtime to use only one operating system thread, to demonstrate that the number of cores is not important for this to work.

Keep in mind that the previous implementation needed almost 17 minutes for 1000 messages, while this one needs 2 seconds.

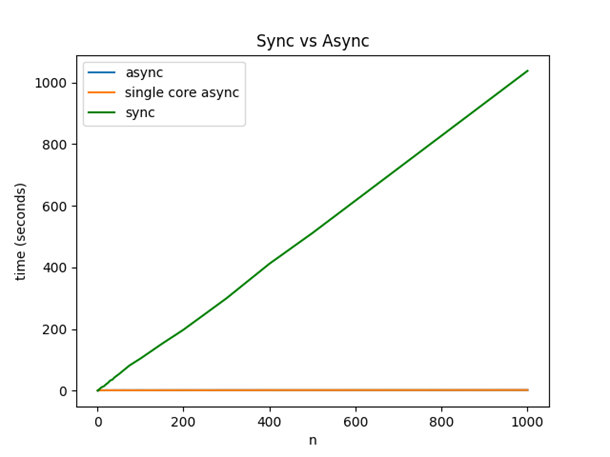

This might not be obvious from this graph, so I made another one to put them at the same scale.

As we can see, the async, concurrent implementation takes almost no time at all in comparison with the synchronous, sequential one.

Things that weren’t mentioned

There are a couple of things that weren’t mentioned here, but are worth pointing out.

Real world applications are probably more complex than the dummy example shown here, so here are some common problems a real application might need to deal with.

Sometimes we want to terminate the entire program if one goroutine encounters a non-recoverable error.

The Go standard library offers an elegant solution through the context package, which can be cleanly integrated with the standard pipeline pattern shown today.
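As a minimal sketch of that idea, the example below cancels a producer stage as soon as the consumer hits an error it treats as non-recoverable. The function names and the “bad value” condition are made up for the illustration; only the `context` calls are standard library.

```go
package main

import (
	"context"
	"fmt"
)

// generate emits 1, 2, 3, ... until the context is cancelled.
func generate(ctx context.Context) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for i := 1; ; i++ {
			select {
			case <-ctx.Done():
				return // stop producing once someone cancelled
			case out <- i:
			}
		}
	}()
	return out
}

// ConsumeUntil processes values until it meets badValue, which it treats
// as a non-recoverable error. badValue must be positive, otherwise the
// loop never ends.
func ConsumeUntil(badValue int) (processed int, err error) {
	ctx, cancel := context.WithCancel(context.Background())
	defer cancel() // always release the producer goroutine

	for v := range generate(ctx) {
		if v == badValue {
			cancel() // tell every stage sharing ctx to stop
			return processed, fmt.Errorf("non-recoverable error at value %d", v)
		}
		processed++
	}
	return processed, nil
}

func main() {
	processed, err := ConsumeUntil(5)
	fmt.Println("processed:", processed, "error:", err)
}
```

Because every stage selects on `ctx.Done()`, a single `cancel()` call is enough to unwind the whole pipeline without leaking goroutines.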

Sometimes we want to limit how many goroutines can be active, and we can easily do that through simple mechanisms of bounded concurrency. It’s common that external dependencies can’t handle the load we can generate in our concurrent programs, and that can also be elegantly fixed through throttling mechanisms, which have great library support.
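One simple way to bound concurrency, sketched here with only the standard library, is to use a buffered channel as a semaphore. The function name and the bookkeeping of `maxActive` (included just to show that the limit holds) are our own additions for the example.

```go
package main

import (
	"fmt"
	"sync"
)

// ProcessBounded applies work to every item, running at most `limit`
// goroutines at the same time. It also reports the highest number of
// goroutines that were active simultaneously.
func ProcessBounded(items []int, limit int, work func(int) int) (results []int, maxActive int) {
	if limit < 1 {
		limit = 1 // an unbuffered semaphore would block forever
	}
	sem := make(chan struct{}, limit)
	results = make([]int, len(items))

	var wg sync.WaitGroup
	var mu sync.Mutex
	active := 0

	for i, item := range items {
		wg.Add(1)
		sem <- struct{}{} // blocks while `limit` goroutines are running
		go func(i, item int) {
			defer wg.Done()
			defer func() { <-sem }() // free the slot when done

			mu.Lock()
			active++
			if active > maxActive {
				maxActive = active
			}
			mu.Unlock()

			results[i] = work(item) // each goroutine writes its own index

			mu.Lock()
			active--
			mu.Unlock()
		}(i, item)
	}
	wg.Wait()
	return results, maxActive
}

func main() {
	square := func(x int) int { return x * x }
	results, maxActive := ProcessBounded([]int{1, 2, 3, 4, 5, 6}, 2, square)
	fmt.Println("results:", results)
	fmt.Println("never more than 2 goroutines at once:", maxActive <= 2)
}
```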

Sometimes channels aren’t enough and we need to use lower-level primitives, which is fine, as they are also built into the language, but you need to be careful with them because readability and safety can suffer.

Goroutines aren’t magic; if you have to do a lot of CPU-heavy computations, there can never be a faster way than running the same number of threads as CPU cores.

Conclusion

In conclusion, we can see that the entire language is built around concurrency. We learned that goroutines are lightweight threads which abstract a lot of complexity, and that not using them for “embarrassingly parallel” tasks is not an option.

We also learned that Go allows us to write clean, idiomatic code which is resistant to multithreading bugs (if we stick to common concurrency patterns), which, combined with static typing and simple syntax, makes Go the perfect candidate for highly concurrent network-based software.

Next blog posts from Data Catalog series:

Testing Open Source Data Catalogs and Testing Open Source Data Catalogs – part 2