Robert Dakovic, Luka Abramusic

DATA ENGINEERS

In the world of cloud-centric applications, Infrastructure as Code (IaC) has evolved from a convenience to an absolute necessity.

Dataphos is a perfect example. It is a suite of microservices addressing common challenges in Data Engineering. Each component is autonomously crafted to meet specific architectural needs. Together, they form a cohesive ingestion platform, facilitating the transition of data from on-premise systems to the cloud and beyond.

At Syntio, Terraform has been a tested and proven tool not only for the Dataphos infrastructure but also for our company-wide infrastructure. However, recently, Pulumi has captured our attention. In this post, I’ll explain why Pulumi fits better into our long-term vision of how the Dataphos infrastructure should be provisioned.

Pulumi is an open-source Infrastructure as Code (IaC) tool that operates on a state-driven model similar to Terraform. Users articulate the desired infrastructure state, and then the tool takes charge of realizing that state on the cloud.
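To make the state-driven model concrete, the sketch below compares a desired state with the current state and derives the actions needed to reconcile them. It is a toy illustration in plain Python, not the actual engine of Pulumi or Terraform; the `plan` function and the resource names are assumptions made up for this example.

```python
def plan(desired: dict, current: dict) -> list[tuple[str, str]]:
    """Return (action, resource_name) pairs that turn `current` into `desired`."""
    actions = []
    for name, spec in desired.items():
        if name not in current:
            actions.append(("create", name))
        elif current[name] != spec:
            actions.append(("update", name))
    for name in current:
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical resources, for illustration only.
desired = {"input-topic": {"partitions": 3}, "dead-letter-topic": {"partitions": 1}}
current = {"input-topic": {"partitions": 1}, "legacy-topic": {"partitions": 1}}

steps = plan(desired, current)
for action, name in steps:
    print(f"{action}: {name}")
```

The user only edits `desired`; working out which resources to create, update, or delete is left to the tool.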

Unlike traditional IaC tools, Pulumi lets users define infrastructure in familiar programming languages such as TypeScript, JavaScript, Python, Go, C#, and Java, so there is no additional configuration language to learn.

These aforementioned qualities enabled three very important things:

In the upcoming section, I’ll delve into the key features that prompted our transition from provisioning infrastructure with Terraform to adopting Pulumi.

The Big Challenge: Writing Terraform Code for Multiple Environments

The following directory tree shows what our product infrastructure repositories used to look like:

```
├── modules_aks/
├── modules_gke/
└── providers/
    ├── aks/
    │   └── common/
    │       ├── main.tf
    │       ├── terraform.tfvars
    │       └── variables.tf
    └── gke/
        └── common/
            ├── main.tf
            ├── terraform.tfvars
            └── variables.tf
```

Every product had its own reusable module scripts and a folder with environment-specific scripts: providers/aks, providers/gke, etc. Each environment may have its own set of resources and configurations. A common practice for creating a new environment was:

- Cherry-pick these scripts

- Copy them into the current working directory

- Adapt some variables to the requirements of development teams or clients

- Deploy

This method was simple, provided isolation of the state and its files, and used modules to encapsulate resources. Yet for us, the drawbacks outweighed the benefits:

- keeping distinct state files and backends for each environment and product took a significant amount of extra work

- copying scripts between environments resulted in a substantial amount of redundant code

Our First Attempt to Conquer the Challenge

We were aware that in order to provide a layer of separation between the essential features of the products and the particulars of each cloud provider, we would need to implement some form of abstraction. Here’s an illustration of how we attempted to provide users the option to select from a variety of message brokers within a single cloud provider script:

```hcl
locals {
  counts = {
    pubsub = (var.broker_type == "pubsub" ? 1 : 0)
    kafka  = (var.broker_type == "kafka" ? 1 : 0)
  }
}

# Pub/Sub Topics
module "input_topic_pubsub" {
  source       = "./modules/pubsub_topic"
  count        = local.counts.pubsub
  create_topic = var.create_input_topic
  topic_name   = var.input_topic_name
}

# Kafka Topics
module "input_topic_kafka" {
  source       = "./modules/kafka_topic"
  count        = local.counts.kafka
  create_topic = var.create_input_topic
  topic_name   = var.input_topic_name
}
```

When we attempted to have many instances of a product component, each with a different broker type, the situation became much more convoluted. We reorganized all of the infrastructure repositories and added fixes like these to the scripts, but the code became even more clumsy and unreadable, and we soon concluded Terraform, with its strict HashiCorp Configuration Language (HCL) syntax, is just not suited for this kind of approach.

This is the moment when we started searching for a more flexible Terraform alternative. We found Pulumi.

Meeting the Challenge with Pulumi

We decided to use Python as our primary language in Pulumi. Compared to HCL, Python offers a far higher degree of abstraction, and we made sure to utilize all of its capabilities:

```
└── infrastructure/
    └── broker/
        ├── kafka/
        │   ├── kafka_config.py
        │   └── KafkaMessageBroker.py
        ├── pubsub/
        ├── service_bus/
        ├── AbstractMessageBroker.py
        └── MessageBroker.py
```

We introduced an AbstractMessageBroker interface, a MessageBroker class, and concrete broker implementations, such as KafkaMessageBroker, to address the broker type issue previously discussed. The MessageBroker class constructor resolves the problem by conditionally instantiating the broker implementation that matches the type given in broker_config:

```python
from pulumi import ComponentResource, ResourceOptions

# Platform, AbstractMessageBroker, and the concrete broker classes are
# project-internal; their imports are omitted here.


class MessageBroker(ComponentResource, AbstractMessageBroker):
    def __init__(self, broker_id: str, broker_config: dict, platform: Platform) -> None:
        resource_type = "marlin:infrastructure:MessageBroker"
        resource_name = f"{broker_id}-broker"
        # The workspace returned by the platform becomes the parent of this component.
        workspace = platform.get_workspace(broker_config)
        opts = ResourceOptions(parent=workspace)
        super().__init__(resource_type, resource_name, None, opts)

        # Instantiate the concrete broker that matches the configured type.
        broker_type = broker_config["type"]
        if broker_type == "servicebus":
            self._broker_instance = ServiceBusMessageBroker(broker_id, broker_config, resource_group=workspace, parent=self)
        elif broker_type == "pubsub":
            self._broker_instance = PubSubMessageBroker(broker_id, broker_config, project=workspace, parent=self)
        elif broker_type == "kafka":
            self._broker_instance = KafkaMessageBroker(broker_id, broker_config, kubernetes_provider=workspace, parent=self)
```

Because of this, the client can transition between various broker implementations according to the environment configuration that is supplied. Conveniently, this enables the client to concentrate solely on a single Pulumi configuration file.
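The same selection can also be written as a lookup table, which keeps the type-to-class mapping in one place and makes an unsupported type fail loudly. The following is a minimal sketch in plain Python without the Pulumi SDK; the stub classes, `BROKER_TYPES`, and `create_broker` are hypothetical names used for illustration and are not part of the Dataphos code base.

```python
from abc import ABC, abstractmethod


class AbstractBroker(ABC):
    """Stand-in for the broker interface; real classes would create cloud resources."""

    def __init__(self, broker_id: str, config: dict) -> None:
        self.broker_id = broker_id
        self.config = config

    @abstractmethod
    def describe(self) -> str:
        ...


class PubSubBroker(AbstractBroker):
    def describe(self) -> str:
        return f"{self.broker_id}: Pub/Sub"


class KafkaBroker(AbstractBroker):
    def describe(self) -> str:
        return f"{self.broker_id}: Kafka"


# Maps the "type" field of a broker configuration to its implementation.
BROKER_TYPES = {"pubsub": PubSubBroker, "kafka": KafkaBroker}


def create_broker(broker_id: str, config: dict) -> AbstractBroker:
    """Instantiate the implementation named by config["type"]."""
    broker_type = config.get("type")
    if broker_type not in BROKER_TYPES:
        raise ValueError(f"Unsupported broker type: {broker_type!r}")
    return BROKER_TYPES[broker_type](broker_id, config)


# Several component instances, each with its own broker type (hypothetical values).
brokers_config = {
    "ingest": {"type": "pubsub"},
    "archive": {"type": "kafka"},
}
brokers = [create_broker(bid, cfg) for bid, cfg in brokers_config.items()]
for broker in brokers:
    print(broker.describe())
```

Having many instances of a component, each with a different broker type, then reduces to iterating over a dictionary of configurations rather than toggling module counts.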

Single Source of Truth

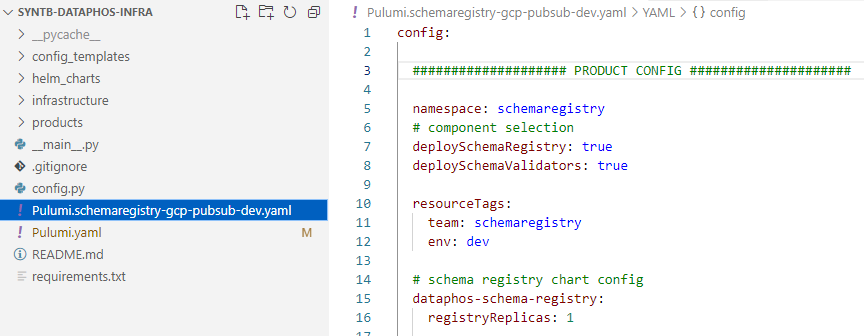

What we really like about the Pulumi project structure is that the user practically only needs to worry about one file: the YAML configuration file – a single source of truth for configuring everything:

The Pulumi.schemaregistry-gcp-pubsub-dev.yaml file used for configuring the schemaregistry-gcp-pubsub-dev stack

This eliminates many cumbersome steps we needed to do in Terraform:

- jumping from one component configuration file to another to check/change something

- checking three files for every component: main.tf, variables.tf and terraform.tfvars

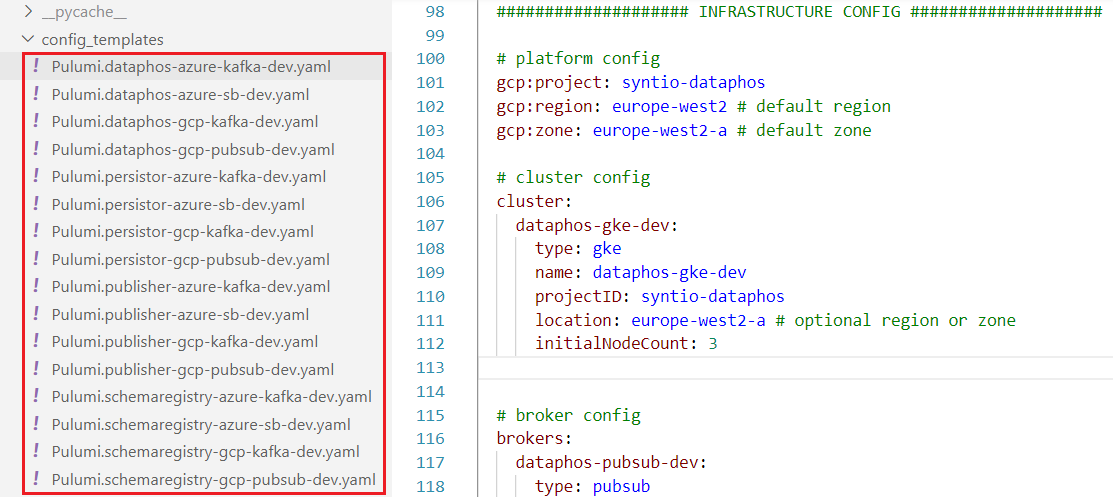

We extracted the maximum from this feature by introducing a central storage for many Dataphos platform configuration combinations a user might want to deploy (different cloud providers, different Dataphos components a user might/might not need) – the config_templates directory:

Prepared instances of product and platform configurations

The user can choose a configuration template that closely resembles what they want to deploy and then change some minor details if needed.
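Adapting a template amounts to overlaying a few user-specific values on a prepared configuration. The sketch below shows that idea with a recursive dictionary merge in plain Python; the `apply_overrides` helper and the keys in `template` are invented for illustration and do not reflect the actual contents of the config_templates directory.

```python
import copy


def apply_overrides(template: dict, overrides: dict) -> dict:
    """Return a copy of `template` with `overrides` merged in recursively."""
    result = copy.deepcopy(template)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            # Merge nested sections instead of replacing them wholesale.
            result[key] = apply_overrides(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Hypothetical template and overrides, for illustration only.
template = {
    "broker": {"type": "pubsub", "topic": "input-topic"},
    "region": "europe-west1",
}
overrides = {"broker": {"topic": "orders-input"}}

config = apply_overrides(template, overrides)
print(config)
```

The template itself is left untouched, so the same prepared configuration can serve as the starting point for any number of deployments.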

Choosing Open Source with Pulumi

We used the free versions of both Terraform and Pulumi, but Pulumi goes one step further: its open-source engine and SDKs are released under the Apache License, Version 2.0. This license encourages collaboration, permits widespread use and distribution of the software, and maintains a balance between legal protections and openness.

This is an important argument for us because adopting open-source software is a wonderful fit with our commitment to flexibility and openness.

Conclusion

For a very long time, Terraform was the preferred IaC tool for our platform and products. As a result of our work with clients and our desire to keep current with the industry, we have concluded that our platform requires a solution that is more in line with the DevOps philosophy, adaptable, modular, user- and developer-friendly, and, most importantly, open-source.

As a result, we decided to make Pulumi the main IaC tool for our data platform. Both clients and developers are quite happy with the new way of infrastructure deployment. Since we place a high value on the user experience with our products, we are certain that we made the right decision.