Daniel Sandor, Nikola Tomazin and Vedran Kolka

DATA ENGINEERS

Introduction

In the fast-paced world of modern business, adaptability and efficiency are paramount. Every decision, from choosing the right software to optimizing workflow processes, plays a crucial role in determining an organization’s success. Data management is at the heart of these decisions in this digital age, and the debate over on-premise databases versus cloud databases has never been more relevant.

For years, on-premise databases have been the go-to choice for many organizations. They offered a sense of control and familiarity, allowing companies to store their precious data within the confines of their physical infrastructure. However, as technology continues to evolve, so do the pain points associated with maintaining on-premise databases like opaque costs, overpricing, restricting licensing, and vendor lock-in.

In December 2022 Google announced the general availability of AlloyDB for PostgreSQL, a fully-managed, PostgreSQL-compatible database service that provides a powerful option for modernizing from legacy, proprietary databases and for scaling existing PostgreSQL workloads.

Choosing AlloyDB as your cloud database allows you to:

- Scale your application to millions of users

- Work with open-source technologies that will give you both openness and scalability. AlloyDB joins the best parts of PostgreSQL and Google Cloud to do just that

- Leverage superior performance, scale, and availability

- Tune your database with a transparent and predictable pricing model in mind

- Utilize the same technology and database schemas for your transactional (OLTP) and analytical (OLAP) workloads by using a clever hybrid row-based and columnar query engine

To transfer data to AlloyDB, a good practice would be to use a custom or managed ETL tool that allows you to extract data from the source using SQL or tool-specific syntax.

In 2023, we released one such ETL tool as part of our enterprise-class, real-time, data platform offering. Dataphos Publisher, as the ingestion mechanism of the Dataphos data platform, connects to both JDBC-compliant databases and API sources to extract data.

Along with extracting data, the Publisher does the following:

- Immediately creates business-ready objects at the source

- Serializes business-ready objects to standard data formats for a performant data transfer

- Optionally encrypts these objects for a safe data transfer across the public Internet

- Captures both historical and real-time data

The Dataphos Publisher represents an alternative to the classic approaches of using a CDC (Change Data Capture) solution to capture database activity or to replicate the whole database a few times a day. These solutions can be costly, unstable, and slower.

This article will explore how to migrate data from an on-premises source system to the cloud, i.e., AlloyDB, in a business-ready format. This data extraction process will enable immediate data analysis and swift decision-making. Business object definition is made easy through the Publisher’s configuration. This allows multiple domain teams to easily create different business objects based on their business domain, and send these objects into a decentralized data platform (Data Mesh) for cross-domain data analysis.

In this article, order management in a retail system was chosen as the domain, Google Cloud Pub/Sub as the central intermediary component, and Google Cloud AlloyDB as the destination for the operational/analytical business objects.

Migrating on-premises data to the cloud

On-premises database systems have often been used to store operational data. Reasons for using on-premises systems vary, from security and performance to following standard company practices. Having your application and the database close together, in a private network, does ensure secure and low-latency communication between them, however, it comes with some costs.

On-premises systems are deployed on machines of a certain size and processing power. This configuration is static and difficult to change. Application load however isn’t static. It changes during the application lifecycle. Unexpectedly high application load will cause performance degradation, and lower than expected application load wastes purchased resources. Every system is also prone to errors from time to time. Dealing with these errors can be difficult. Manual intervention is required, and monitoring and administration efforts become cumbersome.

Operational systems are only half the story of a successful business application. With every operational system, there should be a system designated specifically for business analysis, usually an entirely different database schema and storage format. This leaves you with the need for a separate database system.

You could also use a separate on-premises machine for this purpose. This design choice, however, doubles your administrative effort and leaves you with the same scalability and error-handling issues.

Retail systems problem

Successful retailers depend on quick business decision-making and around-the-clock availability to customers. This means, their operational and analytics systems must be scalable to accommodate spikes and dips in sales, always available and fast to allow a good user experience, and have a great deal of security to ensure user information safety.

As the retailer’s business develops, the amount of generated data grows on an exponential scale, and on-premises systems cannot follow this trend.

The optimal design choice for the database system, in that case, would be to use the cloud’s scalable and third-party managed services combined with a database performance high enough to warrant a move from an on-premises system. Also, optimally, you wouldn’t need to manage separate systems for operational and analytical purposes.

Retail systems solution

To solve this problem, you could choose to transfer your operational data to AlloyDB. This will provide you with a high-performance, scalable, and fully managed database system that can act as an operational and analytical data store.

You could use the Dataphos Publisher to transfer your data in a business-ready format in real-time to the cloud. From there you can consume it as you see fit. Store it in your AlloyDB database, store it in a separate database system, persist it for data lineage and archiving reasons, and so on.

After all your historical data is transferred, you could choose to use AlloyDB as your main database system directly. You could also continue transferring data from the on-premises operational system in real time and use AlloyDB only for your analytical needs.

Retail database systems consist of many complex schemas within multiple domains. Warehouses, customers, orders, invoices, and so on. Each domain should be handled separately.

This article will use the order domain to showcase the transfer of data from the on-premises system to AlloyDB.

Architecture

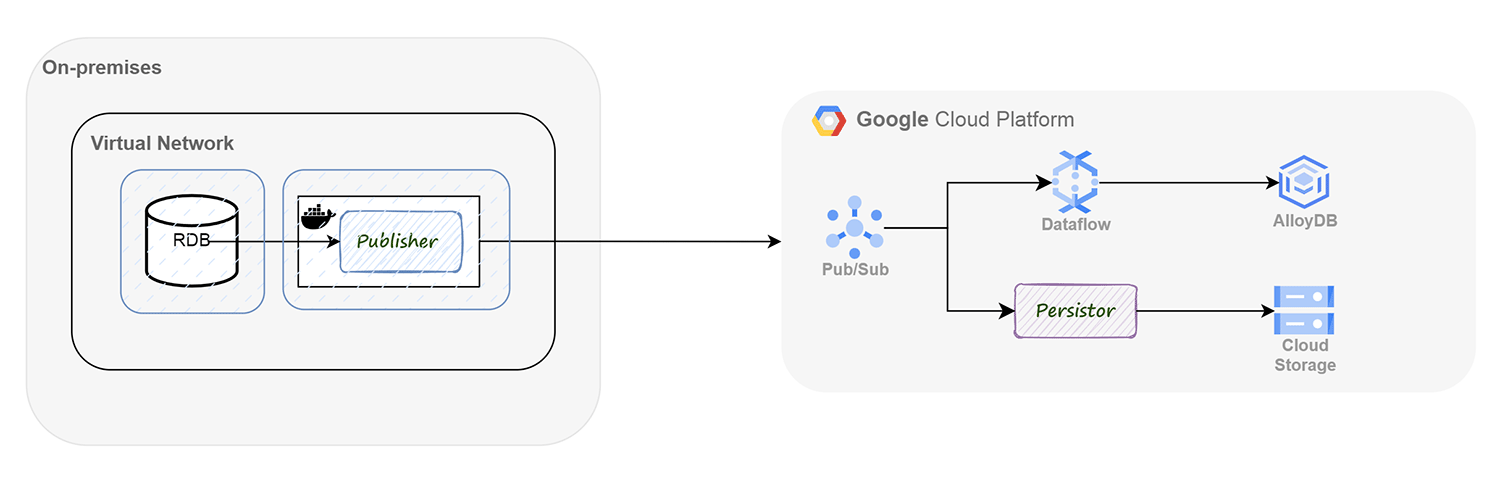

To move data as business-ready objects from an on-premises source to the cloud, we propose the following architecture.

Solution architecture

Dataphos Publisher deployed close to the source database

- Reads data from the source database

- Formats business objects and automatically generates a schema of the data

- Serializes data in Avro format

- (Optional) Encrypts data

- Publishes data to a GCP Pub/Sub topic

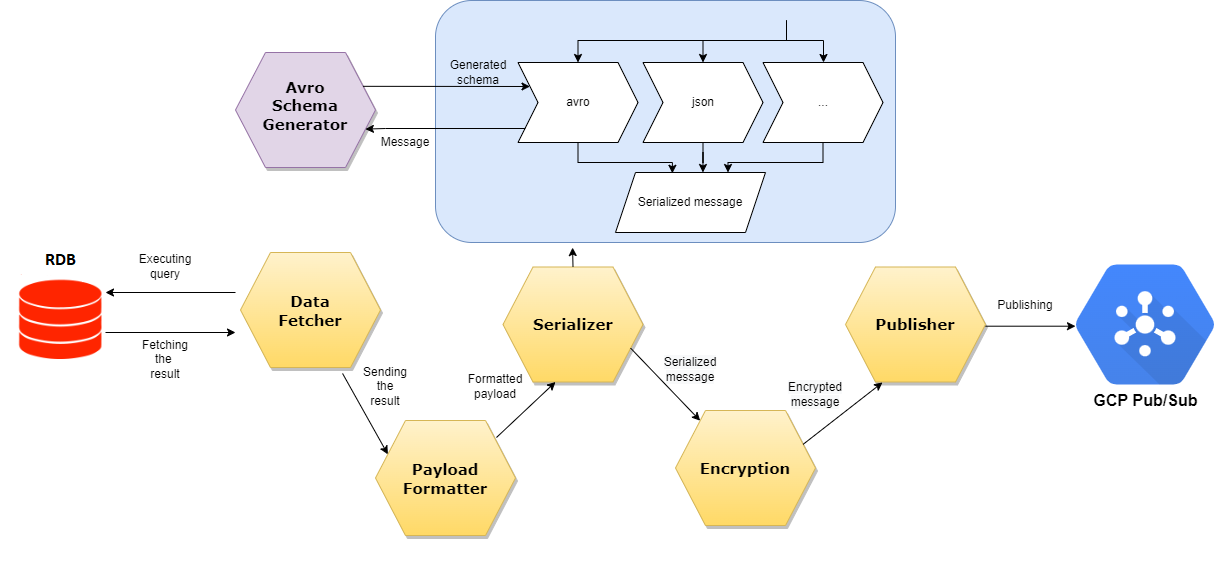

Dataphos Publisher architecture diagram

GCP Pub/Sub

- The message broker that queues the Publisher’s business objects as messages

- The central component where data can be processed in a Publish-Subscribe pattern

GCP Dataflow

- A custom Dataflow job that stores Pub/Sub data to AlloyDB

- Reads data from the Pub/Sub topic

- Parses data according to the Avro schema

- Uses a JDBC connection to store data into pre-created AlloyDB tables

(Optional) Dataphos Persistor

- Another Dataphos product that stores Pub/Sub data as Avro files to a data lake for data retention/resubmission/archiving purposes

AlloyDB

- The highly-available, high-performance database storage for the business objects created by Dataphos Publisher

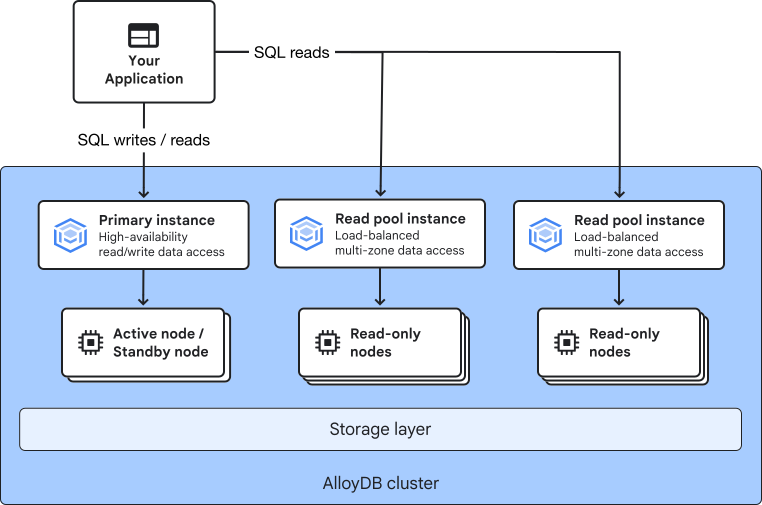

AlloyDB high-level architecture diagram

Implementation details are available at a GitHub repository: GitHub – syntio/poc-publisher-to-alloydb

Component overview

Dataphos Publisher and Persistor

Dataphos Publisher is a component developed for running a constant flow of ready-to-digest data packets across your Cloud infrastructure – sourced directly from a database or an exposed API. Whereas most Change Data Capture (CDC) solutions focus on capturing and transmitting changes on individual tables, Dataphos Publisher focuses on forming well-defined, structured objects directly at the source, publishing them to a distributed message broker of your choice, and allowing a multitude of consumers to independently consume and process the data in their pipelines.

Collectively, this is known as a create-transform-publish pattern. The structured objects generated by the Publisher are what we refer to as business objects – you are no longer pushing technical data on changes in your database, but curated key business information.

As a user, you define the query and how the result of that query should be assembled into a business object with a minimalist and easy-to-understand YAML configuration. Dataphos Publisher takes care of the rest – assembling the data, serializing it, encrypting it, and – finally – publishing it.

Dataphos Publisher is suitable for ingesting historic data and capturing real-time changes in your source systems. You define the starting point for the Publisher’s workers and whether to stop at a certain point or to continuously capture incoming events to the source system.

Dataphos Persistor is a stateless component that efficiently stores messages collected from a message broker topic onto a well-structured data lake. It automatically performs indexation of messages, allowing for their later retrieval and resubmission.

It is designed to eliminate the need for re-submission requests from the original publishers (which is often impossible) and to accelerate the building of well-established data pipelines in general – utilizing the structured storage as a new source of data in itself.

The data can be stored in batches (in Avro format) or as individual messages.

Our use case

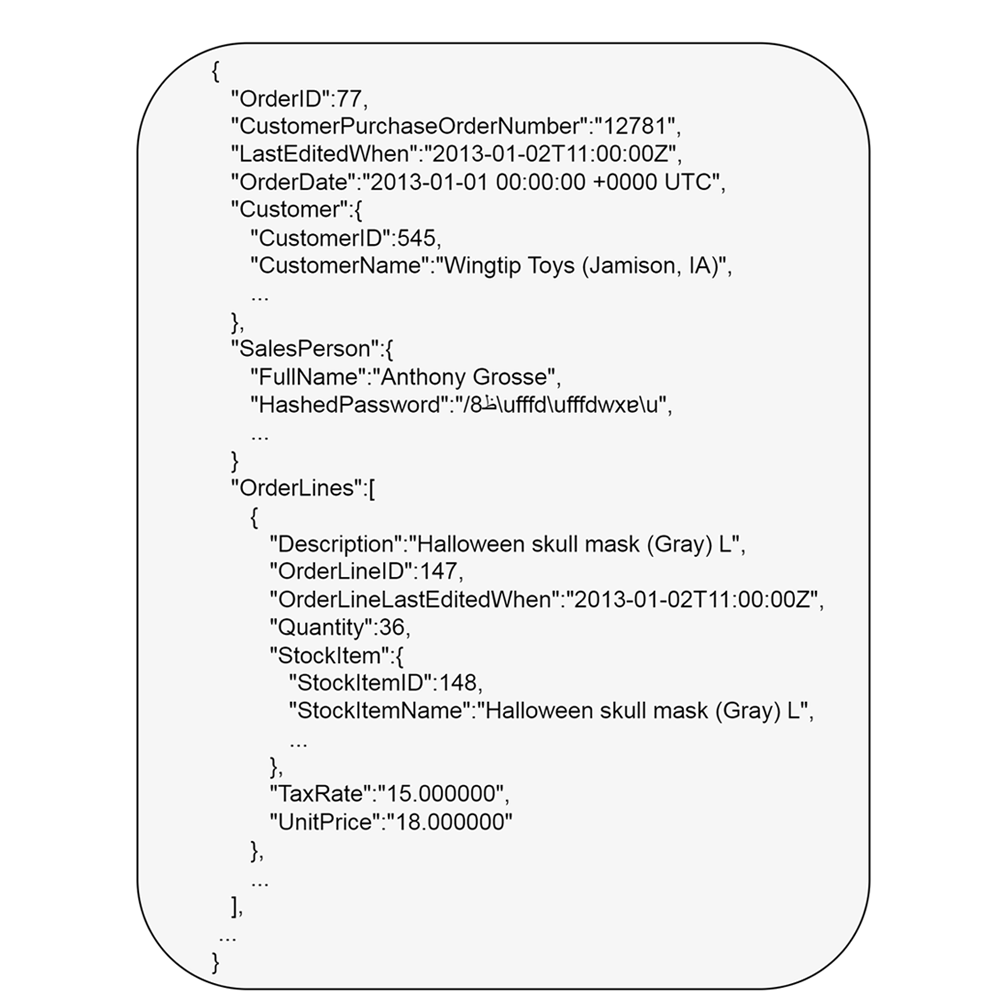

In our example data pipeline, Dataphos Publisher was used to create business objects that represent individual orders placed in a retail operational system.

Dataphos Publisher connected to the source database and formatted a business object containing the order with the salesperson, customer, and delivery information. Each order includes one or more order items. Order items are enriched with the package type and stock item information. The following is an example of a business object formatted by Publisher.

The created business objects were serialized in Avro format and published to a GCP Pub/Sub topic.

Dataphos Publisher business object example

Dataflow

Dataflow is a Google Cloud service that provides unified stream and batch data processing at scale. Dataflow is used to create data pipelines that read from one or more sources, transform the data, and write the data to a destination.

Our use case

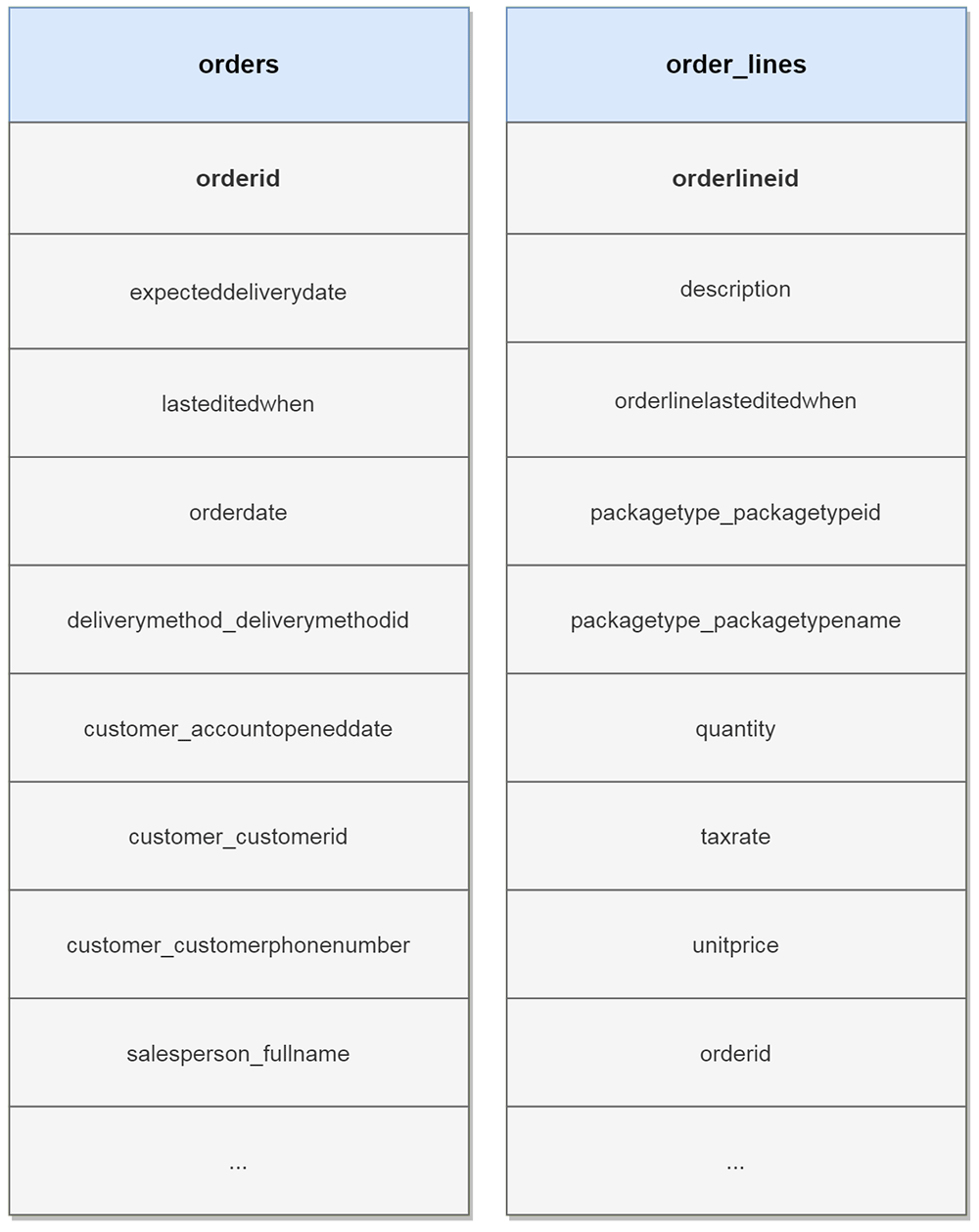

A Dataflow job was used to read order business objects from a GCP Pub/Sub topic, transform them in a structure expected by the destination AlloyDB database, and insert records in the prepared AlloyDB database tables using a JDBC connection. The transformation split orders and order lines (items) into separate objects, and prepared values for the insert into the AlloyDB tables.

AlloyDB

AlloyDB for PostgreSQL is a fully managed, PostgreSQL-compatible database service that’s designed for your most demanding workloads, including hybrid transactional and analytical processing. AlloyDB pairs a Google-built database engine with a cloud-based, multi-node architecture to deliver enterprise-grade performance, reliability, and availability.

Performance

- You can find the transactional and analytics performance metrics here:

- https://services.google.com/fh/files/misc/alloydb_oltp_benchmarking_guide.pdf

- https://services.google.com/fh/files/misc/alloydb_olap_benchmarking_guide.pdf

Reliability → SLA 99.99% availability, including maintenance

Features → all expected PostgreSQL features

- Concurrency

- PostgreSQL’s Multi-Version Concurrency Control that supports all serialization levels (including “serializable”)

- Two-phase locking for safely running distributed transactions

- Foreign keys

- Indexes

- B-tree, Hash, GIST, SP-GIST, GIN, and BRIN index types

- SQL and stored procedures

- Optimized storage architecture

- Write-ahead logs are written synchronously to the storage layer and database blocks are then asynchronously written to storage by the Log Processing Service component

Machine learning and automatic workload adaptation

- AlloyDB uses machine learning techniques for automatic vacuum management, storage and memory management, multi-layered data tiering, and analytical query acceleration

- AlloyDB learns from your workload and adapts for optimal performance

- AlloyDB organizes data across working memory, ultra-fast cache, and durable storage based on column data types, column contents, and common query analysis

Columnar query engine

- AlloyDB populates data in the working memory or cache based on column structure and usage analysis

- Data is stored in row-based and/or columnar format, and the format is continuously refreshed based on running queries

- Interchangeable data formats allow the query engine to choose between a columnar, row-based, or hybrid data representation at the query execution time, on a per-column basis

Ideal choice for:

- Enterprise-class applications → allow the operational and analytical data to reside in the same database, with optimal query performance, high availability, and scalability in any type of application load

Our use case

AlloyDB was used to store business objects formatted by the Dataphos Publisher. Business objects were inserted into denormalized AlloyDB tables. The data could then be used for analytical purposes.

Orders and order items were separated into two tables. The following is a simplified diagram of the two tables.

AlloyDB tables schema

Now that we have the data in AlloyDB, we can test query performance.

Performance

Two performance tests were performed:

1. Latency difference for the Publisher flow (described in the architecture above: Source → Publisher → GCP Pub/Sub → Dataflow → AlloyDB) vs the conventional CDC flow (using the Kafka Connect Debezium source connector and the Confluent JDBC sink connector).

The first performance was tested by comparing times from the moment the Publisher or the Debezium connector caught the changes on the source database and the moment the data was inserted in AlloyDB for 1k, 10k, 50k and 150k rows. The data in source database was generated at around 200 rows per second.

The following table shows the latency of the messages arriving at the destination and the ratio how the Publisher flow performed better than the CDC flow.

| Number of rows | 1000 | 10000 | 50000 | 150000 |

| CDC Flow (seconds) | 4.96 | 4.62 | 3.37 | 3.67 |

| Publisher Flow (seconds) | 4.83 | 2.99 | 2.2 | 2.27 |

| Ratio | 1.026915114 | 1.545150502 | 1.531818182 | 1.616740088 |

| Average ratio | 1.430155971 |

It could be observed that the Publisher flow had a 40% lower latency than the CDC flow.

2. Execution time for normal PostgreSQL vs AlloyDB

The second performance was tested by running analytical queries on the collected data. The performance was compared to a standard PostgreSQL database with the same underlying hardware and the same dataset.

Three analytical SELECT queries of increasing demand levels on the database query engine were executed multiple times (ten times). The average execution duration was recorded for each of the three queries.

The following table shows query execution times and the number of times AlloyDB performed better than non-AlloyDB PostgreSQL.

| Query | Execution time [ms] – PostgreSQL | Execution time [ms] – AlloyDB | Performance increase [ratio] |

| Total amount spent by each customer per month in 2020 | 1372.643 | 409.072 | 3.36 |

| Number of sales per salesperson each year | 1973.080 | 431.572 | 4.57 |

| Most sold products | 827.181 | 23.335 | 35.45 |

It could be observed that AlloyDB handled analytical queries for a dataset of one million orders up to 35 times better than the PostgreSQL.

Benefits

The following are some benefits of using the proposed architecture and/or the mentioned technologies in general.

AlloyDB

The biggest benefit of using AlloyDB is the certainty of consistently good performance and database reliability no matter the workload.

AlloyDB offers superior performance for both transactional and analytical processing compared to standard PostgreSQL. Transactional queries are up to 4 times faster, and analytical queries are up to 100 times faster than standard PostgreSQL, depending on the dataset and SQL queries. Because of machine learning-empowered techniques, AlloyDB is a good choice for both an operational database and an analytical data store. It enables applications to reliably store operational data, and business analysts to make fast, real-time decisions based on the stored data without the need for dimensional modeling.

PostgreSQL is an open standard and a staple in application development across industries. It has an engaged community of developers that add features and extensions. However, production-ready PostgreSQL environments need a lot of maintenance and manual administration. Scaling vertically and horizontally comes at the cost of the database administrator’s time and potential application downtime.

AlloyDB offers the same standard with support for managed scalability, redundancy, and replication for high availability, automatic leader promotion, and much more, by utilizing the power of the cloud. Performance can be optimized by adding database instances dedicated to optimizing read queries (read pools). Machines can easily be scaled vertically to increase available resources. Storage and computing are kept separate, which allows separate scaling of resources based on your needs. The cloud offers virtually unlimited amounts of memory and computing at the click of a button. The cost is straightforward to calculate.

Migrate your on-premises data to AlloyDB to gain on application performance, both operational and analytical, maintainability, performance monitoring, and general simplicity and ease of use.

Dataphos Publisher

The biggest benefit of using Dataphos Publisher is moving the load of business object creation from the database system to the ETL tool/data source. The Publisher uses a simple YAML syntax for defining business object structure. This simplicity and the speed of execution enable fast and cost-effective data transformation, serialization, encryption, and publishing. The Publisher gives you the possibility to access structured business data faster and more frequently, allowing you to make better-informed business decisions.

The Publisher reduces network overhead by serializing data in the Avro format, with JSON also being supported. Generally small data packets can be safely sent over the public Internet because of Publisher’s data encryption, avoiding the need for private network configuration.

The business objects created by the Publisher can be published to most of the popular message broker services (GCP Pub/Sub, Apache Kafka, Apache Pulsar, NATS, …). These technologies natively support the publish-subscribe pattern. Meaning, multiple applications can consume the data that the Publisher creates, and process the data in a domain-specific way. Migrate your data from on-premises systems to AlloyDB, load your data into a powerful search engine like ElasticSearch, capture incoming changes to your source system and publish business objects ready for quick business analysis, and much more.

Compared to the competition, the Publisher is simpler to set up, has better performance (up to 170,000 database rows processed per second, depending on the dataset), and allows easy reprocessing, and processing of both historic and real-time data.

Use Dataphos Publisher to migrate your data from on-premises to the cloud to gain on migration performance, simplicity, transformation ability, and security.

Conclusion

The days of on-premises systems being the optimal solution are gone. The cloud offers managed resources at a manageable cost but with increased simplicity, availability, and scalability.

The process of migrating your on-premises systems to the cloud can be expensive, complicated, and time-intensive. To provide better support for this process, the Dataphos Publisher was developed. It offers migration of data from the on-premises system to the cloud in the form of objects ready for business analytics at a fraction of the cost and time.

If you require a fast, reliable, and scalable database system that can handle operational and analytical data, like the business objects created by Dataphos Publisher, GCP AlloyDB is the right choice for you. Use AlloyDB to migrate your on-premises systems to the cloud, and seamlessly transition to it as your main data store.

If you are familiar with PostgreSQL, you will be on familiar ground. All you need to do to get started is create a simple AlloyDB instance on your GCP project, and you have a GCP-managed high-performance PostgreSQL database at your disposal. Simply scale resources depending on your further needs.

The clever query execution engine implemented by AlloyDB will provide you with the best performance for all your database needs without any further intervention.

References

https://services.google.com/fh/files/misc/alloydb_olap_benchmarking_guide.pdf

https://services.google.com/fh/files/misc/alloydb_oltp_benchmarking_guide.pdf

https://cloud.google.com/alloydb

AlloyDB overview | AlloyDB for PostgreSQL | Google Cloud

New AlloyDB for PostgreSQL frees you from legacy databases | Google Cloud Blog