Dominik Vrbanic

DATA ENGINEER

Introduction

Knative (a blend of "Kubernetes" and "native") is an open-source project built on Kubernetes for building, deploying, and managing serverless workloads that run in the cloud, on-premises, or in a third-party data center. It combines two popular concepts: serverless computing and container orchestration with Kubernetes.

The main idea behind Knative is to give end users a simplified and enhanced way of working with Kubernetes: not a replacement, but a higher-level interface that hides the complexity of the platform and provides a better user experience.

Components

Knative itself runs on Kubernetes and has two main components: Knative Serving and Knative Eventing. Knative Serving is an abstraction layer over Kubernetes, which provides most of the benefits people talk about when discussing Knative. On the other hand, Knative Eventing provides an abstraction layer over message brokers.

Along with Serving and Eventing, earlier versions also had a third component called Knative Build, which was responsible for building container images from application source code. Knative Build was the first part to be split off, and it became the Tekton project. The reason cited for its deprecation was that building and pushing an image for a service should not be one of Knative's core responsibilities; instead, that responsibility is left to any project capable of building images on Kubernetes.

Knative Serving

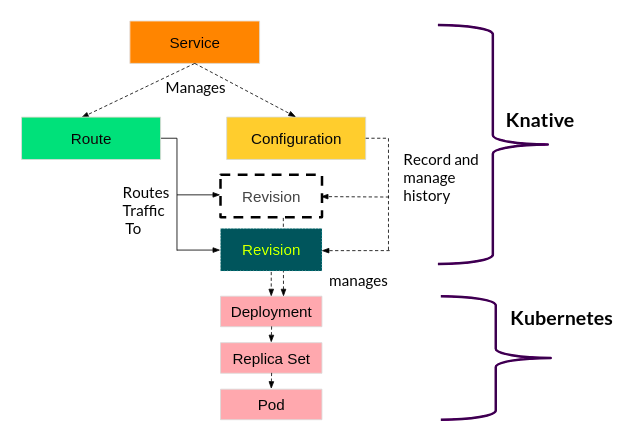

The Serving component is responsible for deploying and scaling your applications. The primary Knative Serving resources are Services, Routes, Configurations, and Revisions. Let's break down the role of each:

- Services: A Service automatically manages the whole lifecycle of your workload. It controls the creation of the other objects to ensure that your application has a route, a configuration, and a new revision for each update of the service. A service can be defined to always route traffic to the latest revision or to a pinned revision.

- Routes: A Route lets developers control how much traffic flows to one or more revisions. For example, you could expose only a fraction of users to a recent version, test how they respond, and then gradually route more traffic to that new version.

- Configurations: A Configuration is used for managing the different versions of a service. Every time a new feature is deployed or an existing container is modified, Knative keeps the previous version and creates a new one with the latest changes. These versions are called revisions.

- Revisions: A Revision is a point-in-time snapshot of the code and configuration for each modification made to the workload. Revisions are immutable objects and can be retained for as long as they are useful. Knative Serving scales revisions up and down automatically according to incoming traffic. The other major benefit of revisions is rollbacks, which let you return to any earlier version of your application.

Knative Serving resources (How Knative unleashes the power of serverless)

Unlike plain Kubernetes, where you have to create all the resources yourself (Deployments, Services, Ingresses, etc.), Knative lets you create a single resource, the Knative Service, and then creates the objects described above for you. In addition, the defaults are set so that you can run your application and access it externally right out of the box, with just one command or by applying a YAML file that defines a Service. This YAML file specifies metadata about the application, points to the hosted image of the app, and allows the Service to be configured. For many practical use cases, you will need to tweak those defaults and adjust the traffic distribution among the service's revisions. By setting the traffic specification, you can split traffic across any number of revisions (e.g., for testing purposes).
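
As a rough illustration of the single-resource workflow described above, a minimal Service manifest might look like the sketch below. The service name `hello` and the sample image are placeholders chosen for illustration (they do not come from this post). Everything left unspecified, including routing and autoscaling, falls back to Knative's defaults.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello # hypothetical service name
spec:
  template:
    spec:
      containers:
        # Assumed sample image; replace with your own application image
        - image: ghcr.io/knative/helloworld-go:latest
          ports:
            - containerPort: 8080 # port the container listens on
```

Saving this to a file (say, `service.yaml`, a name assumed here) and running `kubectl apply -f service.yaml` should be enough for Knative to create the Configuration, the first Revision, and the Route on your behalf.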

Knative Serving also provides automatic scaling, or autoscaling, so that applications match incoming demand. Knative can scale a service out to thousands of pods if needed, or scale it down to one or even zero. This differs from the standard Kubernetes Horizontal Pod Autoscaler, which by default keeps at least one pod running at all times.

If an application or service is not receiving any traffic, the Knative Pod Autoscaler (KPA) scales it down to zero, which means that no replicas of the application are left running.

If there is a small amount of traffic, the replicas are scaled down to the minimum number needed to handle the incoming requests. In the same way, replicas are scaled up to meet any surge in traffic and requests.
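
The scaling bounds can be sketched with per-revision annotations. The example below assumes the `min-scale` and `max-scale` annotation keys used by recent Knative Serving releases. The service name, image, and the limit of 20 are placeholders. Leaving `min-scale` at `"0"` keeps scale-to-zero enabled, while a value of `"1"` would keep one replica warm at the cost of never scaling to zero.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello # hypothetical service name
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/min-scale: "0" # allow scale-to-zero when idle
        autoscaling.knative.dev/max-scale: "20" # cap replicas during traffic surges
    spec:
      containers:
        # Assumed sample image; replace with your own application image
        - image: ghcr.io/knative/helloworld-go:latest
```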

You can configure some autoscaling options, such as:

- the type of autoscaler used (e.g., KPA or HPA)

- the type of metric it consumes (e.g., concurrency or requests per second)

- its target (the value that it tries to maintain)

Depending on your preferences, the YAML file can look something like this:

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: <your-service-name>
      namespace: <your-namespace-name>
    spec:
      traffic:
        - revisionName: <first-revision-name>
          percent: 100 # All traffic is routed to the first revision
        - revisionName: <second-revision-name>
          percent: 0 # 0% of traffic routed to the second revision
          tag: v2 # A named route
      template:
        metadata:
          annotations:
            autoscaling.knative.dev/class: kpa.autoscaling.knative.dev
            autoscaling.knative.dev/metric: concurrency # Knative concurrency-based autoscaling (default)
            autoscaling.knative.dev/target: "10" # Target 10 requests in-flight per pod
            autoscaling.knative.dev/max-scale: "100" # Limit scaling to 100 pods
        spec:
          containers:
            - image: gcr.io/knative-releases/knative.dev/eventing/cmd/event_display

Knative Eventing

Knative Eventing is the part of Knative that supports event-driven architecture in a serverless way. It provides a set of tools and resources for routing events from various sources to sinks, enabling developers to build event-driven serverless applications.
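
To make the source-to-sink idea concrete, here is a minimal sketch using Knative's built-in PingSource, which emits an event on a cron schedule. The resource name, the payload, and the sink Service `event-display` are assumptions made for illustration. The sink is expected to be an existing Knative Service that accepts HTTP POST requests.

```yaml
apiVersion: sources.knative.dev/v1
kind: PingSource
metadata:
  name: ping-every-minute # hypothetical source name
spec:
  schedule: "*/1 * * * *" # cron expression: fire once a minute
  contentType: "application/json"
  data: '{"message": "Hello from PingSource"}' # illustrative payload
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display # assumed, pre-existing Knative Service
```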

Developers simply define event triggers and their associated containers, and Knative does the work. When an event occurs, it is sent to Knative using a standard HTTP POST request. Knative then routes these events to the appropriate event sinks (also known as event consumers), which can include serverless functions, containers, or other applications. Once an event is routed to an event sink, the associated container is triggered and the event is processed. These events follow the CloudEvents specification, which enables creating, parsing, sending, and receiving events in any programming language.
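
One way to express "an event trigger and its associated container" is a Broker with a Trigger, sketched below. The event type `com.example.order.created` and the subscriber Service `order-processor` are hypothetical names. The Trigger forwards only events whose CloudEvents `type` attribute matches the filter.

```yaml
apiVersion: eventing.knative.dev/v1
kind: Broker
metadata:
  name: default # broker that receives incoming events
---
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: order-created-trigger # hypothetical trigger name
spec:
  broker: default
  filter:
    attributes:
      type: com.example.order.created # hypothetical CloudEvents type to match
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: order-processor # hypothetical Knative Service acting as the sink
```

Because the subscriber is a Knative Service, it can sit at zero replicas until a matching event arrives.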

Conclusion

In summary, Knative brings together the practices that a serverless framework needs, such as ease of deployment, scale-to-zero, traffic splitting, and rollbacks. It has two main parts, Knative Serving and Knative Eventing. Knative Serving helps you manage the lifecycle of your applications and scale them to handle heavy traffic without slowing down. Knative Eventing helps you manage the way your applications communicate with each other. For developers who already use Kubernetes, Knative is an accessible and easy-to-understand extension.

Next blog post from the Knative series:

Handling Kafka Events with Knative