Martina Misic

PRINCIPAL CONSULTANT

BigQuery is just one service that Google Cloud Platform provides, which is a database service, but if your company is using the Cloud mainly for data related purposes like reporting, analytics or data science, you will probably be using BigQuery as your data platform.

I would like to share my experience / cookbook of setting up a BigQuery flat rate pricing model for a multinational company working according to Data Mesh principles, which were explained briefly in our previous blog: https://www.syntio.net/en/labs-musings/data-mesh/

A BigQuery pricing model change for this company saved millions of dollars. In the past year, if this company had been using the default pay-as-you-go (on demand) pricing for BigQuery, they would have spent 18 million dollars on BigQuery data processing. With using the flat rate model and combining it in between teams within the organisation, they only paid 1 million. In one year, instead of paying 18 million, they paid 1 million. That is 94,4% of savings. In the hope that my experience helps your company to do the same, I would like to explain the steps we took to achieve this.

Context first. This company was moving their data analytics to the Cloud. Specifically, to GCP and BigQuery as their main data platform. The company is processing data on TB and PB scale, with 500+ projects using BigQuery in multiple countries, across Europe and in the US. After a couple of months transitioning to Cloud, enterprise administrators noticed a big cost starting to show – which was that BigQuery had the second largest cost from all used cloud services.

Since the team responsible for cloud administration at an organisational level, did not have a resource that would deep dive into BigQuery alone, the task for investigation and optimizing cost for organisation came down to us: a data engineering team who had a lot of experience in using BigQuery on our own projects. Our Team Lead assigned that task to me. I got the appropriate organisation-level permissions from the cloud administrators and so, could start analysing.

But where to even begin with analysis?

First, you need to be introduced to the latest https://cloud.google.com/bigquery/pricing documentation on pricing models and cost fees. Be aware that they can vary from the time this article was written.

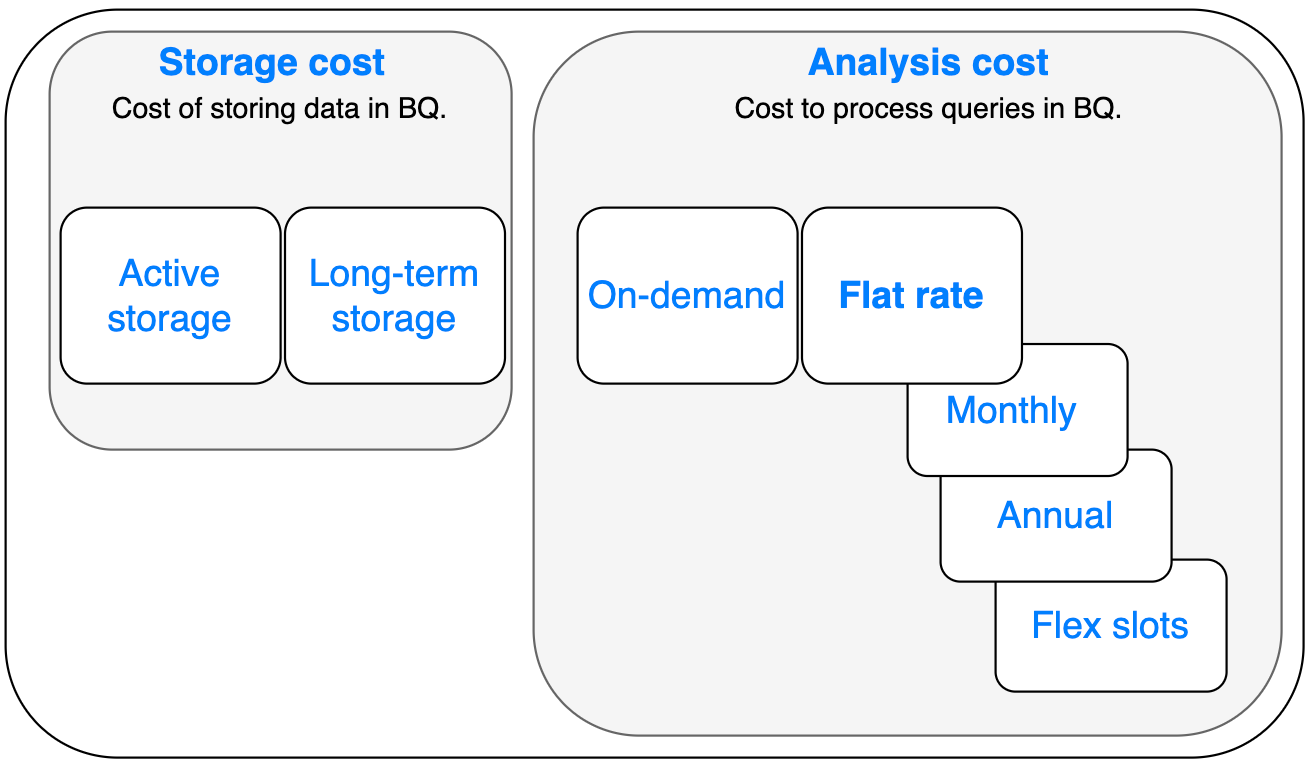

In a nutshell, there are 2 types of charges for BigQuery services – a charge for storage and a charge for processing data.

BigQuery pricing

If you are trying to manage the costs for 500+ projects, where every team does their own thing and are quite independent from one another, you cannot influence much on storage price. But you can write guidelines and advise on the best practices, monitor the data stored, create reports, and finally communicate with the highest spending teams to see if the cost is absolutely necessary. If they do need to store as much data as they do, that is the cost they will be charged for (per GB) and they should include it in their monthly budget. If they don’t need to save data for a longer time – there are retention policies available which they can set on their datasets to automatically and periodically delete the data.

The charge for data processing is where things get interesting and where we managed to make an impact with different pricing models.

- Default pricing is per TB processed (aka On demand) – depending on how many TBs you use, you pay for those TBs.

- Flat rate is the alternative – you reserve BigQuery processing units (called slots) for a flat rate fee and you process as much data as you want, and the price stays fixed.

It’s not possible to predict in advance how much to reserve. You first need to use BigQuery, monitor the workload, optimize it where possible, and then decide which pricing model fits your needs the best.

You have to spend some money in order to start saving it. You don’t want to lose the performance that BigQuery offers, just to have costs remain at the minimum possible. A big benefit of using BigQuery is the speed, so make sure to check how many slots you need.

Who can manage flat rate?

BigQuery has a good console available for overview and monitoring flat rate and on demand projects within an organisation. It is very easy to set up a reservation, and assign/remove projects from it. We performed everything manually through that console, and later implemented programatically with reservation APIs available.

Competencies needed in people managing BigQuery flat rate would be:

- Knowledge of BigQuery – understanding of slots/queries relation and ingestion patterns

- Analysing each teams usage that wants to join flat rate in order to see how they will fit in the setup

- Cost & performance analysis for teams

- Programming skills to switch from manual work as much as possible to using APIs instead

- Query optimization is a desirable skill for helping teams that might need it before committing to more slots than actually needed.

When starting with flat rate and establishing processes and automation, it will require a dedicated full-time person. Later, the work will potentially decrease and be more focused on proactive tasks, such as query optimization, more efficient scheduling, reservation predictions and similar.

How flat rate was implemented

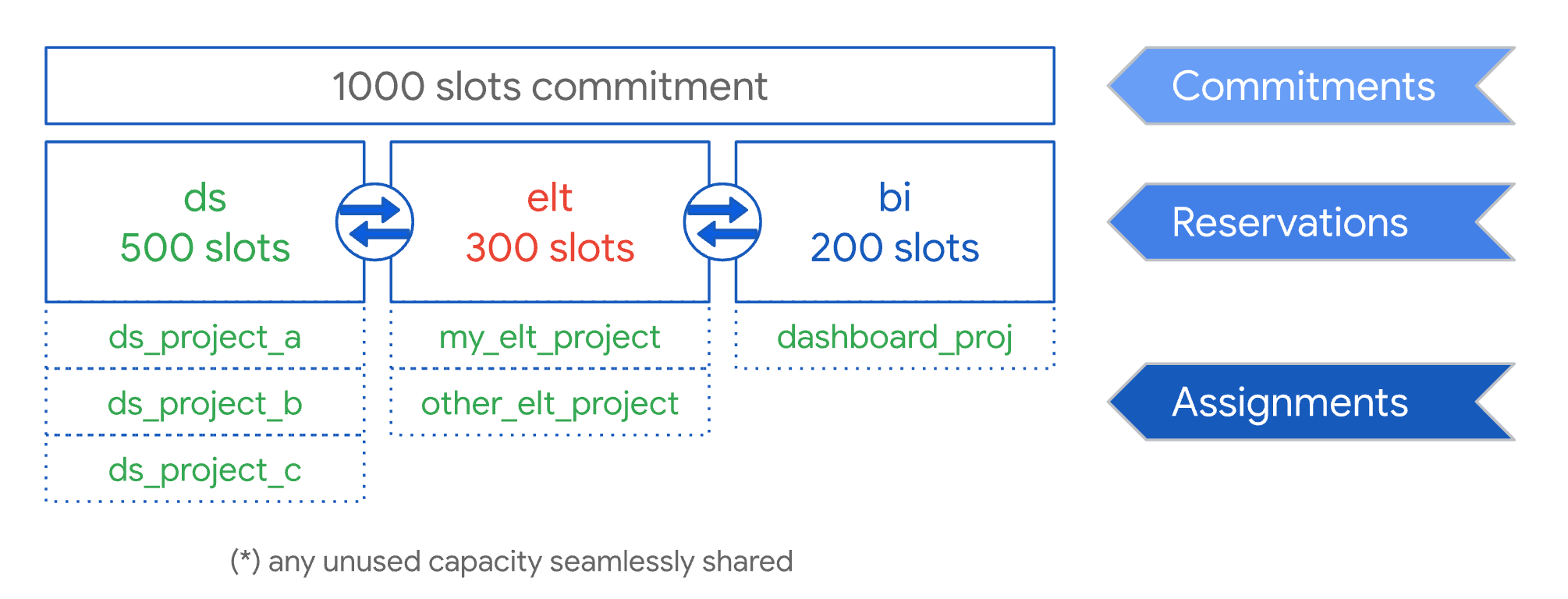

The setup is presented in the following image:

- on one admin project, we bought slots for everyone, under one commitment which can be shared amongst teams

- reservations were created for each team involved, where each team had their minimum number of slots needed

- projects were assigned to appropriate reservations

Flat rate setup

Here are some more detailed steps on how we got there:

Step 1. A new BigQuery project was created that would serve only administrative purposes of handling organisational flat rate.

Meaning that on this project we:

- created one slot commitment for the entire organisation

- purchased slots or removed them

- created reservations for different teams in the organisation

- assigned projects to reservations

- analysed how teams were using flat rate

- analysed the next high on-demand consumers to add them to flat rate

High consuming teams could have their own BigQuery flat rates, but that would not be optimal as the capacity cannot be shared. When you put multiple reservations under same commitment, then if one reservation is not being used, another team can use it if they need more for their query.

Step 2. Next thing decided was using the flat rate option to commit – in our case we chose a MONTHLY commitment. Since we wanted to have flat rate, but didn’t have much experience with it beforehand, we wanted to be able to change our setup on monthly level. Annual commitment is also available, and a bit cheaper, but then you commit to slots purchased for at least a year and you cannot decrease if needed. You can add slots, but then you prolong commitment for another year from last date of that slot purchase. Commitment will be bought on a separate BigQuery project created just for administrative purposes of organisational flat rate (the one mentioned in previous step).

Step 3. After deciding on the type of commitment, we had to see how much capacity (slots) we wanted to reserve. How did we find that out? We made a list of the highest consuming BigQuery projects because they are potential candidates for paying less if they were in flat rate. This data is available from INFORMATION_SCHEMA views on the organisational level. Side note – when you query INFORMATION_SCHEMA to find a summary cost of query jobs, exclude the SCRIPT statement type, otherwise some values might be counted twice. The SCRIPT row includes summary values for all child jobs that were executed as part of this job.

This is the query to find out the highest BigQuery consumers in November 2022 for region EU.

SELECT

SUM(total_bytes_billed)/POWER(2, 40) AS totalTBBilled,

project_id,

CAST (creation_time AS string format 'YYYYMM') AS period,

reservation_id

FROM

`region-eu`.INFORMATION_SCHEMA.JOBS_BY_ORGANIZATION

WHERE

creation_time>=TIMESTAMP("2022-11-01")

AND creation_time<=TIMESTAMP("2022-11-30")

AND statement_type!= 'SCRIPT'

GROUP BY

project_id,

reservation_id,

period

ORDER BY

1 desc;Then we checked how many slots each project is using on average throughout the day, so that we reserved that much. We didn’t want them to lose their performance, so it’s very important to reserve as much as you actually need. A slot used metric is available in BigQuery Monitoring.

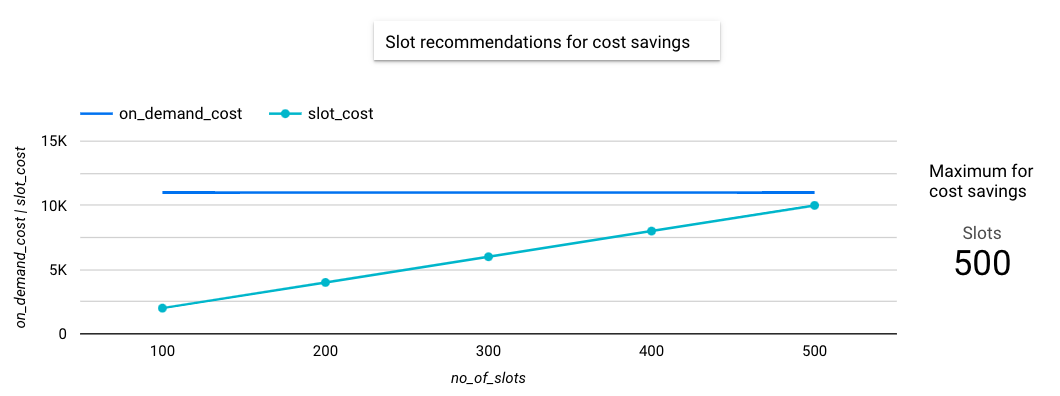

We compared the cost for:

- how much a project is charged per TB vs.

- how many slots they would need to reserve and be charged for

If the price for reserving slots was less than per TB, they would be assigned to flat rate.

Example: If monthly cost for a project is 11K $, it can benefit from flat rate with less then 500 slots

Step 4. When you bring in multiple projects under the same enterprise slot commitment, the cost is only visible on that one admin project. If you want for each team in the organisation to be responsible for their own, you have 2 options. The first, an automatic cost attribution provided from Google. And the second, distribute cost yourself with a custom script from one admin project to all others participating in flat rate.

Reservation cost attribution is a feature that lets you attribute reservation fees back to the specific query usage across any projects that used the reservation. Meaning, if multiple projects are included in a reservation, they will only pay for the portions of reserved instances that they actually used. For us it caused an issue because reservation utilisation was not at 100% all of the time, especially during the initial stages of using reservations. Initially reservation utilisation was closer to 60%. That resulted in 40% reservation left unused, and the cost charged on the main admin project. The amount that was left, also needed to be distributed and could not stay on admin project. Since we couldn’t get the agreement on fair distribution with all teams involved, including finance, we decided to opt out of automatic cost attribution and decided to have a custom script that would distribute the cost to other teams, per reservation. If a team wanted to reserve 400 slots, they would pay exactly that, regardless of the exact usage.

Step 5. Create reports for full transparency. Over time, a lot of team members and owners change within an organisation, and they might not know that their BigQuery project is in flat rate and what it means to be in flat rate. That is why it’s important to create awareness in the organisation that this exists and that it saves a lot of money. You maybe don’t want to grant access to an admin project to other people, but you can grant access to the reports created. Reports that might help include:

- List of teams included in flat rate, with slots purchased and a contact person for each team

- Cost saving report (flat rate price vs. on demand price), in order to show teams what they are gaining with flat rate

- Slot recommendations for On-demand users

- Flat rate usage report

Additional steps. In order to save more money in your organisation, not just with flat rate, there are couple more additional steps you could take for On-demand projects:

- Enforce quota restrictions – decide on a reasonable number of TBs per day that is expected as a maximum for processing and set it as a limit for all non-production projects. That way, you are avoiding development error costs! We had situations where mistakes were made in the code, and then thousands of TBs processed, which cost up to $150,000 in just one day.

- Introduce teams to Flex slots. They are also under the flat rate commitment, but it’s a commitment that can be cancelled after only one minute. It can be used when running a script with a short execution time, but very high slots and TBs. It can be incorporated in SQL scripts with DDL statements for buying slots before running a query, and then dropping them afterwards.

- Introduce teams to all pricing options. It is very important that teams understand what is best for their projects even though flat rate is managed centrally. They can react proactively and approach the team managing flat rate if they expect big data workloads in advance and avoid bigger costs.

- Implement predictions for flat rate candidates. Instead of looking at the metrics manually in the console, you can collect them and create actions on top of them for projects that are still an on-demand pricing model. You can gather daily metrics for TBs scanned per project or even better (if possible), per team. With that, you can also gather metrics for slots used, in minute intervals to get the most precise data. You can compare costs for each option on a monthly level. You can also take average usage per a couple of days and calculate how much would that be for an entire month. If the cost per TB would be higher in comparison to slots, that project might benefit from flat rate. You can enable notifications to alert you in such cases.

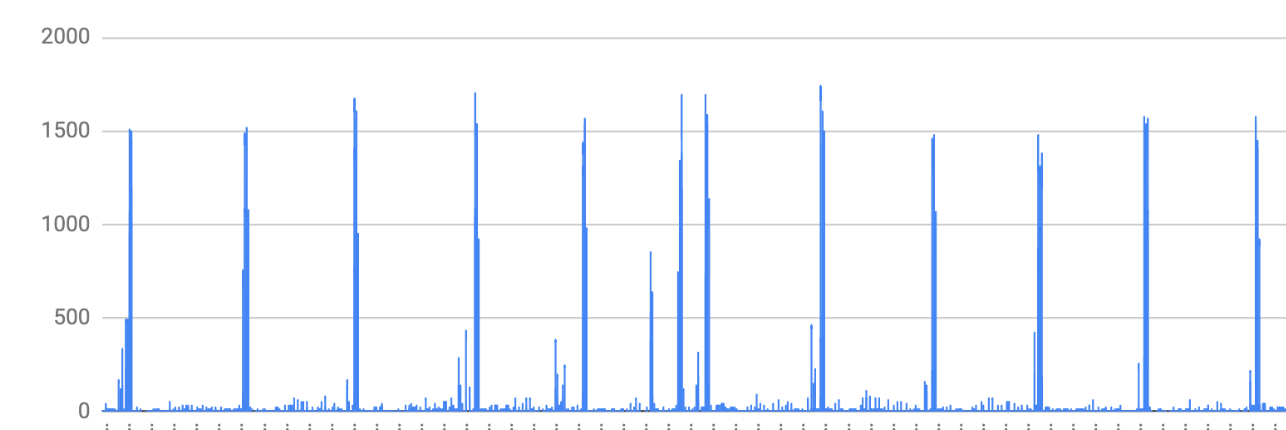

Here are some samples of different pricing model candidates:

Flat rate candidate

Flex slot candidate

On demand candidate

Results

You have one team managing commitment on organisational level. The other teams in the organisation are using reservations and saving money doing so. The other teams that are not using flat rate are well informed of their pricing options, and restricted with quotas where necessary.

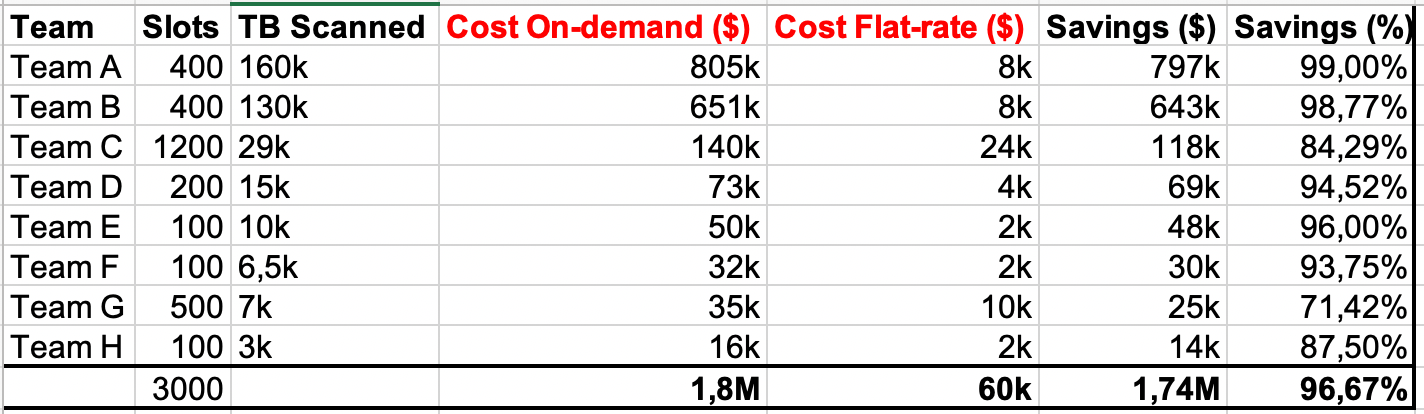

To show you in reality how the comparison for on demand vs. flat rate price looks over one month, the image below shows how it’s almost two million dollars in savings, just for these top 8 teams.

Flat rate savings compared to On demand price per TB (in one month)

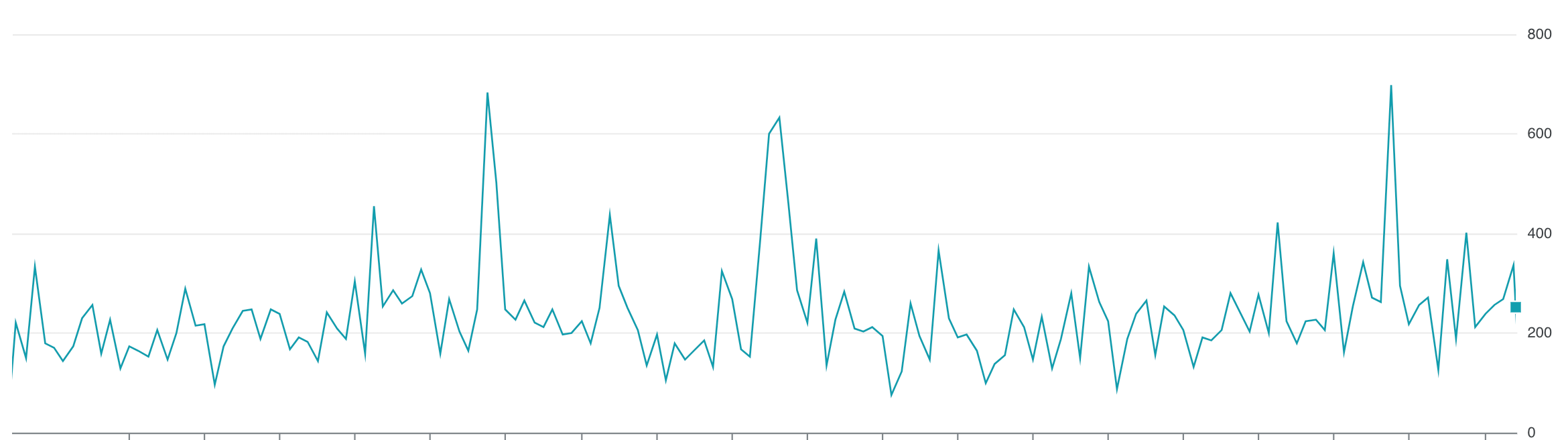

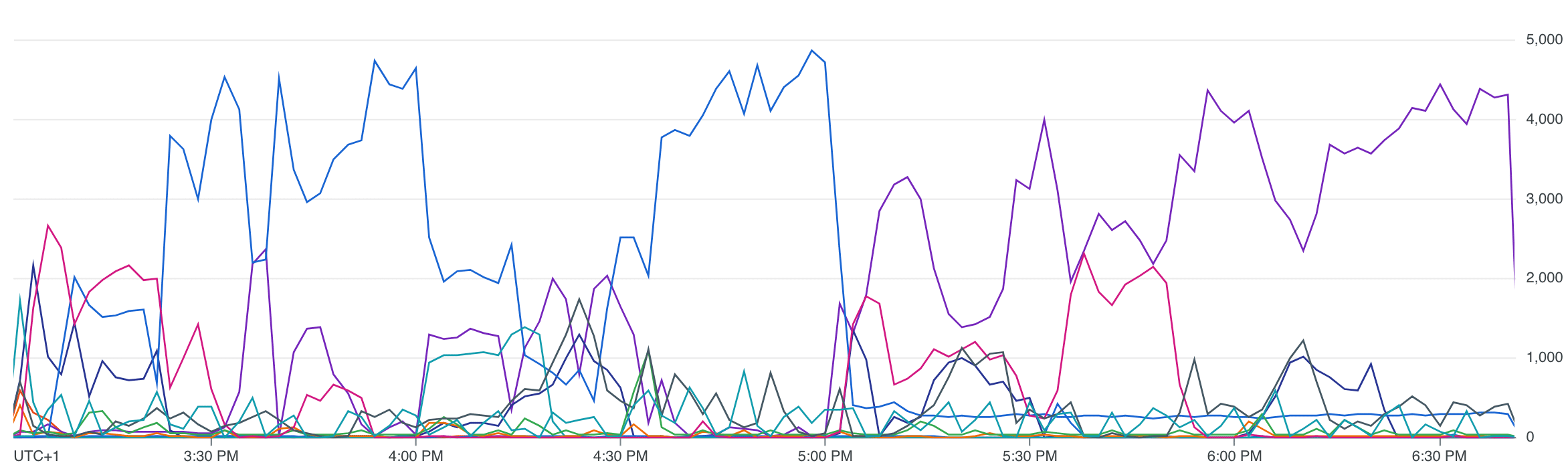

To conclude. Flat rate is a good way to limit cost. Whether we talk about large amounts of data processing in production, or avoiding unexpected costs on development due to incorrect or unoptimised queries. For better cost efficiency more teams/projects must be included, ideally with a better spread of usage over the full 24 hours of each day.

When handling reservation, we need to make sure that there is always some default number of slots available for teams, so that queries don’t wait for slots. Low performance is better than waiting on slots. We also need to make sure that a project with heavier load gets more minimum slots than some other lighter load project. When adjusting flat rate, strive to have continuous, distributed load throughout the whole time.

Flat rate slot usage per team