Borna Katovic, Jelena Metecic

DATA ENGINEERS

Introduction

In the PREVIOUS BLOG POST, we talked about machine learning on Google Cloud Platform using Persistor and Cloud Functions, but we didn’t talk much about cost. We said that using Google Cloud Functions was cheap, but that’s about it. Well, you don’t have to take our word for it anymore: we are going to take a deep dive into the pricing of all the components we used in the previous post, and compare them with an alternative approach, a tensor processing unit (TPU), which is specialized hardware tailored to artificial intelligence and machine learning applications. Keep in mind that Google has a free tier for new users, which is billed differently than described in this blog post (some things can be free).

Cloud Functions pricing

We’ve already mentioned what Cloud Functions are in a previous blog post. In this blog post we’ll be more focused on the topic of pricing and how each Google Cloud product contributes to the overall cost of your project.

You pay for Cloud Functions based on your interaction with them. It’s called pay-as-you-go pricing and it means that Google won’t bill you for having functions, but it will bill you for invoking and using them. Google Cloud Functions are priced according to how long a function runs, how many times it is invoked, and how many resources it is provisioned with. If your project spans multiple Google Cloud regions, you may also be billed data transfer fees.

Function invocations are charged at a flat rate regardless of the source of the invocation. The first 2 million invocations are free and after that they are priced at $0.40 per million invocations. If we do some quick math, that means that after the 2 million free invocations, one invocation costs $0.0000004. Pretty cheap if you ask us 🙂
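To make the arithmetic concrete, here is a minimal Python sketch of the invocation fee using the figures quoted above (2 million free invocations, then $0.40 per million). The function name `invocation_cost` and its parameters are our own illustrative choices, not part of any Google API, and we assume the free allowance applies once per billing period.

```python
def invocation_cost(invocations, free_invocations=2_000_000, price_per_million=0.40):
    """Return the invocation fee in USD for one billing period (illustrative helper)."""
    # Only invocations beyond the free allowance are billable.
    billable = max(0, invocations - free_invocations)
    return billable / 1_000_000 * price_per_million


# Inside the free allowance: nothing is billed.
print(f"${invocation_cost(1_500_000):.2f}")
# 5 million invocations: 3 million of them are billable.
print(f"${invocation_cost(5_000_000):.2f}")
```

Note that this covers only the per-invocation fee; compute time and networking are billed separately.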

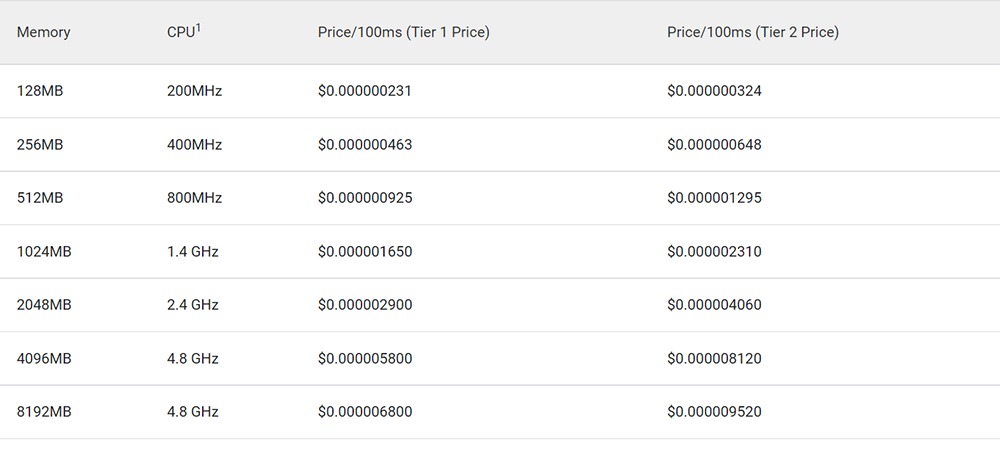

The run time (compute time) of a function is the time from when your function gets invoked to the time it finishes, hopefully after successfully completing the job, but also if an error or a timeout occurs. Run time is measured in 100ms increments, rounded up to the nearest increment. Fees for compute time vary with the amount of memory (GB-seconds) and CPU (GHz-seconds) provisioned for the function. GB-seconds are seconds of run time multiplied by the amount of memory provisioned in gigabytes (e.g. 1 GB-second is 1 second of run time with 1 GB of memory provisioned), and GHz-seconds are seconds of run time multiplied by the clock frequency of the provisioned CPU in gigahertz (e.g. 1 GHz-second is 1 second of run time with a 1 GHz CPU provisioned). The following table shows 7 different Google Cloud Function configurations and their associated costs – note that memory and CPU choices are bound together. The two price columns correspond to two pricing models, Tier 1 and Tier 2, and which one applies depends on the region.
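The compute-time rules above can be sketched in a few lines of Python: round the run time up to the next 100 ms increment, convert it to GB-seconds and GHz-seconds, and multiply by the unit prices. Everything named here is an assumption for illustration: the helper names, the example configuration (0.25 GB of memory with a 0.4 GHz CPU) and the two unit prices are placeholders, so take the real values from the pricing table for your region and tier.

```python
import math


def billed_seconds(run_time_ms):
    """Round run time up to the next 100 ms increment and return it in seconds."""
    return math.ceil(run_time_ms / 100) * 100 / 1000


def compute_cost(run_time_ms, memory_gb, cpu_ghz,
                 price_per_gb_second, price_per_ghz_second):
    """Return the compute-time fee in USD for a single invocation."""
    seconds = billed_seconds(run_time_ms)
    gb_seconds = seconds * memory_gb    # memory component
    ghz_seconds = seconds * cpu_ghz     # CPU component
    return gb_seconds * price_per_gb_second + ghz_seconds * price_per_ghz_second


# A 230 ms run is billed as 300 ms.
print(billed_seconds(230))

# Placeholder configuration and unit prices (assumptions, not quoted rates).
cost = compute_cost(
    run_time_ms=230,
    memory_gb=0.25,
    cpu_ghz=0.4,
    price_per_gb_second=0.0000025,
    price_per_ghz_second=0.0000100,
)
print(f"${cost:.10f} per invocation")
```

Because memory and CPU choices are bound together, in practice you would look up one row of the table and plug both values in at once.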

Cloud TPU pricing

TPU is short for tensor processing unit. A TPU processes tensors, which are fancy matrices (MORE ON THIS), and that is handy when you have a machine learning model that you want to train or test. However, Google Cloud TPUs can get pretty expensive, and they require a Compute Engine Virtual Machine (VM) in order to connect to and use them. We are going to break down the costs involved in having and using Google Cloud TPUs and their VMs.

TPUs and VMs are billed even if you are not actively using them. Basically, as long as you have a TPU node, you are being charged whether you use it or not. TPUs are priced in two ways. One is called single device pricing (that means you only have one TPU node running and that node cannot be connected to others) and the other is called TPU pod type pricing (for clusters containing multiple TPU devices, accessible only to those who have a 1 or 3 year commitment).

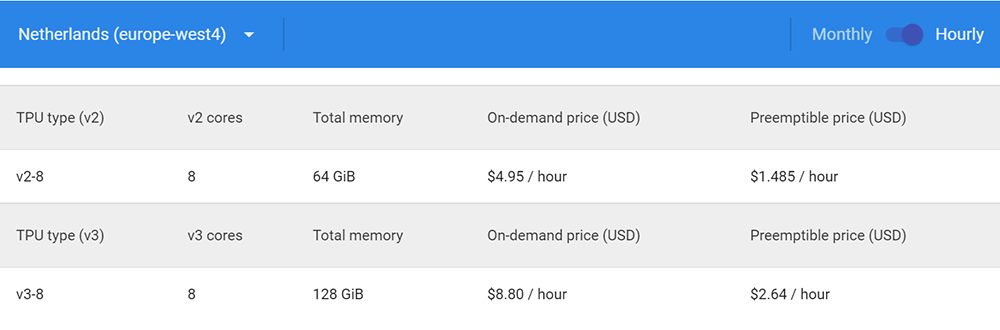

Single device TPU types are billed in one-second increments and are available at either an on-demand or PREEMPTIBLE price. Preemptible means your TPU can be shut down at any time if the overall usage is high, which results in a lower price, but also means you have to take those unexpected shutdowns into account when programming your applications. The table below shows the pricing for the europe-west4 (Netherlands) region (we used version 3-8).

Charges for TPUs and VMs are separate, so the prices you see are only for TPUs. You can learn more about VM pricing HERE. It’s pretty clear that this kind of solution for machine learning problems can get pretty expensive fairly quickly, which we will look at a little bit later.
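Since a single device TPU is billed per second for as long as the node exists, a rough estimate is just the number of seconds multiplied by the hourly rate divided by 3600. The sketch below assumes a hypothetical on-demand rate of $8.00 per hour purely for illustration; the helper name `tpu_cost` is ours, and the real rate should come from the pricing table for your TPU version and region. VM charges are not included.

```python
def tpu_cost(seconds_billed, hourly_rate_usd):
    """Cost in USD of keeping one TPU node around for the given number of seconds."""
    return seconds_billed * hourly_rate_usd / 3600


HOURLY_RATE = 8.00  # hypothetical on-demand rate in USD per hour (assumption)

training = tpu_cost(5 * 60, HOURLY_RATE)   # five minutes of actual training
idle = tpu_cost(4 * 3600, HOURLY_RATE)     # four hours of setup with the node up

print(f"5 minutes of training: ${training:.2f}")
print(f"4 hours of setup time: ${idle:.2f}")
```

The second number is the one that tends to surprise people: time spent configuring things counts exactly the same as time spent training.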

VMs vs Containers vs Serverless

Cloud Functions and Cloud TPU (along with its Compute Engine VM) represent two very different approaches to cloud computing in terms of how much control over the resources you have, and how much you pay for it.

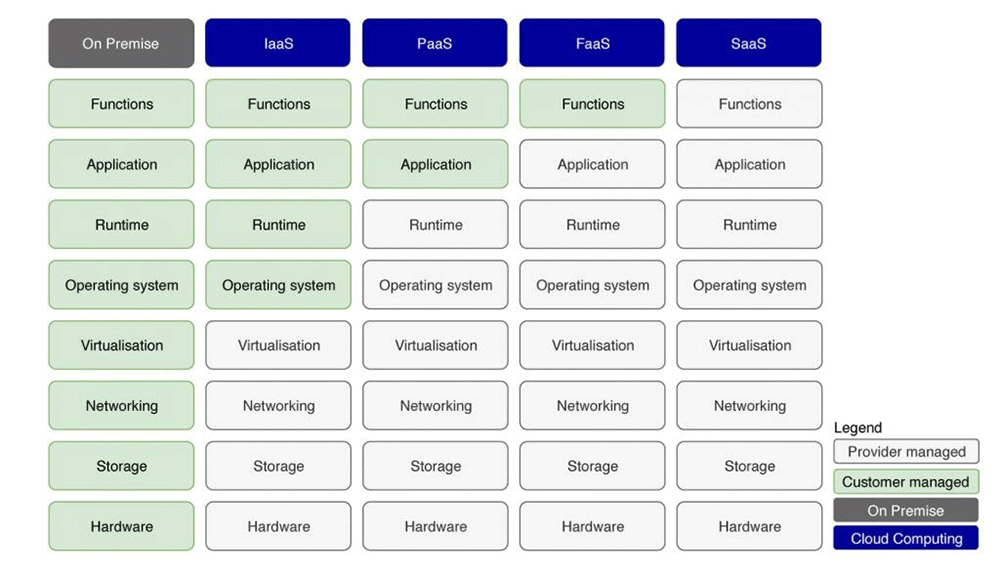

Virtual machines (VMs) are part of Infrastructure as a Service (IaaS). It’s a model which gives you the most control over your resources, but with control also comes responsibility for maintaining and securing the OS running on your VM. VMs also require additional billable resources such as persistent storage and networking.

The basic building blocks of Platform as a Service (PaaS) are containers. Your code along with all of its dependencies is packaged into a container which can be easily run on different operating systems and environments. However, the real power of containers manifests itself in the form of orchestration, which allows you to automatically deploy and scale your application. You can learn more about Kubernetes, the most prominent orchestration software for Docker containers, in ENA’S BLOG POST.

Functions as a Service (FaaS) represent the highest level of abstraction in cloud computing where you can still program the application. FaaS are also called serverless functions, not because they don’t run on a server, but because that server layer is completely hidden from the user and managed by the cloud provider. Resources and execution time are typically much more limited here. For example, you can’t have a GPU connected to a Cloud Function on GCP, and one instance can only run for 9 minutes before it times out.

Just briefly, Software as a Service (SaaS) represents user applications which are billed with a pay-as-you-go model. You cannot make changes to such applications. Examples include Office 365, Salesforce, Slack, and many others.

1.1 On PREMISE VS. SERVERLESS – Customers love solutions (customers-love-solutions.com)

So, which to choose? Well, it depends on what your application is. As people move away from big monolithic applications towards a component-based approach, VMs are largely losing popularity to container-based solutions because of the latter’s increased flexibility. Maybe your use case requires a simple enough solution which can be run as a serverless function. Don’t take “simple” too literally here, as we’ve shown you can do some pretty complex computation, such as training neural networks, in serverless functions.

Other costs (Pub/Sub, Storage, SQL)

PUB/SUB PRICING has three components: throughput costs for message publishing and delivery, storage fees when retaining all or acknowledged messages or creating snapshots, and egress costs when crossing a zonal or regional boundary. It is important to mention that storage of unacknowledged messages is free. Google also offers a cheaper PUB/SUB LITE option which can be as much as an order of magnitude less expensive, but offers fewer features and requires more manual configuration.

In our specific use case we haven’t configured storage for acknowledged messages, as that is taken care of by Persistor. Because all of our resources were in the same region, no egress fees were incurred. This means we would have paid only for the throughput (message publishing & delivery). However, the first 10 GiB in a calendar month are free, so basically Pub/Sub didn’t cost us anything!
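As a back-of-the-envelope check on why we stayed inside the free allowance, here is a small Python sketch. It assumes the MNIST training set used in our experiment (60,000 images of 28×28 pixels) is sent at one byte per pixel with no message overhead, and it uses a placeholder price of $40 per TiB beyond the free 10 GiB. Both of those, along with the helper name, are assumptions for illustration rather than figures from our bill.

```python
GIB = 1024 ** 3
TIB = 1024 ** 4


def pubsub_throughput_cost(bytes_per_month, price_per_tib_usd, free_bytes=10 * GIB):
    """Throughput fee in USD for one calendar month (illustrative helper)."""
    # Only the volume beyond the monthly free allowance is billable.
    billable = max(0, bytes_per_month - free_bytes)
    return billable / TIB * price_per_tib_usd


# MNIST training set, assuming one byte per pixel and no per-message overhead.
mnist_bytes = 60_000 * 28 * 28

print(f"Data volume: {mnist_bytes / GIB:.3f} GiB")
print(f"Throughput fee: ${pubsub_throughput_cost(mnist_bytes, price_per_tib_usd=40.0):.2f}")
```

Even if the same data is counted several times over (for example once for publishing and once for delivery), it stays far below 10 GiB.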

Basic CLOUD STORAGE usage is billed according to data storage, network usage, and operations usage, plus other charges if you use additional advanced features. Data storage is priced depending on the continent and is measured in gigabytes per month. Network usage pricing is split into egress (data sent from GCS) and ingress (data sent to GCS). Operations are actions that make changes to, or retrieve information about, buckets and objects in Cloud Storage. They are separated into classes and billed in units of 10,000. As part of the Always Free usage limits, 5 GB/month of storage, 5,000 Class A operations, 50,000 Class B operations, and 1 GB of network egress are free, but only in three United States regions.

CLOUD SQL PRICING depends on whether you’re using a dedicated or shared CPU. Dedicated instances are charged by the number of cores and memory amount. Shared-core instances are charged for every second that the instance is running. It is important to mention that shared-core instances are not covered by the CLOUD SQL SERVICE LEVEL AGREEMENT (SLA) and are not recommended for production environments. All Cloud SQL instances incur storage and networking costs, with ingress to Cloud SQL being free.

Experiment & Cost Comparison

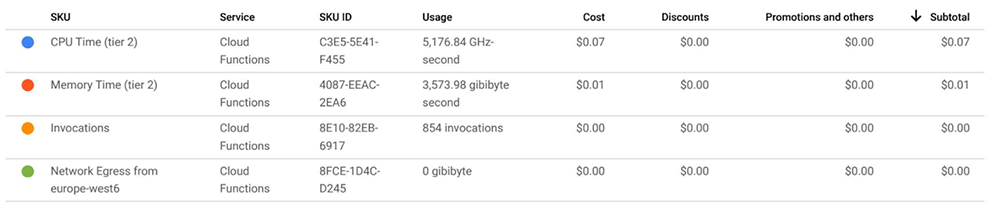

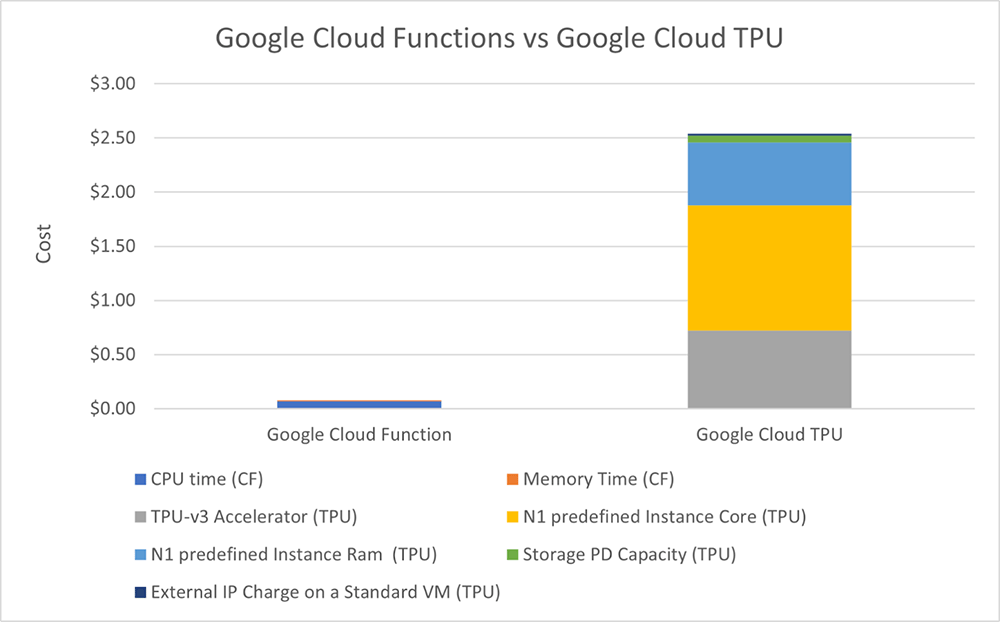

Let’s compare using two totally different cloud solutions – Cloud Functions and Cloud TPU – for solving the same machine learning problem – training a convolutional neural network (CNN) on the MNIST dataset. The training set consists of 60,000 samples of 28×28 monochrome images. When using Cloud Functions, the data was published 6 times, separated into 10 messages with 1,000 samples each. Keep in mind that Persistor is also two Cloud Functions which could also potentially incur costs (hence the high number of invocations). The price for all of this? Eight measly cents ($0.08).
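The batching arithmetic is easy to verify. The sketch below only does the bookkeeping: it splits 60,000 sample indices into messages of 1,000 samples and groups them into rounds of 10 messages. It does not publish anything, the helper name is hypothetical, and reading "published 6 times" as six rounds of ten messages is our interpretation for this illustration.

```python
def split_into_messages(n_samples, samples_per_message):
    """Return one index range per message, covering all samples exactly once."""
    return [
        range(start, min(start + samples_per_message, n_samples))
        for start in range(0, n_samples, samples_per_message)
    ]


messages = split_into_messages(60_000, 1_000)

# Group the messages into publishing rounds of 10 messages each.
rounds = [messages[i:i + 10] for i in range(0, len(messages), 10)]

print(len(messages), len(rounds))  # prints "60 6"
```

Each message then triggers downstream function invocations, which is where the invocation count in the billing report comes from.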

Looking at Cloud TPU, it seems significantly more expensive, and it is, but maybe not as much as this image would first suggest. Cloud TPU incurs costs when it’s in the READY state, so not necessarily only when performing computations. We were following Google’s RUNNING MNIST ON CLOUD TPU tutorial and it took us some time to set everything up correctly. During all of that, Cloud TPU was charged even though it wasn’t doing any neural network training or other kind of computation.

All in all, it takes the TPU less than 5 minutes to train a neural network on the whole MNIST dataset. Even when we look at the price for such a short interval (approx. $0.72), it’s still roughly nine times higher than the $0.08 we paid with Cloud Functions, which is close to an order of magnitude. You should also take into account that Cloud TPU needs an underlying Compute Engine VM to run, which can incur significant costs related to CPU, memory, storage, and networking usage, adding further overhead.

Conclusion

“Choose the right tool for the job” would be a good takeaway from this whole story. But what does right mean in cloud computing? Maybe it’s easier to eliminate what would be wrong in this context. Taking a powerful solution like Cloud TPU to solve a relatively simple problem like MNIST would be a big no-no. Just setting up and testing this solution in half a day cost us almost 40 bucks! It would take you a significant amount of time and effort to set up the TPU and its VM in a scalable way which doesn’t incur costs when not in use. All of that scalability and flexibility is already built into Cloud Functions, so why not leverage that to achieve our goals at the lowest possible cost?

Along with how much power we want from our cloud resources, we also have to decide how much control we want to have over them. Solutions which give users more control, like virtual machines, may provide better configurability, but creating and maintaining an ideal configuration takes time, skill, and labour, all of which cost money. Often it’s better to use preconfigured patterns, but perhaps in unexpected ways, just like we showed you 😉