Mia Tadic, Darija Strmecki

DATA ENGINEERS

As the second part of our blog series about HPC, we test the performance of a Slurm cluster deployed on the AWS cloud platform and compare the setup and the results with the GCP deployment from the previous post in the series.

In this blog post, we describe the cluster setup on AWS, show the results of training the same machine learning model as in the previous blog post, and describe the cluster cleanup. For the environment setup and a detailed description of the model, please check out the previous blog post. In short, the model classifies images of handwritten digits 0-9.

Setting Up a Slurm Cluster on AWS

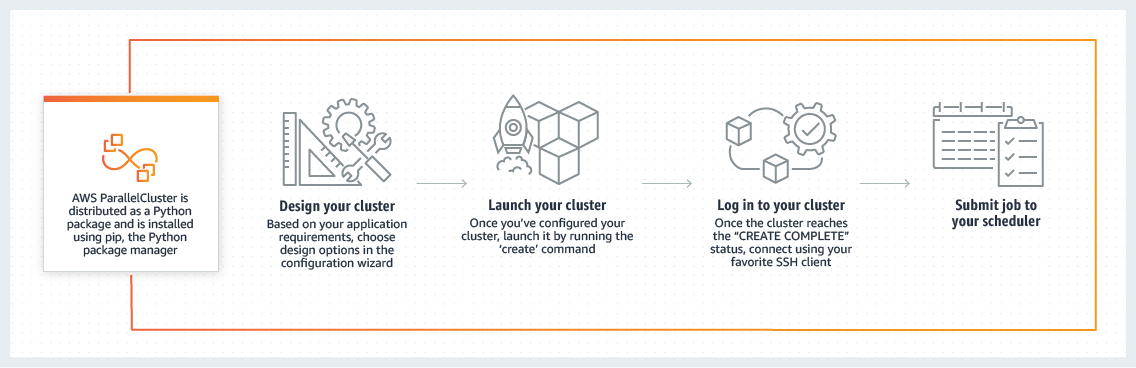

We will use AWS ParallelCluster to set up an HPC cluster. We will do so by using the AWS CLI from a local Windows machine, i.e. from the Command Prompt. As AWS says, AWS ParallelCluster is an AWS-supported open-source cluster management tool that makes it easy for you to deploy and manage High Performance Computing (HPC) clusters on AWS. It provides all the resources needed for your HPC applications and supports a variety of job schedulers such as AWS Batch, SGE, Torque, and Slurm.

AWS provides a largely automated setup: all you have to do is install ParallelCluster locally as a Python package and then start the interactive cluster configuration, which asks you a series of questions and sets it all up for you. So, let’s start:

1. Create a virtual environment and activate it:

> virtualenv hpc-venv

> hpc-venv\Scripts\activate.bat

2. Install AWS CLI:

(hpc-venv) > pip install --upgrade awscli

(hpc-venv) > aws configure

This step connects your local machine to your AWS account. For that purpose, you will have to set your access key, secret access key, region, and output format.

The access key and secret access key can be created in AWS console → Services → Security, Identity, & Compliance → IAM → Access management → Users → choose your user → Security credentials → Create access key. Save the secret key locally because you will not be able to retrieve it again.
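As far as we know, `aws configure` stores these four values in two INI-style files named `credentials` and `config` inside the `.aws` folder of your user directory. The minimal Python sketch below checks that a profile is complete. The helper name `missing_settings` and the sample values are hypothetical, and the sketch parses text passed to it rather than reading your real files.

```python
import configparser

# Keys that `aws configure` writes for a profile (here: the default profile).
CREDENTIAL_KEYS = ("aws_access_key_id", "aws_secret_access_key")
CONFIG_KEYS = ("region", "output")


def missing_settings(credentials_text, config_text, profile="default"):
    """Return the names of settings that are absent or empty for a profile."""
    missing = []
    pairs = ((credentials_text, CREDENTIAL_KEYS), (config_text, CONFIG_KEYS))
    for text, keys in pairs:
        # Interpolation is disabled so that '%' in a value is read literally.
        parser = configparser.ConfigParser(interpolation=None)
        parser.read_string(text)
        for key in keys:
            if not parser.get(profile, key, fallback="").strip():
                missing.append(key)
    return missing


# Placeholder values for illustration only, not real credentials.
SAMPLE_CREDENTIALS = """
[default]
aws_access_key_id = EXAMPLE-ACCESS-KEY-ID
aws_secret_access_key = EXAMPLE-SECRET-ACCESS-KEY
"""

SAMPLE_CONFIG = """
[default]
region = eu-central-1
output = json
"""

if __name__ == "__main__":
    print(missing_settings(SAMPLE_CREDENTIALS, SAMPLE_CONFIG))
```

An empty result means all four values are present for the profile.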

3. Install ParallelCluster:

(hpc-venv) > pip install aws-parallelcluster

4. Start configuring the cluster to suit your needs with pcluster configure. It outputs the mentioned series of questions with possible options, and you just have to choose from them. It even offers different HPC job managers/schedulers, which will then be installed and prepared for you without any effort on your part.

Here is some of the information that we filled in:

- AWS Region ID: eu-central-1

- Scheduler: slurm

- Operating System: centos7

- Minimum cluster size (instances): 0

- Maximum cluster size (instances): 10

- Master instance type: (we tested both m4.large and c4.2xlarge)

- Compute instance type: (we tested both m4.large and c4.2xlarge)

- Network Configuration: Master in a public subnet and compute fleet in a private subnet

It will also ask you for an EC2 key pair, since it is needed to SSH into your master instance and run Slurm jobs. It can be created in AWS console → Services → Compute → EC2 → Network & Security → Key Pairs → Create key pair. Save the key locally because you will not be able to download it again.

Pay attention to the minimum cluster size. It determines how many compute instances will be alive all the time. This setting matters especially if you will not keep the cluster alive for a longer period. For example, if you terminate your master and compute instances overnight and still see them running in the morning, a wrongly adjusted minimum cluster size parameter is the reason. ParallelCluster will constantly be allocating them and then terminating them (around every 13 minutes). We kept our cluster alive only for a few days, so we set the minimum cluster size to 0. We also set the maintain_initial_size parameter to false in the config file.

Finally, the pcluster configure command creates a config file in C:\Users\YOUR-USER-NAME\.parallelcluster\, and you can edit it further if needed before the next step.
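To give a feeling for what that config file may contain, here is a rough Python sketch that renders an INI file with the answers listed above. The section and key names are written as we recall them from the ParallelCluster 2.x configuration format and should be treated as assumptions. The function name `build_cluster_config`, the key pair name, and the VPC and subnet IDs are hypothetical placeholders. Always compare against the file that pcluster configure actually generated for you.

```python
import configparser
import io


def build_cluster_config(instance_type, key_name, initial_nodes=0, max_nodes=10):
    """Render a ParallelCluster 2.x style config as text (IDs are placeholders)."""
    config = configparser.ConfigParser()
    config["aws"] = {"aws_region_name": "eu-central-1"}
    config["global"] = {"cluster_template": "default"}
    config["cluster default"] = {
        "key_name": key_name,
        "base_os": "centos7",
        "scheduler": "slurm",
        "master_instance_type": instance_type,
        "compute_instance_type": instance_type,
        # Assumption: with maintain_initial_size = false the compute fleet
        # is allowed to shrink below its initial size when it is idle.
        "initial_queue_size": str(initial_nodes),
        "max_queue_size": str(max_nodes),
        "maintain_initial_size": "false",
        "vpc_settings": "default",
    }
    config["vpc default"] = {
        "vpc_id": "vpc-PLACEHOLDER",
        "master_subnet_id": "subnet-PLACEHOLDER-PUBLIC",
        "compute_subnet_id": "subnet-PLACEHOLDER-PRIVATE",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(build_cluster_config("c4.2xlarge", "YOUR-EC2-KEY-PAIR-NAME"))
```

Generating the text this way makes it easy to produce one config per instance type, which is how one could switch between the m4.large and c4.2xlarge test runs.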

5. Create the cluster with ParallelCluster:

(hpc-venv) > pcluster create -c C:\Users\YOUR-USER-NAME\.parallelcluster\config slurmCPU-parallelcluster

6. SSH into the master instance from the local CMD:

(hpc-venv) > ssh -i (EC2-key) centos@MASTER-INSTANCE-PUBLIC-IP

Note: You cannot SSH to compute nodes from your local machine if you have put them in a private subnet during pcluster configure. You can SSH to compute nodes only from your master instance.

The steps above will create the following resources:

- CloudFormation Stacks (for deploying the whole cluster with its dependent AWS resources)

- EC2 Instances (master and compute instances)

- EC2 Auto Scaling Group (for quick scaling of the nodes)

- EC2 Launch Template (for specifying EC2 instance configuration, e.g. AMI, instance type)

- EBS Volume (attached storage to EC2 instances)

- DynamoDB table (for storing some metadata)

Tip: you can find the cluster’s events/logs in: EC2 → Auto Scaling Groups → Activity → Activity history.

Training Distributed ML Model with Slurm

We are training our model on CPUs. As mentioned, the model and the environment are described in detail in the previous blog post. In short, it is a Keras machine learning model that classifies 60,000 MNIST images of handwritten digits. It is a simple convolutional neural network. We are using TensorFlow’s MultiWorkerMirroredStrategy for synchronous distribution of training with data parallelism across multiple machines.
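MultiWorkerMirroredStrategy learns about the other machines through the TF_CONFIG environment variable, a JSON document that lists all workers and says which one the current process is. The sketch below shows one way such a value could be built for a Slurm job. It is not necessarily the exact approach of our training script: the helper name `build_tf_config`, the port number, and the host names are hypothetical, and we assume the list of host names has already been expanded from the Slurm node list.

```python
import json


def build_tf_config(hostnames, node_index, port=12345):
    """Build the TF_CONFIG value for one worker in a multi-worker job."""
    if not 0 <= node_index < len(hostnames):
        raise ValueError("node_index must point at one of the hostnames")
    return json.dumps({
        # Every worker must see the same list, in the same order.
        "cluster": {"worker": [f"{host}:{port}" for host in hostnames]},
        # The index identifies this process within that list.
        "task": {"type": "worker", "index": node_index},
    })


if __name__ == "__main__":
    # Hypothetical host names; on a real job they would come from Slurm.
    print(build_tf_config(["compute-node-1", "compute-node-2"], node_index=0))
```

Each node would place its own value into the TF_CONFIG environment variable before the strategy object is created, so that all workers agree on the cluster layout.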

Two machine types that we tested are:

- m4.large (general purpose) → because it is the most similar to GCP’s n1-standard-2, which we used for testing on GCP in the previous blog post

- c4.2xlarge (compute optimized) → recommended for HPC

| Instance | vCPU | Mem (GiB) | Dedicated EBS Bandwidth (Mbps) | Network Performance | Linux/UNIX Usage | Processor |
| --- | --- | --- | --- | --- | --- | --- |
| m4.large | 2 | 8 | 450 | Moderate | $0.12 per Hour | 2.3 GHz Intel Xeon® E5-2686 v4 (Broadwell) |
| c4.2xlarge | 8 | 15 | 1,000 | High | $0.454 per Hour | High frequency (2.9 GHz) Intel Xeon E5-2666 v3 (Haswell) |

Results: Comparison of Training Distributed Model on Different Numbers of Nodes

- m4.large

| Number of nodes | Code execution time (seconds) | Loss | Accuracy |
| --- | --- | --- | --- |
| 1 | 381.350 | 0.0035 | 0.9993 |
| 2 | 750.046 | 0.0156 | 0.9958 |
| 3 | 666.984 | 0.0282 | 0.9914 |
| 4 | 563.375 | 0.0369 | 0.9890 |
| 6 | 418.380 | 0.0541 | 0.9833 |
| 8 | 335.298 | 0.0599 | 0.9823 |
| 10 | 272.900 | 0.0712 | 0.9789 |

- c4.2xlarge

| Number of nodes | Code execution time (seconds) | Loss | Accuracy |
| --- | --- | --- | --- |
| 1 | 112.338 | 0.0029 | 0.9994 |
| 2 | 158.687 | 0.0139 | 0.9963 |
| 3 | 140.527 | 0.0291 | 0.9911 |
| 4 | 110.211 | 0.0383 | 0.9883 |
| 6 | 84.259 | 0.0545 | 0.9838 |
| 8 | 65.279 | 0.0761 | 0.9774 |
| 10 | 55.647 | 0.0710 | 0.9781 |

In the tables, we show three factors that we use for comparison: time, loss, and accuracy. Loss and accuracy are the model’s metrics. Loss is a number indicating the model’s error in prediction. Accuracy is the ratio of correctly classified images to the whole set of images. Loss should be as close to zero as possible, and accuracy should be as close to one as possible.
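To make the two metrics concrete, here is a small Python sketch on toy data. Accuracy is computed exactly as defined above. For loss we assume categorical cross-entropy, which is a common choice for digit classification, and it may differ from the loss function configured in our model. The toy labels and probabilities use three classes instead of ten only to keep the example short.

```python
import math


def accuracy(true_labels, predicted_labels):
    """Share of samples whose predicted label equals the true label."""
    matches = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return matches / len(true_labels)


def cross_entropy_loss(true_labels, predicted_probs):
    """Mean negative log-probability that the model gave to the true class."""
    total = sum(math.log(probs[label])
                for label, probs in zip(true_labels, predicted_probs))
    return -total / len(true_labels)


if __name__ == "__main__":
    labels = [0, 1, 2, 1]
    probs = [
        [0.9, 0.05, 0.05],  # confident and correct
        [0.1, 0.8, 0.1],    # correct
        [0.2, 0.2, 0.6],    # correct
        [0.5, 0.4, 0.1],    # wrong: class 0 gets the highest probability
    ]
    # The predicted label is the class with the highest probability.
    predicted = [row.index(max(row)) for row in probs]
    print("accuracy:", accuracy(labels, predicted))  # 3 of 4 correct -> 0.75
    print("loss:", cross_entropy_loss(labels, probs))
```

A confident wrong prediction raises the loss much more than it lowers the accuracy, which is why the two columns in the tables do not move in lockstep.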

When comparing GCP’s and AWS’ results on similar machines (n1-standard-2 on GCP in the previous blog post, and m4.large on AWS), we notice that GCP has significantly better time results. AWS starts off better with 1 node, but for every other node count the time is almost three times better on GCP. The peak in duration when training on two nodes appears on AWS just as it did on GCP (only bigger). Regarding the accuracy and the loss, those are slightly better on AWS’ m4.large.

When comparing AWS’ m4.large and c4.2xlarge, c4.2xlarge is clearly the better solution for high performance computing in terms of execution time: depending on the number of nodes, it is roughly 3.4 to 5.1 times faster, while loss and accuracy are almost the same.
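The comparison can be quantified directly from the measured times. The following Python sketch computes the speedup relative to a single node, the parallel efficiency (speedup divided by the number of nodes), and how much faster c4.2xlarge is than m4.large for each node count. The function names are our own choice for this illustration.

```python
# Execution times in seconds, copied from the two result tables.
M4_LARGE = {1: 381.350, 2: 750.046, 3: 666.984, 4: 563.375,
            6: 418.380, 8: 335.298, 10: 272.900}
C4_2XLARGE = {1: 112.338, 2: 158.687, 3: 140.527, 4: 110.211,
              6: 84.259, 8: 65.279, 10: 55.647}


def speedup(times):
    """Single-node time divided by the time on n nodes."""
    base = times[1]
    return {nodes: base / t for nodes, t in times.items()}


def efficiency(times):
    """Speedup per node; 1.0 would mean perfect linear scaling."""
    return {nodes: s / nodes for nodes, s in speedup(times).items()}


def instance_ratio(slow, fast):
    """How many times faster the second instance type is, per node count."""
    return {nodes: slow[nodes] / fast[nodes] for nodes in slow}


if __name__ == "__main__":
    ratios = instance_ratio(M4_LARGE, C4_2XLARGE)
    for nodes in M4_LARGE:
        print(f"{nodes:>2} nodes | "
              f"m4 speedup {speedup(M4_LARGE)[nodes]:.2f} | "
              f"c4 speedup {speedup(C4_2XLARGE)[nodes]:.2f} | "
              f"c4 efficiency {efficiency(C4_2XLARGE)[nodes]:.2f} | "
              f"c4 vs m4 {ratios[nodes]:.2f}x")
```

On these numbers, ten c4.2xlarge nodes finish about twice as fast as one, while ten m4.large nodes are only about 1.4 times faster than one, so neither instance type scales close to linearly for this small model.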

Cluster Cleanup

Delete the cluster, together with the dependent resources created along with it, from the virtual environment on your local machine (created at the beginning of this blog post):

(hpc-venv) > pcluster delete YOUR-CLUSTER-NAME

If you do not remember the cluster name, you can find it by:

(hpc-venv) > pcluster list

If you chose to create a VPC during pcluster configure, you should additionally delete the created VPC and the CloudFormation Stack that belongs to it.

Click here for part 1 in the series: High Performance Computing with Slurm on GCP