Andrija Dukic

DATA ENGINEER

This post attempts to demonstrate the power of utilizing concurrency, specifically the power of Go’s concurrency model, which is, in my opinion, one of the main reasons we would want to use Go.

What is concurrency?

First off, we need to talk about what are we actually talking about when we talk about concurrency.

It can be described as the ability to run multiple tasks (or computations) “at the same time”, where we don’t have control on the order of execution of these tasks. Obviously, we want the end result to be the same as if run one by one.

There’s a common misconception that concurrency and paralellism are synonyms, but that’s not actually true. I’ve selected two quotes which attempt to explain the difference:

“Concurrency is about dealing with lots of things at once but parallelism is about doing lots of things at once”

“Parallelism is about execution, concurrency provides a way to structure a solution to solve a problem that may (but not necessarily) be parallelizable”

This basically means that, in a way, concurrency is a design choice, and parallelism is something that may be a byproduct of that design.

So why do we need concurrency?

A lot of code in microservices consists of making some number of network calls and waiting on the response, which might also branch out into more network calls.

It’s important to notice that in these kind of programs, very little computation is actually being done;

the CPU spends most of of the time asleep, waiting on the network to return a response, so, why shouldn’t it do some valuable work while it waits? After all, cloud providers are gonna charge us just the same.

These types of programs are often called “embarassingly parallel”, and are obviously the focus when we talk about concurrent design.

Visualizing concurrency

Since concurrency can be hard to deal with, it’s important to have a good, simple visualization of it.

That’s why I’ve chosen a few animations which were extracted from actual Go programs using a really cool library. The library is called GOTRACE (divan: Concurrency tracer and visualizer for Go (Golang) programming language), while the animations are taken from an article made by the creator of the library, titled “VISUALIZING CONCURRENCY IN GO”.

In these visualizations, threads are shown as a straight line running down through time. Interactions between the threads are shown as red arrows, going from the sender to the receiver.

Example #1

In the first example, things are pretty straightforward; a thread sends some data to another one and the program terminates.

Example #2

This example is basically the first example on steroids; each thread sends the data it received from the previous one to the next one, forming a loop.

Example #3

This one is more complex, the main thread starts five “worker pools” which then delegate some task to their own workers, resulting in 15 workers in total processing some data concurrently.

Example #4

The last one is a really cool program; it is a concurrent implementation of a prime sieve. The source code for this example is incredibly elegant and can be found at GO PLAYGROUND – The Go Programming Language .

As you can see, all the values which get passed around are prime numbers, and each thread acts as a filter of sorts.

Go’s Concurrency Model

Now we can talk about how Go handles concurrency.

First thing to note is that Go doesn’t allow you to create real operating system threads. This is because real threads are expensive to make, and constantly switching between them has significant overhead. Instead, Go offers an abstraction called goroutines. This abstraction allows us to pretend we are using real threads, but without the overhead and the scaling problems.

For example, you never want more active operating system threads than there are CPU cores, while it’s perfectly reasonable to have 100s of thousand of active goroutines. Goroutines are Go’s implementation of green threads, also called M:N scheduling.

That basically means that N goroutines are scheduled onto M operating system threads. As i said, N is usually bigger than M, and M is usually set to the number of CPU cores (the default value in Go), because it allows for maximum paralellization. One very important thing to realize, which is the whole basis of this concept, is that a sleeping (blocked) goroutine doesn’t waste CPU time.

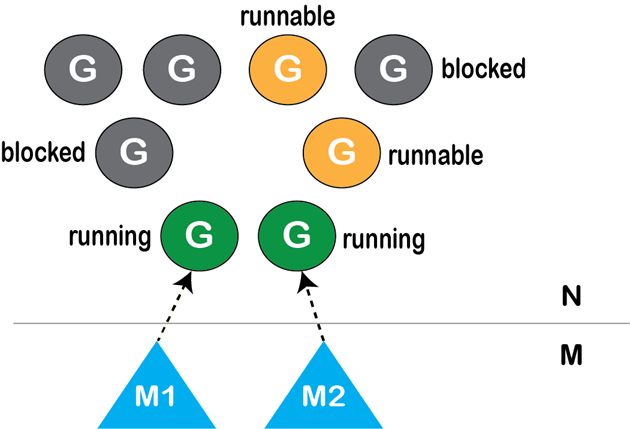

Here we can see a very simplified illustration of what the go scheduler does for us.

The two triangles are operating system threads which pick goroutines to run. The goroutines which are runnable, as well as the currently running goroutines, are candidates for execution, while the blocked ones aren’t.

Disclaimer

The Go runtime is really efficient when it comes to managing goroutines, and even though green threads are an amazing idea, that doesn’t mean directly handling operating system threads is obsolete; systems programming and software that operates on a very low level, and/or communicates with C code, most likely doesn’t want green threads, as they come with additional overhead and takes control away from the programmer. When looking at typical Go use cases however, this tradeoff is worth it.

Channels

Channels are a built in data structure in Go, which is the preferred way of sharing data between goroutines. You can send values into channels from one goroutine and receive those values into another goroutine. However, if the channel is at capacity, sends and receives block until both the sender and receiver are ready.

Here we can see a small code snippet which demonstrates goroutines and channels:

func main() {

messages := make(chan string)

go func() {

messages <- "ping"

}()

msg :=

We first create a messages channel. Then, using the “go” keyword we spawn a new goroutine which sends the message “ping” to the channel, using the special channel syntax. The main goroutine waits for the messages channel to contain a message, and then takes it off of it.

Channels drastically reduce the chance for most common multithreading bugs, because not a lot of state is shared between goroutines. This makes concurrent code a lot safer, because channels are guaranteed to be synchronized, and that is really the only way you can be sure that synchronization is done correctly; by not leaving it up to anyone to remember to synchronize.

That is why the moto of Go’s concurrency is this quote you might have seen before: “don’t communicate by sharing memory; share memory by communicating”.

Also, channels and goroutines allow for some common idioms and patterns, further decreasing the chance for bugs, while keeping code readability and maintainability at a very high level.

Example

To better illustrate the point about the power of concurrency in general, especially in Go, I thought of a simple, but relevant example.

The problem

Lets imagine we have a simple ETL process;

- extract some number of messages from some source

- compute hashes from those messages

- send them to some remote destination

However, the source and destination servers are pretty slow; it takes up to a second for them to return a single response.

The goal is to mitigate the effect this slowness has on our system.

Possible approaches

There’s two approaches we can have; the first one, which I hope is clearly not a good idea, is to implement the process through a loop, retrieving the data, hashing it and sending it one by one.

The other approach is to utilize concurrent design.

Approach #1

The code of the first approach is really straightforward, we call the get function, which retrieves some data, then we hash that data and send the hash to the destination, while also keeping track of any error which might occur.

func DoSync(n int, source, destination string) error {

var errors []error

for i := 0; i < n; i++ {

// get the raw data

body, err := get(source)

if err != nil {

errors = append(errors, err)

continue

}

// hash the data

hashResult := hash(body)

// send the data

if err = post(hashResult, destination); err != nil {

errors = append(errors, err)

}

}

// return only the first error, so it's easier to read

if len(errors) > 0 {

return errors[0]

}

return nil

}}

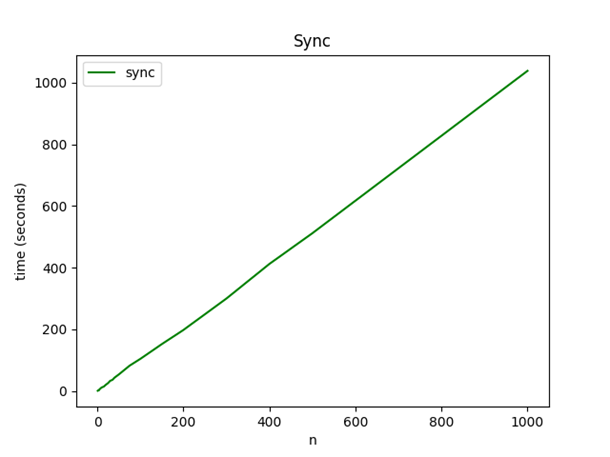

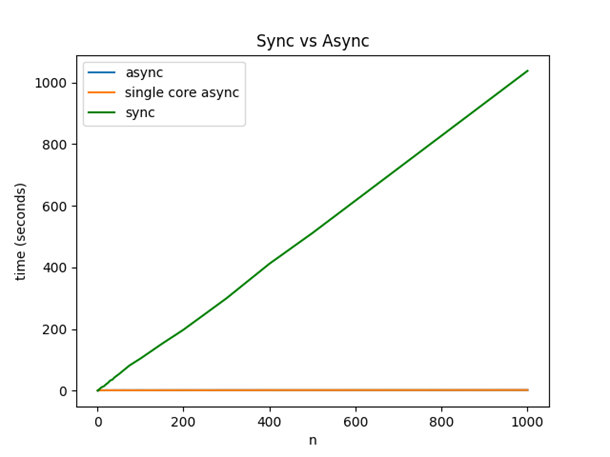

I ran this code a bunch of times with different number of messages/requests, while keeping track of the execution time, which we can see on the following graph:

As expected, execution time increases linearly with the number of request (almost perfectly).

We can imagine how poorly this would scale with any real world application and how poorly the hardware is being utilized.

Approach #2

The alternative approach uses concurrency, and what is called a pipeline pattern. I’ve extracted the channel creation logic in other functions since the actual code is not important right now.

func DoAsync(n int, source, destination string) error {

// returns a data channel, and an error channel

inputs, pullErrC := collect(n, source)

// takes the input channel, returns a channel of hashes

hashes := computeHashes(inputs)

// takes the hash channel, sends the hashes to their destination, returns an error channel

pushErrC := push(hashes, destination)

// combines the two error channels into one (fan-in)

mergedErrC := merge(pullErrC, pushErrC)

// collects all errors, returns a value through a channel, once all errors are collected and the channel is closed

errCollector := collectErrors(mergedErrC)

if err := <-errCollector; err != nil {

return errors.Wrap(err, "fetching process completed with errors")

}

return nil

}

First, we call collect, which doesn’t block and immediately returns a data channel, which will eventually be filled with messages, and an error channel which will contain all the errors that might occur.

Each message will be fetched in it’s own goroutine, not waiting for others to send their request.

Then, we pass the data channel to the next function, which computes the hashes and returns a channel which will eventually contain them. The final stage takes the hash channel and sends the hashes to the destination. Again, each hash will be sent in its own goroutine.

At this stage, only the error channel is returned for any error that might happen during the sending process.

At the end, the two error channels are then merged into a single channel. These errors are then we collected through a “collector” channel. The main goroutine then waits for the channel to return something, nil in case everything worked as expected.

One thing to note is that most of the code here is non-blocking and new “stages” of the pipeline can be added, without ever having to look at the rest of the code.

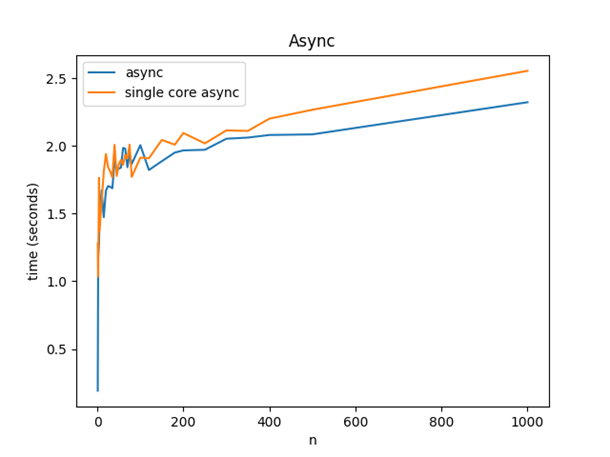

Like the first implementation, this code was ran with a different number of messages, so we can compare them.

As we can see, the execution time of processing 10 messages is about the same as the cost of processing 100s of messages. I’ve also ran this code while forcing the Go runtime to use only one operating system thread, to demonstrate that the number of cores is not important for this to work.

Keep in mind that the previous implementation needed almost 17 minutes for 1000 messages, while this one needs 2 seconds.

This might not be obvious from this graph, so I made another one to put them at the same scale.

As we can see, the async, concurrent implementation takes almost no time at all in comparison with the synchronous, sequential one.

Things that weren’t mentioned

There’s a couple things that weren’t mentioned here, but are worth pointing out.

Real world applications are probably more complex than the dummy example shown here, so here are some common problems a real application might need to deal with.

Sometimes we want to terminate the entire program if one goroutine encounters a non-recoverable error.

The Go standard library offers an elegant solution through the context package, which can be cleanly integrated with the standard pipeline pattern shown today.

Sometimes we want to limit how many goroutines can be active, and we can easily do that through simple mechanisms of bounded concurrency. It’s common that external dependencies can’t handle the load we can generated in our concurrent programs, and that can also be elegantly fixed through throttling mechanisms, which have great library support.

Sometimes channels aren’t enough and we need to use lower level primitives, which is fine and are also built into the language, but you need to be careful with them because readability and safety can suffer from that.

Goroutines aren’t magic; if you have to a lot of CPU heavy computations, there can never be a faster way than running the same number of threads as CPU cores.

Conclusion

In conclusion, we can see that the entire language is built around concurrency. We learned that goroutines are lightweight threads which abstract a lot of complexity, and that not using them for “embarrassingly parallel” tasks is not an option.

We also learned that Go allows us to write clean, idiomatic code which is resistant to multithreading bugs (if we stick to common concurrency patterns), which, combined with static typing and simple syntax, makes Go the perfect candidate for highly concurrent network-based software.