Marko Jeftimija

SENIOR DATA ENGINEER

Introduction

Performance and scalability of data warehousing are key concerns for businesses working with high volumes of streaming and historical analytics. Firebolt is a cloud-native solution that offers reliable, scalable performance at high speeds for all types of analytics operations.

One of the key benefits of Firebolt is its lightning-fast speed. With Firebolt, data can be loaded, processed, and analyzed within a matter of seconds, even for the largest data sets. This is achieved through a combination of advanced data structures and algorithms optimized for high performance and low latency.

In this blog post, we’ll explore the features that make Firebolt so effective in tackling today’s big data challenges and try to gauge its advertised strengths against the main competitors in the field.

Another major advantage of Firebolt is its ease of use. Unlike other data warehousing solutions that require extensive technical knowledge, Firebolt has a user-friendly interface that makes it simple for both technical and non-technical users to access and analyze data. This means that organizations can quickly and easily gain insights into their data without having to rely on specialized IT teams.

Firebolt also integrates seamlessly with a wide range of tools and technologies, including data lake solutions, analytics platforms, and business intelligence tools. This means that organizations can easily connect their data to the tools they use every day, making it easier to derive insights and make data-driven decisions.

Setting up a Firebolt database

Setting up a database instance is simple enough and consists of two steps:

- Creating a Firebolt account

- Subscribing to Firebolt on the AWS marketplace

Creating a Firebolt account

Super simple: go to https://app.firebolt.io/login and enter your email and desired password. After receiving the welcome email (which takes about half an hour), you can proceed to the next step.

Subscribe to Firebolt on the AWS marketplace

Find Firebolt on the AWS marketplace and click “Subscribe”.

Create a database

Return to https://app.firebolt.io/login and under Databases click “new database”.

Firebolt vs BigQuery vs Redshift

The best way to evaluate a product is to really try it out, pit it against competitors and see how it performs.

We chose Redshift for the comparison because Firebolt is primarily aimed at the AWS ecosystem, as it is (for now) only available in AWS. Redshift being the AWS resident data warehouse, it seemed like a no-brainer.

On the other hand, we picked BigQuery because it is fast. In theory, it chews everything you throw at it and scales fast and seamlessly. We didn’t expect Firebolt’s engine to compete with BigQuery because Firebolt is limited by the machine you choose for your server, while BigQuery does not have this limitation. The result kind of surprised us. But let’s get into the testing…

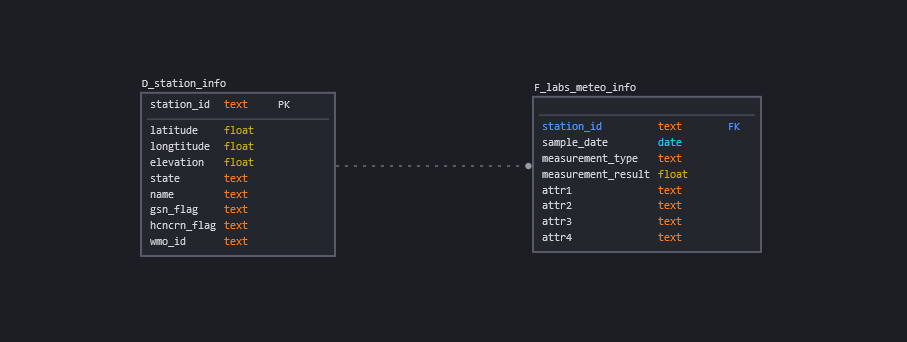

Test dataset

Since we needed tons of data to have a solid grasp on Firebolt’s performance, we decided on the Global Historical Climatology Network’s Daily Data which has key meteorological data for over 100k weather stations. The data set is available directly on AWS’s registry of open data: https://registry.opendata.aws/noaa-ghcn/

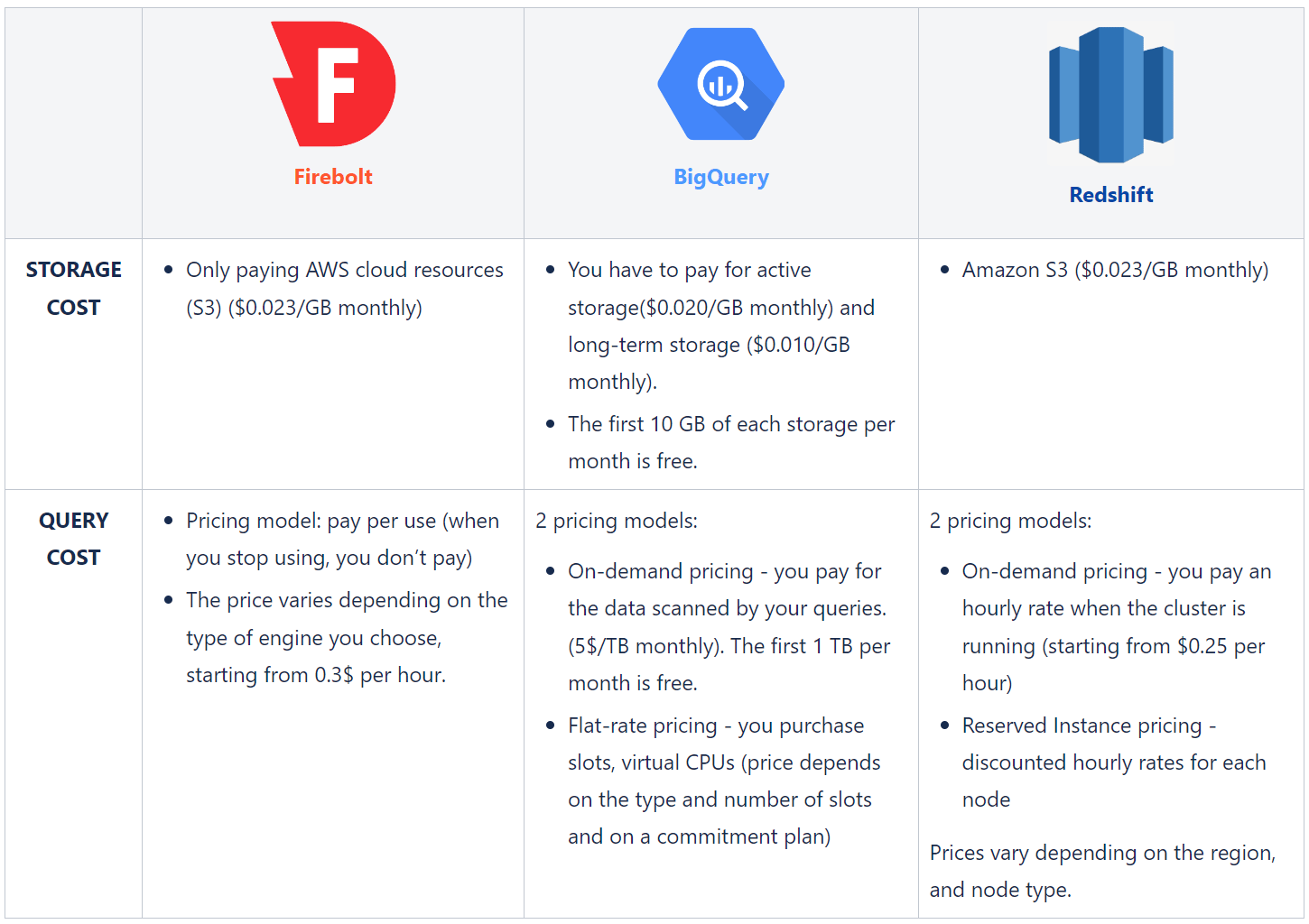

Pricing

Firebolt: https://www.firebolt.io/pricing

BigQuery: https://cloud.google.com/bigquery/pricing

Redshift: https://aws.amazon.com/redshift/pricing/

Performance

To test the difference in performance between Firebolt, BigQuery, and Redshift, 4 different queries were run on the test dataset. Since we wanted to make sure that those queries finished as soon as possible, we created some keys, indexes, partitions and we clustered the tables. More precisely, table f_labs_meteo_info was partitioned by sample_date and clustered by measurement_type in BigQuery. In Firebolt, aggregating and join index were created and the table f_labs_meteo_info was partitioned by sample_date. In Redshift, sort and distribution keys were used.

For Firebolt, general purpose engine was used (size: 2x r5d.4xlarge). In Redshift, node type wasra3.4xlarge.

Query 1 – Inserting data into a table.

Firebolt:

INSERT INTO

f_labs_meteo_info

SELECT

station,

CAST(concat(SUBSTRING(sample_date, 1, 4),'-',SUBSTRING(sample_date, 5, 2),'-',SUBSTRING(sample_date, 6, 2)) AS date),

measurement_type,

CAST(measurement_result AS FLOAT),

attr1,

attr2,

attr3,

attr4

FROM

stg_labs_meteo_info

BigQuery:

INSERT INTO

syntio-labs-research.labs_firebolt.meteo_info

SELECT

station_id,

PARSE_DATE('%Y%m%d', sample_date),

measurement_type,

CAST(measurement_result AS FLOAT64),

attr1,

attr2,

attr3,

attr4

FROM

`syntio-labs-research.labs_firebolt.f_labs_meteo_info`

Redshift:

CREATE TABLE f_meteo_info (

station_id varchar,

sample_date DATE,

measurement_type varchar,

measurement_result FLOAT,

attr1 varchar,

attr2 varchar,

attr3 varchar,

attr4 varchar

)

DISTSTYLE key DISTKEY (station_id)

SORTKEY (sample_date, measurement_result);

COPY d_station_info FROM 's3://noaa-ghcn-pds/csv/'

IAM_ROLE default

FORMAT AS csv

ACCEPTINVCHARS

DATEFORMAT AS 'YYYYMMDD';

Query 2 – Joining 2 tables.

Firebolt:

SELECT

s.latitude,

s.longtitude,

i.measurement_type,

i.measurement_result

FROM

f_labs_meteo_info i

INNER JOIN d_meteo_station_join s ON s.station_id = i.station_id

WHERE

s.elevation > 100

BigQuery:

(Because the result of this query is too big it has to be inserted into a new table)

CREATE TABLE syntio-labs-research.labs_firebolt.destination_table

AS SELECT

s.latitude,

s.longtitude,

i.measurement_type,

i.measurement_result

FROM

syntio-labs-research.labs_firebolt.meteo_info i

INNER JOIN syntio-labs-research.labs_firebolt.station_info s ON s.station_id = i.station_id

WHERE

s.elevation > 100

Redshift:

(Because the result of this query is too big it has to be inserted into a new table)

SELECT

s.latitude,

s.longtitude,

i.measurement_type,

i.measurement_result

INTO table join_result

FROM

"dev"."public"."f_meteo_info" i

INNER JOIN "dev"."public"."d_station_info" s ON s.station_id = i.station_id

WHERE

s.elevation > 100

Query 3 – Finding out by stations: number of days when snow was falling, maximum amount of snowfall in a day and total amount of snow in the winter of 2020.

Firebolt:

SELECT

station_id,

SUM(measurement_result) as total_snow_amount,

COUNT(measurement_result) as num_snow_days,

MAX(measurement_result) as max_snow

FROM

f_labs_meteo_info_partition

WHERE

measurement_type = 'SNOW'

AND sample_date BETWEEN '2020-12-21' AND '2021-03-21'

AND measurement_result > 0

GROUP BY

station_id

BigQuery:

SELECT

station_id,

SUM(measurement_result) as total_snow_amount,

COUNT(measurement_result) as num_snow_days,

MAX(measurement_result) as max_snow

FROM

syntio-labs-research.labs_firebolt.meteo_info

WHERE

measurement_type = 'SNOW'

AND sample_date BETWEEN '2020-12-21' AND '2021-03-21'

AND measurement_result > 0

GROUP BY

station_id

Redshift:

SELECT

station_id,

SUM(measurement_result) as total_snow_amount,

COUNT(measurement_result) as num_snow_days,

MAX(measurement_result) as max_snow

FROM

"dev"."public"."f_meteo_info"

WHERE

measurement_type = 'SNOW'

AND sample_date BETWEEN '2020-12-21' AND '2021-03-21'

AND measurement_result > 0

GROUP BY

station_id

Query 4 – Determining the average temperature of stations in January 2021.

Firebolt:

SELECT

station_id,

measurement_result,

sample_date

FROM

f_labs_meteo_info_partition

WHERE

measurement_type = 'TAVG'

AND EXTRACT(YEAR FROM sample_date) = '2021'

AND EXTRACT(MONTH FROM sample_date) = '01'

BigQuery:

SELECT

station_id,

measurement_result,

sample_date

FROM

syntio-labs-research.labs_firebolt.meteo_info

WHERE

measurement_type = 'TAVG'

AND EXTRACT(YEAR FROM sample_date) = 2021

AND EXTRACT(MONTH FROM sample_date) = 01

Redshift:

SELECT

station_id,

measurement_result,

sample_date

FROM

"dev"."public"."f_meteo_info"

WHERE

measurement_type = 'TAVG'

AND EXTRACT(YEAR FROM sample_date) = 2021

AND EXTRACT(MONTH FROM sample_date) = 01

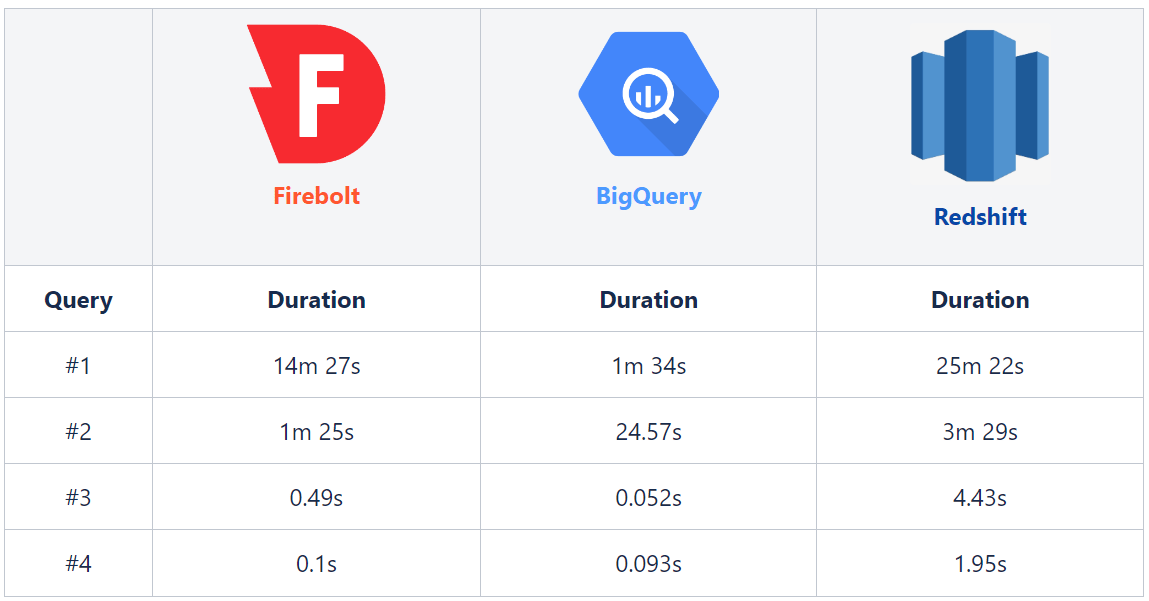

Test results

You can find the result in the following table:

Test summary

The main takeaway from our test is that Firebolt’s speed ramps up as you start to use its features and, as expected, with query optimization.

Because Firebolt’s target audience is primarily the AWS ecosystem as it is (for the time being) only available on AWS, it outperforms Redshift by an order of magnitude in all our tests using an equivalent server for less money.

It even comes super close to BigQuery’s performance, even though, as mentioned, BigQuery does not have a limited resource pool. At least not a hard capped one like the other two warehouses.

Conclusion

In conclusion, the biggest strength of Firebolt is its simplicity and ease of use. It took all of 10 minutes to set it up and start running queries through its nice and intuitive GUI.

Coupling that with impressive speed and affordability, it becomes a strong option for any business that has petabytes of data stored in AWS.

Whether you are looking to process data in real-time, perform complex analytics tasks, or simply gain insights into your data more quickly, Firebolt has you covered. So, if you’re looking for a data warehouse solution that can help you take your data management and analysis to the next level, be sure to check out Firebolt.