Goran Dumic

PRINCIPAL CONSULTANT

For those who develop applications that deal with data fetching and processing, you will know that there are a lot of challenges down that path. Nowadays, applications will sometimes have to deal with large-scale datasets, so every part of the application workflow needs to be well designed and tuned. Customers shouldn’t experience a slowdown in the application before data even reaches our application, so let’s deal with how to efficiently fetch the data first.

APPROACHING THE PROBLEM

For testing purposes, we needed a dataset of a respectable size, even in the early stages of development. There are a lot of publicly available datasets that you could use, for example:

HTTPS://GROUPLENS.ORG/DATASETS/MOVIELENS/

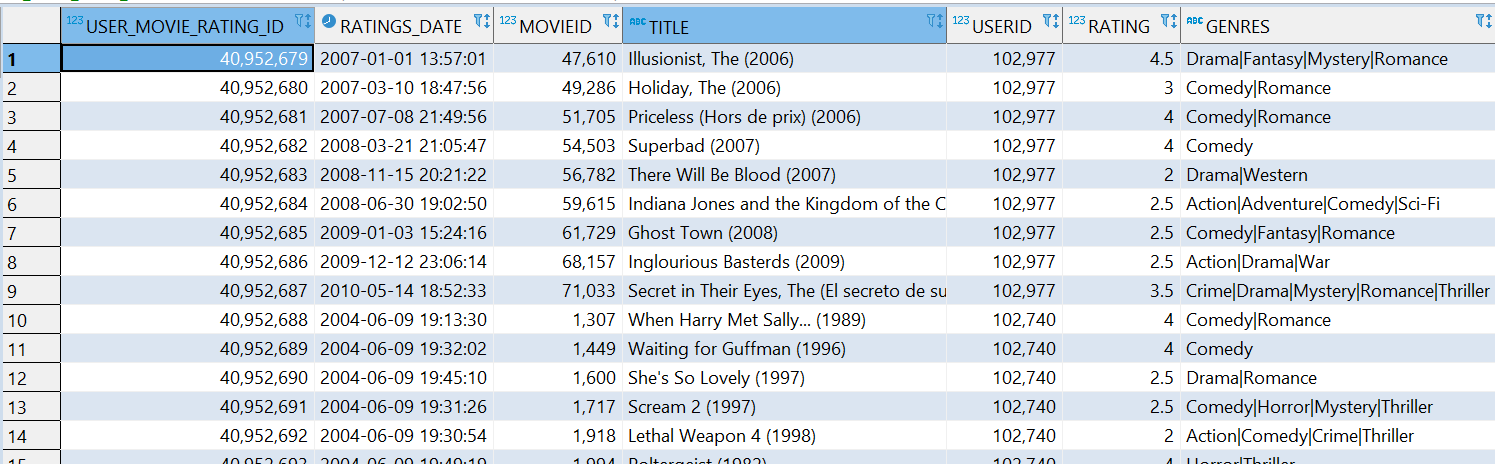

We chose the 25 million record dataset which contains movie ratings from real users. The structure is very simple, here’s the joined column output from several tables:

Figure 1. Publicly available dataset that was used

We wanted to consume that data using Go, mainly because Go is designed to be a fast and efficient programming language. Due to its lightweight architecture, concurrency superiority, and ease of deployment, it is predominantly used in Syntio product development. However, we had limited experience in connecting it to relational database sources and fetching a respectable amount of data from it.

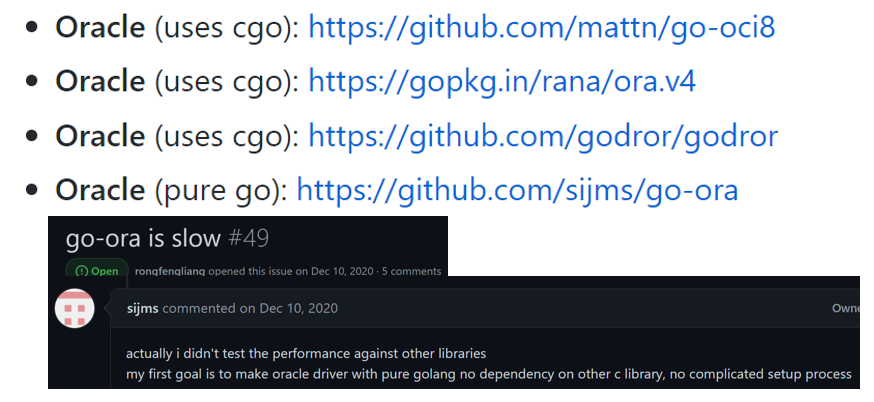

Go is still considered to be relatively new and the list of drivers for a specific database is not that long, as currently for Oracle there is:

Figure 2. Go drivers for Oracle

As we can see, there are two types of drivers listed here. Pure Go and non-pure Go drivers, based on CGO libraries that are basically wrappers around existing drivers and modified to be used for Go. Even the pure go driver is listed as a slow-performant (at the time of writing), on their official GitHub page. The question is though, how well do they perform, really?

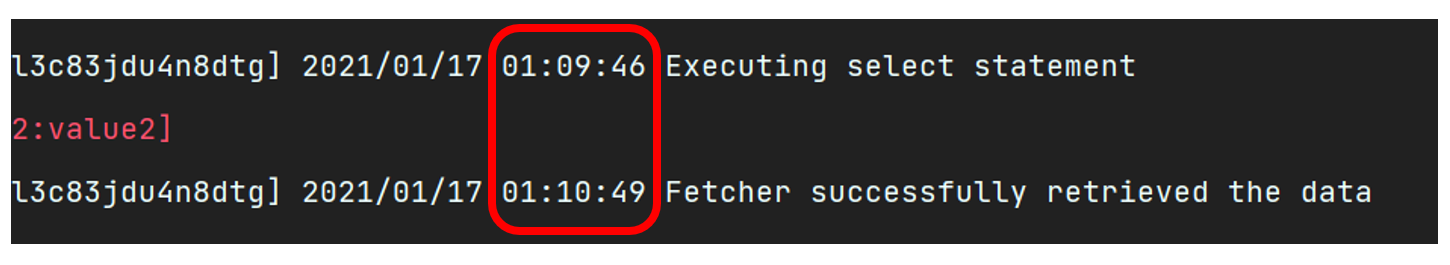

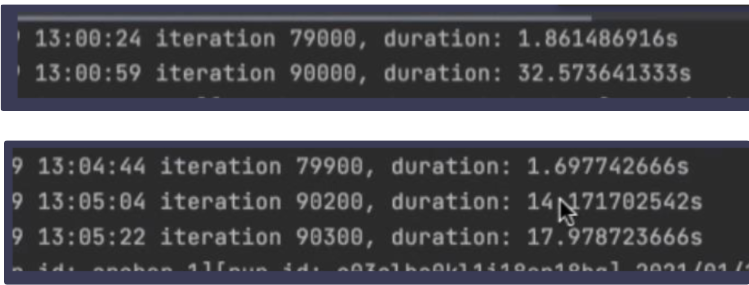

We tested a simple 90k fetch of small column-recordset and it wasn’t a top-notch performance. One minute for a 90k record dataset? Not good.

Figure 3. Fetching 90k recordset

What’s worse, we are dealing with large-scale datasets here. If we wanted to scale this performance up to millions and hundreds of millions of rows, one image from the old days comes to mind…

Figure 4. Blast from the past

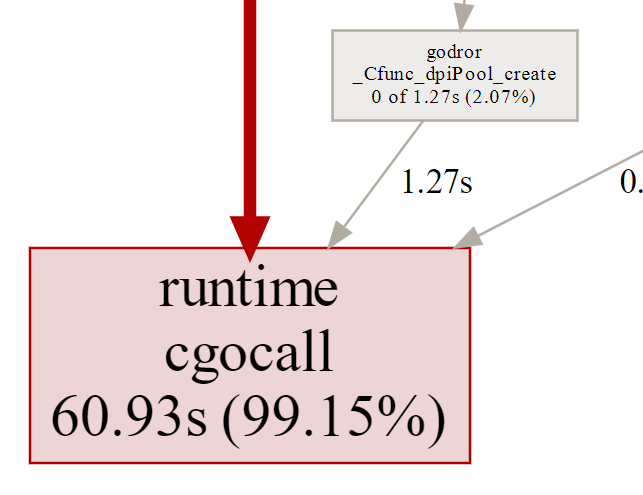

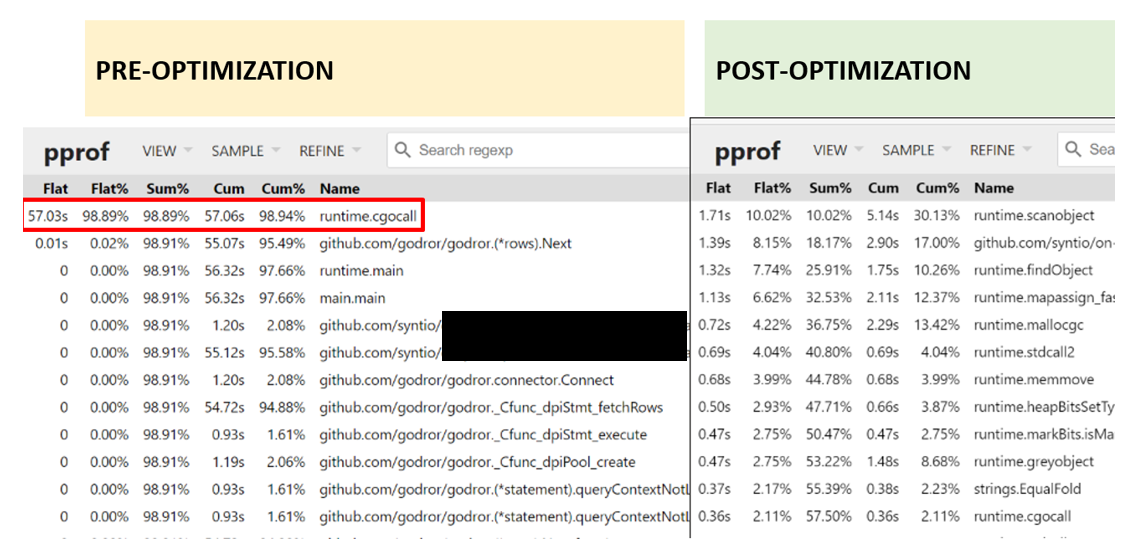

Ok, we need to get to the bottom of this. Let’s use the profiler. The CGO-based drivers seem to have a common issue. When profiling, you’ll get the output that just tells you the CGO blackbox takes about 99% of the execution (CPU) time:

Figure 5. The CGO blackbox in profiling output

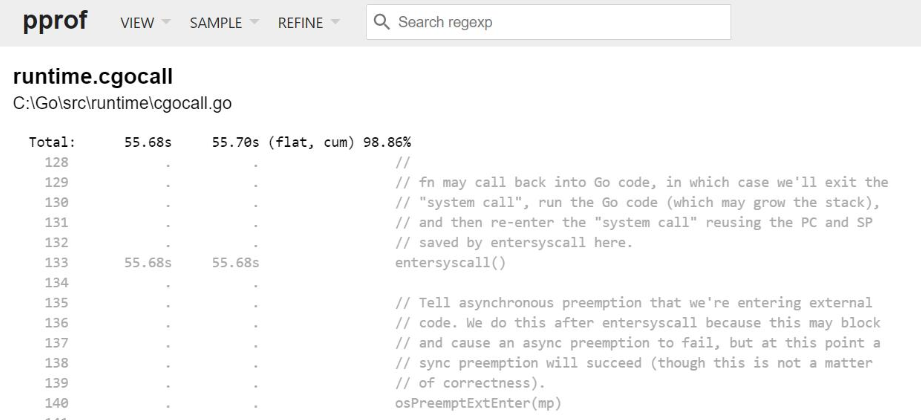

After that, if we dig some more and profile on a statement level, the blackbox effect is even more obvious:

Figure 6. Profiling deeper on statement level

Adding a progress print in interations will reveal that fetching simply stops after a few interations but after a period of time, so some processing is done in the background.

Figure 7. Profiling deeper on statement level

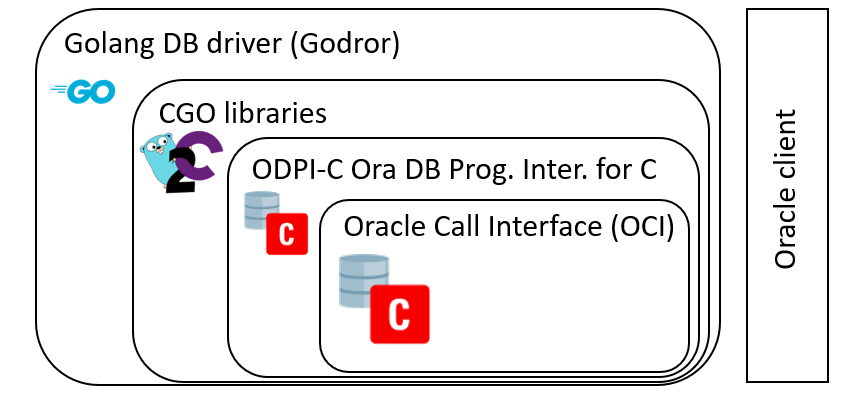

This is no surprise. Drivers based on CGO have a complex architecture, they wrap around an existing library which may also be another wrapper or technology. If we take a look at the Godror Golang driver, we’ll see that it’s a 4-level architecture driver:

Figure 8. Architecture breakdown of Golang CGO drivers

Also, you do need to have the Oracle client installed which takes a toll on your application size or worse – if the proprietary client libraries cannot be shipped with your application, the deployment itself cannot be automated, a manual step would be something like:

Step 3: Download and install the official database client from this website

CHANGE OF MINDSET

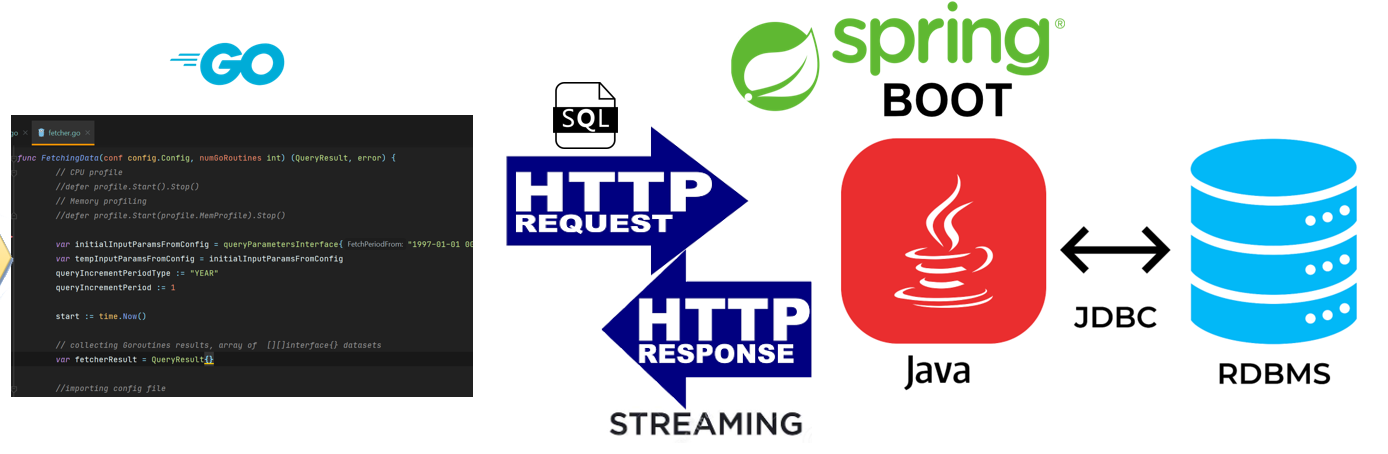

Can we do better using existing drivers in Go? Not unless the Pure Go Oracle drivers improve. Will we ever be able to reach the performance and stability that for example, Java drivers have? Speaking of which, we then wondered how much better JDBC performs. JDBC has been on the market for over 20 years now, the JDBC API type IV is an industry-standard, all dependencies are packed into one .jar file and in many top-level data-fetching pieces of software the drivers themselves are used… So it has to be good. The initial testing went great, so we chose to change the architecture by introducing a separate component which was exclusively responsible for fetching the data. It would execute the query, fetch and pack the results and send them using HTTP streaming back to the originating request application.

Figure 9. Modified fetching architecture

Best of all, we are not limited to existing Go database drivers anymore. The JDBC Drivers for any relational database can be easily found and packed within our application.

THE RESULTS

Was any performance benefit achieved?

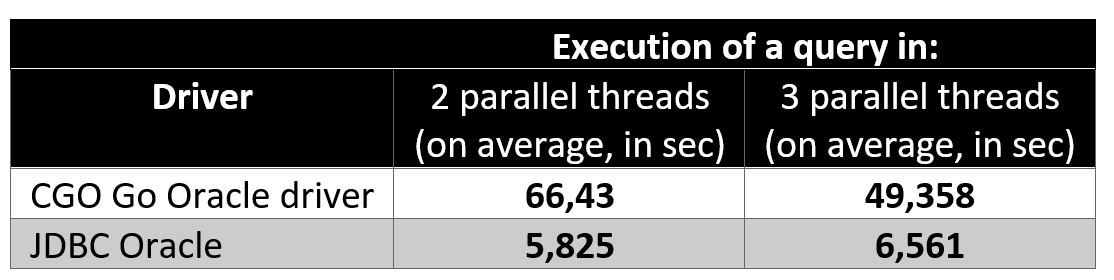

We used a 1,3mil. recordset and fetching was tested in 4 scenarios, two of which being the most important ones.

Figure 10. Fetching data from remote database

Figure 11. Fetching data from local database

The execution times we got are listed below:

Note: we used the same optimization parameters like FetchSize for both drivers

Talk about a huge improvement! It would seem JDBC is up to 10-11x faster than the Go drivers, but what about the profiling?

It seems the CPU load is now equally spread across the code, meaning more stable and consistent performance, which is a feature any application would take, every time.

To summarise, don’t be afraid to mix technologies, they can work together.

Happy coding!