Ivan Jakas

DATA ENGINEER

Introduction

In this blog post, the focus will be on the process of deploying OpenMetadata on a Kubernetes cluster hosted on Azure. To find out more about OpenMetadata’s capabilities, check out this review by our data engineers, Kristina and Dominik. Keep in mind that the review was written prior to the 1.0.0 release, which contained some interesting new features.

All three major cloud providers offer managed Kubernetes services:

- Google Kubernetes Engine (GKE)

- Amazon Elastic Kubernetes Service (EKS)

- Azure Kubernetes Service (AKS)

OpenMetadata currently provides EKS and GKE-specific deployment instructions on their official page, but there are no instructions when it comes to deployments on AKS. This might change in the near future, as stated in this GitHub request by one of the OpenMetadata developers.

The main reason for cloud-specific instructions is the way Airflow works. The OpenMetadata Helm charts depend on Airflow, and Airflow expects a persistent volume that supports the ReadWriteMany access mode (the volume can be mounted as read-write by many nodes). On every cloud-managed cluster, you have to configure such a volume manually, because the default storage class provisions block disks that only support the ReadWriteOnce access mode (read-write by a single node). The same applies to Azure and its Kubernetes service, AKS.

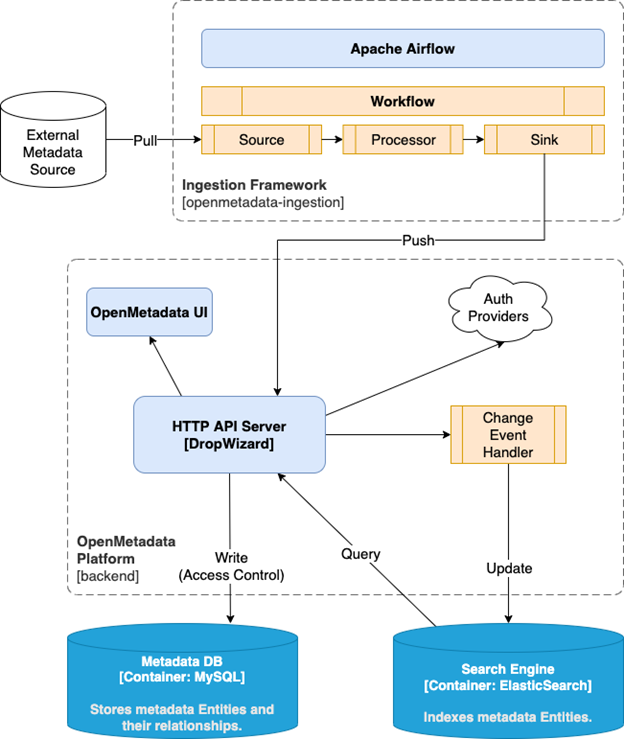

OpenMetadata deployment components

The OpenMetadata architecture diagram shows all of the OpenMetadata dependencies (Elasticsearch, MySQL, and Airflow) and their positions in the OpenMetadata end-to-end metadata platform. OpenMetadata uses MySQL to store the metadata catalog, Elasticsearch to store entity change events and make them searchable through search indexes, and Airflow to run the ingestion pipelines. These three components need to be set up before the OpenMetadata server itself is deployed.

Image taken from the OpenMetadata Architecture page

Cluster configuration

Starting with Kubernetes version 1.21, Azure Kubernetes Service supports the Container Storage Interface (CSI) drivers for Azure Disk and Azure Files. They come with many features, but the most important one for this use case is support for the ReadWriteMany access mode on Persistent Volumes.

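A Kubernetes volume is a directory that the containers in a pod can access. When a volume is backed by a Persistent Volume, its data outlives individual pods, and with the ReadWriteMany access mode the same storage can be shared between pods running on different nodes.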
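To make the sharing concrete, here is a minimal sketch of a Deployment whose two replicas mount the same ReadWriteMany claim. It is not part of the OpenMetadata setup: the Deployment name shared-volume-demo and the claim name my-rwx-pvc are hypothetical, and the claim is assumed to exist in the same namespace with accessModes set to ReadWriteMany, like the claims created later in this post.

```yaml
# shared_volume_demo.yaml (hypothetical example, not part of the OpenMetadata setup)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shared-volume-demo            # hypothetical name
  namespace: <your-namespace>
spec:
  replicas: 2                         # two pods, possibly scheduled on different nodes
  selector:
    matchLabels:
      app: shared-volume-demo
  template:
    metadata:
      labels:
        app: shared-volume-demo
    spec:
      containers:
        - name: writer
          image: busybox
          # Each pod appends a timestamp every 30 seconds to its own file on the shared volume
          command: ["/bin/sh", "-c", "while true; do date >> /shared/$HOSTNAME.log; sleep 30; done"]
          volumeMounts:
            - name: shared
              mountPath: /shared
      volumes:
        - name: shared
          persistentVolumeClaim:
            claimName: my-rwx-pvc     # hypothetical claim with accessModes: [ReadWriteMany]
```

Both pods write into the same directory, so each one can see the other's log file. With a ReadWriteOnce claim backed by an Azure Disk, the second pod would typically fail to start if it landed on a different node, which is the limitation Airflow runs into.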

First, create your AKS cluster with the CSI drivers enabled. These drivers allow Kubernetes to provision and mount Azure Disk and Azure Files volumes.

New cluster

The following Azure CLI command creates a new AKS cluster with the CSI drivers enabled:

```bash
az aks create --name aks-1-22 \
  --resource-group aks-1-22 \
  --location westeurope \
  --node-count 3 \
  --node-vm-size Standard_B2ms \
  --node-zones 1 2 3 \
  --aks-custom-headers EnableAzureDiskFileCSIDriver=true
```

Existing cluster

To enable CSI storage drivers on an existing cluster, you need to update it using the following command:

```bash
az aks update -n myAKSCluster -g myResourceGroup --enable-disk-driver --enable-file-driver
```

It will take some time for the cluster configuration to update. Once the update has finished, connect to your cluster (for example with az aks get-credentials --resource-group myResourceGroup --name myAKSCluster) and start working on the actual deployment.

Namespace

A namespace is a mechanism for isolating a group of resources within a single cluster. It’s advisable to use namespaces in environments with many users. Create a new namespace where your OpenMetadata and related deployments will be logically separated from the rest of the cluster using the following command:

```bash
kubectl create namespace <your-namespace>
```
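If you prefer to keep every resource in version-controlled manifests, the same namespace can also be declared in YAML and created with kubectl apply -f namespace.yaml. The file name is only an example.

```yaml
# namespace.yaml (example file name)
apiVersion: v1
kind: Namespace
metadata:
  name: <your-namespace>   # replace with your namespace, e.g. openmetadata
```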

Storage

With the CSI storage drivers enabled, you can choose between several storage classes for your Persistent Volume Claims to provide the ReadWriteMany volumes that the Airflow deployment needs. The following example uses the built-in azurefile storage class.

```yaml
# logs_dags_pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: openmetadata-dependencies-dags-pvc
  namespace: <your-namespace>
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: azurefile
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: openmetadata-dependencies-logs-pvc
  namespace: <your-namespace>
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
  storageClassName: azurefile
```

Apply the Persistent Volume Claims to your cluster with this command:

```bash
kubectl apply -f logs_dags_pvc.yaml
```

Change the owner and permissions for volumes

Since Airflow pods run as non-root users, they do not have write access to your volumes. In order to fix the permissions, spin up a Job with Persistent Volumes attached and run it. A Job creates one or more pods and will continue to retry execution of the pods until a specified number of them successfully terminate. As pods successfully complete, the Job tracks the successful completions.
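The retry behaviour described above is controlled by a few fields of the Job spec. The fragment below is a sketch of where they would sit in the Job manifest for this step; the values are illustrative assumptions, not requirements of OpenMetadata.

```yaml
# Illustrative Job spec fields (values are assumptions)
spec:
  completions: 1                  # the Job is complete after one pod terminates successfully
  backoffLimit: 4                 # mark the Job as failed after 4 retries
  ttlSecondsAfterFinished: 600    # let Kubernetes delete the finished Job after 10 minutes
  template:
    spec:
      restartPolicy: Never        # failed pods are not restarted in place; the Job creates new ones
```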

The chown command (short for "change owner") is used on Unix and Unix-like operating systems to change the owner of files and directories, while chmod changes their permission bits.

```yaml
# permissions_pod.yaml
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    run: my-permission-pod
  name: my-permission-pod
  namespace: <your-namespace>   # must match the namespace of the PVCs
spec:
  template:
    spec:
      containers:
        - image: busybox
          name: my-permission-pod
          volumeMounts:
            - name: airflow-dags
              mountPath: /airflow-dags
            - name: airflow-logs
              mountPath: /airflow-logs
          # sh -c executes only the single string after -c, so both commands are chained in it
          command: ["/bin/sh", "-c", "chown -R 50000 /airflow-dags /airflow-logs && chmod -R a+rwx /airflow-dags"]
      restartPolicy: Never
      volumes:
        - name: airflow-logs
          persistentVolumeClaim:
            claimName: openmetadata-dependencies-logs-pvc
        - name: airflow-dags
          persistentVolumeClaim:
            claimName: openmetadata-dependencies-dags-pvc
```

Apply the Job to your cluster with this command:

```bash
kubectl apply -f permissions_pod.yaml
```

The Airflow containers run as the Linux user airflow with user ID 50000, which is why the Job changes the owner of both volumes to that ID.
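Azure Files shares are mounted over SMB, which may not keep POSIX ownership changes made with chown. If the Airflow pods still cannot write to the volumes after the Job has run, one alternative, sketched here under that assumption, is a custom storage class whose mount options present the files as owned by user ID 50000. The class name azurefile-airflow is hypothetical; the claims in logs_dags_pvc.yaml would then reference it in storageClassName instead of azurefile.

```yaml
# azurefile_airflow_sc.yaml (hypothetical storage class for the Airflow volumes)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: azurefile-airflow          # hypothetical name
provisioner: file.csi.azure.com    # Azure Files CSI driver
parameters:
  skuName: Standard_LRS
mountOptions:
  - dir_mode=0777
  - file_mode=0777
  - uid=50000                      # files appear to be owned by the airflow user
  - gid=0                          # the official Airflow image uses the root group
  - mfsymlinks
  - cache=strict
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
```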

OpenMetadata

Now that you have the cluster and all the storage options set up, you can start deploying all of the necessary components.

Deployment

The first step in installing the OpenMetadata dependencies is to add the OpenMetadata Helm repository with the following command:

```bash
helm repo add open-metadata https://helm.open-metadata.org/
```

Deploy the necessary secrets containing the MySQL and Airflow passwords:

```bash
kubectl create secret generic mysql-secrets --namespace <your-namespace> \
  --from-literal=openmetadata-mysql-password=openmetadata_password
kubectl create secret generic airflow-secrets --namespace <your-namespace> \
  --from-literal=openmetadata-airflow-password=admin
kubectl create secret generic airflow-mysql-secrets --namespace <your-namespace> \
  --from-literal=airflow-mysql-password=airflow_pass
```
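The same three secrets can also be written declaratively, for example to keep them alongside the other manifests. In this sketch the stringData field accepts plain-text values, which Kubernetes stores base64-encoded, so the result should be equivalent to the kubectl create secret generic --from-literal commands for this step. The file name is only an example.

```yaml
# secrets.yaml (example file name; declarative equivalent of the kubectl commands)
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secrets
  namespace: <your-namespace>
type: Opaque
stringData:
  openmetadata-mysql-password: openmetadata_password
---
apiVersion: v1
kind: Secret
metadata:
  name: airflow-secrets
  namespace: <your-namespace>
type: Opaque
stringData:
  openmetadata-airflow-password: admin
---
apiVersion: v1
kind: Secret
metadata:
  name: airflow-mysql-secrets
  namespace: <your-namespace>
type: Opaque
stringData:
  airflow-mysql-password: airflow_pass
```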

You then need to override the OpenMetadata dependencies helm values to bind the Azure persistent volumes for DAGs and logs:

```yaml
# values-dependencies.yaml
airflow:
  airflow:
    extraVolumeMounts:
      - mountPath: /airflow-logs
        name: aks-airflow-logs
      - mountPath: /airflow-dags/dags
        name: aks-airflow-dags
    extraVolumes:
      - name: aks-airflow-logs
        persistentVolumeClaim:
          claimName: openmetadata-dependencies-logs-pvc
      - name: aks-airflow-dags
        persistentVolumeClaim:
          claimName: openmetadata-dependencies-dags-pvc
    config:
      AIRFLOW__OPENMETADATA_AIRFLOW_APIS__DAG_GENERATED_CONFIGS: "/airflow-dags/dags"
  dags:
    path: /airflow-dags/dags
    persistence:
      enabled: false
  logs:
    path: /airflow-logs
    persistence:
      enabled: false
  externalDatabase:
    type: mysql
    host: mysql.<your-namespace>.svc.cluster.local
    port: 3306
    database: airflow_db
    user: airflow_user
    passwordSecret: airflow-mysql-secrets
    passwordSecretKey: airflow-mysql-password
```

For more information on the Airflow Helm chart values, refer to the airflow-helm documentation.

To deploy OpenMetadata dependencies, use this command:

```bash
helm install openmetadata-dependencies open-metadata/openmetadata-dependencies --values values-dependencies.yaml --namespace <your-namespace>
```

Run kubectl get pods --namespace <your-namespace> to check whether all of the dependency pods are running. You should get a result similar to this one:

```
NAME                                                       READY   STATUS    RESTARTS
elasticsearch-0                                            1/1     Running   0
mysql-0                                                    1/1     Running   0
openmetadata-dependencies-db-migrations-5984f795bc-t46wh   1/1     Running   0
openmetadata-dependencies-scheduler-5b574858b6-75clt       1/1     Running   0
openmetadata-dependencies-sync-users-654b7d58b5-2z5sf      1/1     Running   0
openmetadata-dependencies-triggerer-8d498cc85-wjn69        1/1     Running   0
openmetadata-dependencies-web-64bc79d7c6-7n6v2             1/1     Running   0
```

Note that the pods whose names start with the prefix openmetadata-dependencies belong to the Airflow deployment.

OpenMetadata recently released version 1.0.0, which brought some changes to the deployment process. The most important one for us was that the Airflow configuration was renamed to pipelineServiceClientConfig. The list of default values can be found here. These are the values that need to be set for everything to work properly:

```yaml
# values.yaml
global:
  pipelineServiceClientConfig:
    apiEndpoint: http://openmetadata-dependencies-web.<your-namespace>.svc.cluster.local:8080
    metadataApiEndpoint: http://openmetadata.<your-namespace>.svc.cluster.local:8585/api
```
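Both endpoints are in-cluster DNS names of the form service.namespace.svc.cluster.local. As an illustration, assuming your namespace is called openmetadata (a hypothetical choice), the filled-in values would read as follows.

```yaml
# values.yaml, assuming the namespace is "openmetadata"
global:
  pipelineServiceClientConfig:
    # Airflow web server Service created by the openmetadata-dependencies release
    apiEndpoint: http://openmetadata-dependencies-web.openmetadata.svc.cluster.local:8080
    # OpenMetadata server API, called back by the Airflow ingestion pipelines
    metadataApiEndpoint: http://openmetadata.openmetadata.svc.cluster.local:8585/api
```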

Next, deploy the OpenMetadata component by running the following command:

```bash
helm install openmetadata open-metadata/openmetadata --namespace <your-namespace> --values values.yaml
```

Exposing the OpenMetadata UI

To expose the OpenMetadata UI, create a Service of type LoadBalancer that selects the OpenMetadata pods, save it as openmetadata_lb.yaml, and apply it with kubectl apply -f openmetadata_lb.yaml:

```yaml
# openmetadata_lb.yaml
apiVersion: v1
kind: Service
metadata:
  name: open-metadata-lb
  namespace: <your-namespace>
spec:
  selector:
    app.kubernetes.io/instance: openmetadata
    app.kubernetes.io/name: openmetadata
  ports:
    - port: 8585
  type: LoadBalancer
```

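With this approach, Azure provisions a public IP address for the Service. Once the EXTERNAL-IP column of kubectl get service open-metadata-lb --namespace <your-namespace> shows an address, you can open the UI at that address on port 8585.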
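Because the address is public, you may want to limit who can reach it. Kubernetes Services support a loadBalancerSourceRanges field, which AKS translates into network security group rules. In this sketch 203.0.113.0/24 is a placeholder documentation range standing in for your own office or VPN addresses.

```yaml
# openmetadata_lb.yaml with restricted access (203.0.113.0/24 is a placeholder range)
apiVersion: v1
kind: Service
metadata:
  name: open-metadata-lb
  namespace: <your-namespace>
spec:
  type: LoadBalancer
  selector:
    app.kubernetes.io/instance: openmetadata
    app.kubernetes.io/name: openmetadata
  ports:
    - port: 8585
      targetPort: 8585
  loadBalancerSourceRanges:
    - 203.0.113.0/24   # only clients in this range can reach the UI
```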

And that is it! You now have a fully functional OpenMetadata instance installed on your cluster.