Benoit Boucher

DATA ENGINEER

Part I. How datacentres nevertheless impact the environment

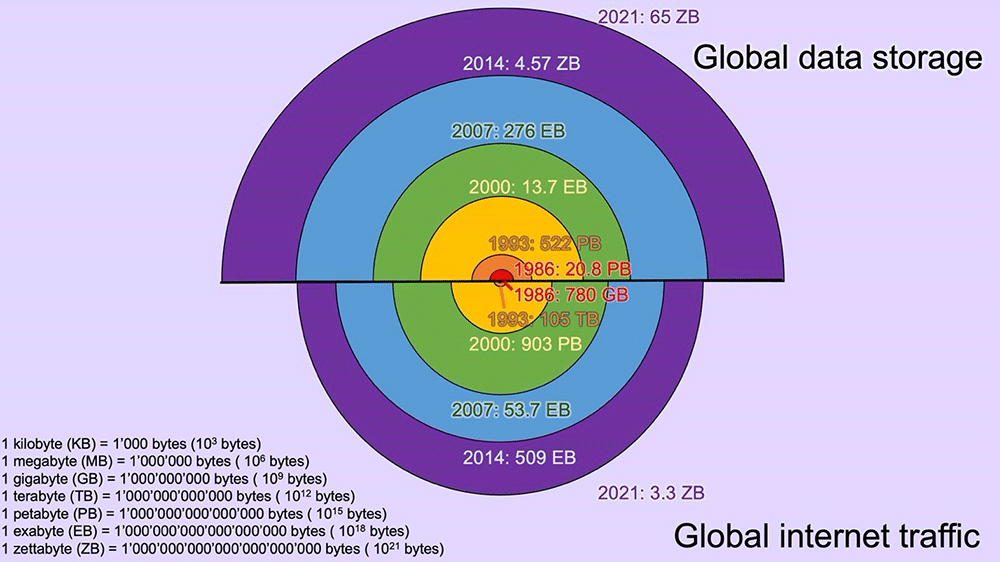

« Quest’è il mio regno. Lo ingrandirò. » (This is my kingdom. I will enlarge it.) So says Falstaff in Verdi’s eponymous opera, patting his belly. The internet has become the new Falstaff. To give you an idea of what we mean: data is quantified in bytes (one line of text is approximately 70 bytes, a very short email approximately 1 kilobyte (1’000 bytes), and a 3-minute song approximately 5 megabytes (5’000’000 bytes)), and global data storage is expected to reach 200 zettabytes (200’000’000’000’000’000’000’000 bytes) by 2025. This volume keeps growing rapidly compared with just a few decades ago (see Figure 1, top).

Most of this data is stored in datacentres. A company or organization can have its own datacentre on-premises, or a remote one in dedicated infrastructure away from its physical premises, such as a private cloud. It can also use somebody else’s datacentre, run by a third-party company that handles the maintenance of the infrastructure: this is a public cloud.
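To make these orders of magnitude concrete, here is a small sketch that converts the approximate sizes quoted above into bytes and expresses 200 zettabytes in each of them. It assumes decimal (SI) prefixes, as the text does (1 kB = 1’000 bytes). The sizes are the article’s rough figures, not measurements.

```python
# Decimal (SI) prefixes, as used in the text: 1 kB = 1'000 bytes.
PREFIXES = {"": 1, "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
            "P": 10**15, "E": 10**18, "Z": 10**21}


def to_bytes(value: float, prefix: str = "") -> float:
    """Convert a size given with an SI prefix (e.g. 5, "M" for 5 MB) into bytes."""
    return value * PREFIXES[prefix]


# Approximate sizes quoted in the text.
sizes = {
    "line of text": to_bytes(70),
    "very short email": to_bytes(1, "k"),
    "3-minute song": to_bytes(5, "M"),
}
global_storage_2025 = to_bytes(200, "Z")

for item, size in sizes.items():
    print(f"200 ZB = {global_storage_2025 / size:.1e} x {item}")
```

Running it shows, for example, that 200 ZB would hold about 4 × 10^16 three-minute songs.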

As you can imagine, global internet traffic is also increasing rapidly and constantly (see Figure 1, bottom). This traffic is made possible by a vast network of undersea cables, as shown on the following interactive map: https://www.submarinecablemap.com. Sorry to ruin the illusion, but these clouds are not in the sky: they are somewhere on land or at the bottom of the oceans.

Figure 1 – Top: estimated global data storage for 1986, 1993, 2000, 2007, 2014 and 2021. Bottom: estimated global internet traffic for the same years.

Our internet is obese, and unfortunately this excess weight translates into a steep increase in electricity consumption every year. Producing electricity emits greenhouse gases (GHG): more when using coal, oil or natural gas, less when using hydro, wind or nuclear power. How strong the environmental impact of this growing consumption will be depends on how far the world has progressed in moving energy production towards more sustainable sources. As Françoise Berthoud, a computer scientist at Gricad (Grenoble, France) and founder of EcoInfo, said in a CNRS News article in 2018: “According to estimates, new technologies alone account for 6 to 10% of the world’s electrical consumption, and thus nearly 4% of greenhouse gas emissions.” Around 30% of this electricity powers terminal equipment (computers, mobile phones and other smart devices), another 30% is used by the datacentres that host our services, and 40% supplies the networks.
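Combining the two figures above gives a rough sense of each component’s share of world electricity. The sketch below simply multiplies the quoted 6 to 10% ICT share by the quoted 30/30/40 split. For datacentres this gives roughly 1.8 to 3%, the same range as the datacentre figure given in the next section. It is back-of-the-envelope arithmetic, not a new estimate.

```python
# Share of world electricity used by ICT, per the estimate quoted above.
ICT_SHARE_LOW, ICT_SHARE_HIGH = 0.06, 0.10

# Split of ICT electricity between its three components, as quoted above.
SPLIT = {"terminal equipment": 0.30, "datacentres": 0.30, "networks": 0.40}


def world_share(component: str) -> tuple[float, float]:
    """Return the (low, high) share of world electricity for one ICT component."""
    fraction = SPLIT[component]
    return ICT_SHARE_LOW * fraction, ICT_SHARE_HIGH * fraction


for name in SPLIT:
    low, high = world_share(name)
    print(f"{name:>18}: {low:.1%} to {high:.1%} of world electricity")
```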

Data engineers check all the boxes listed above: they use laptops, phones and tablets (terminal equipment), help companies migrate to the cloud (datacentres), and listen to music online while working or watch videos on demand at home (networks). In this article, we focus on the environmental impact of information and communications technologies (ICT) from a data engineer’s point of view, notably through their electricity consumption. Datacentres are examined first and foremost, but they are not the only cause.

In this article, we start with the notable positive achievements of datacentres regarding their environmental impact. In the next article, we will raise a few concerns about datacentres, before briefly discussing what other actors (policy makers, companies, data engineers) are doing or can do to limit the environmental impact of ICT.

Datacentres’ energy consumption

Datacentres currently consume about 2% of the world’s electricity, but that figure is expected to reach 8% by 2030.

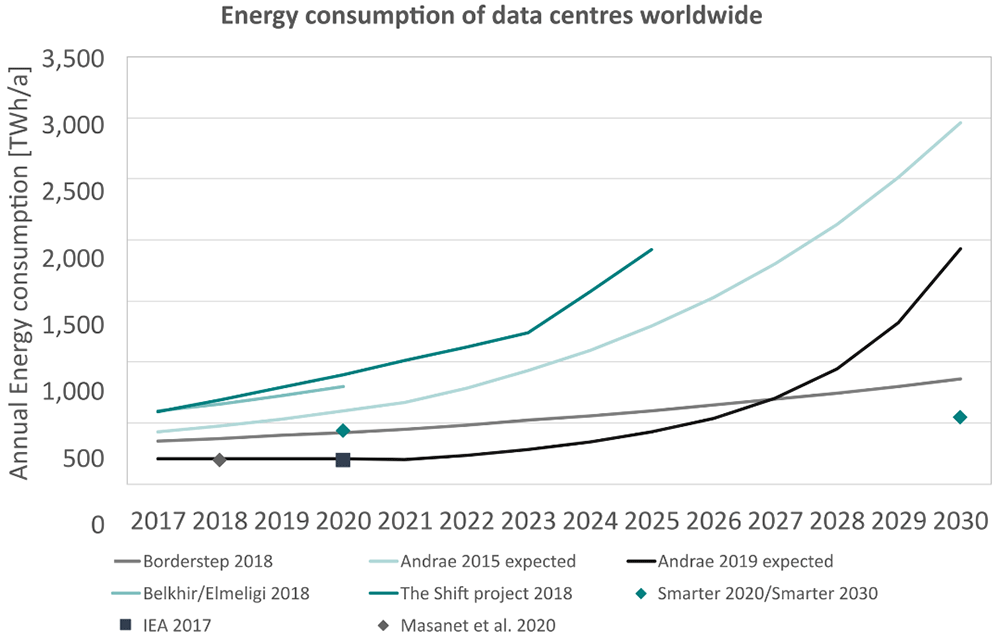

Energy is measured in watt-hours (Wh). A traditional 60-watt incandescent light bulb consumes 60 Wh in one hour. Nowadays, such bulbs have been replaced by compact fluorescent lamps (CFL), which draw 15 watts and therefore consume 15 Wh in one hour. Nearly 1 kWh (1’000 Wh) is needed to power a laptop for a day and a half. Not far from the Syntio headquarters here in Zagreb, Croatia, the Velebit pumped storage power plant (RHE Velebit), near the Adriatic coast, produces an average of 430 GWh (430’000’000’000 Wh) a year, whereas the Krško nuclear power plant in Slovenia produces an average of 5.53 TWh (5’530’000’000’000 Wh) a year.

Figure 2, from the EU report “Energy-efficient Cloud Computing Technologies and Policies for an Eco-friendly Cloud Market”, gives an idea of the predicted worldwide energy consumption of datacentres over the coming years. Although the predictions for 2030, based on different approaches, range from alarmist to more modest scenarios, the trend is clearly upward.
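The unit arithmetic above (energy = power × time) can be sketched as follows. It converts the two power plants’ annual output into “laptop-days”. It assumes the text’s rough rule of thumb that 1 kWh keeps a laptop running for about 1.5 days. The helper names are illustrative.

```python
def energy_wh(power_w: float, hours: float) -> float:
    """Energy in watt-hours used by a device drawing power_w watts for `hours` hours."""
    return power_w * hours


# Rough figure from the text: ~1 kWh keeps a laptop running for ~1.5 days.
LAPTOP_DAYS_PER_KWH = 1.5


def laptop_days(wh: float) -> float:
    """Express an amount of energy (in Wh) as days of laptop use."""
    return wh / 1_000 * LAPTOP_DAYS_PER_KWH


incandescent_wh = energy_wh(60, 1)  # 60 W bulb for one hour
cfl_wh = energy_wh(15, 1)           # 15 W CFL for one hour
velebit_wh = 430e9                  # RHE Velebit, ~430 GWh per year
krsko_wh = 5.53e12                  # Krško, ~5.53 TWh per year

print(f"A CFL saves {incandescent_wh - cfl_wh:.0f} Wh per hour of lighting")
print(f"RHE Velebit: ~{laptop_days(velebit_wh):.2e} laptop-days per year")
print(f"Krško: ~{laptop_days(krsko_wh):.2e} laptop-days per year")
print(f"Krško produces ~{krsko_wh / velebit_wh:.0f} times more than RHE Velebit")
```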

One main difference between the approaches is whether they take the improvement of server capacities into account (the more modest scenarios do, the alarmist ones do not). In nearly 20 years, from 2000 to 2018, the energy efficiency of servers was multiplied by 4. In other words, a server now delivers 4 times more computations for the same electricity consumption. However, this improvement of server capacities is not expected to continue indefinitely.
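To put the factor of 4 in perspective, we can ask what constant yearly improvement would produce it over 2000–2018. The source gives only the overall factor, so spreading it evenly across the years is purely an illustrative assumption. Under that assumption, the result is roughly 8% per year, which is the same as doubling about every 9 years.

```python
import math


def annual_rate(total_factor: float, years: float) -> float:
    """Constant yearly rate r such that (1 + r) ** years == total_factor."""
    return total_factor ** (1 / years) - 1


# Assumes the 4x efficiency gain was spread evenly over 2000-2018.
r = annual_rate(4, 2018 - 2000)
doubling_years = math.log(2) / math.log(1 + r)
print(f"~{r:.1%} more efficient each year, doubling every ~{doubling_years:.0f} years")
```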

To get a very rough estimate, take the annual global electricity demand of approximately 30’000 TWh in 2030 predicted by the International Energy Agency (IEA), and the 2030 predictions shown in Figure 2: ~3’000 TWh/a for Andrae 2015, ~2’000 TWh/a for Andrae 2019, ~900 TWh/a for Borderstep 2018 and ~500 TWh/a for Smarter 2020/Smarter 2030. Datacentres would then account for approximately 10%, 6.7%, 3% and 1.7% of global electricity demand, respectively, the last two scenarios being more reassuring than the first two.
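The percentages above are just each scenario’s 2030 prediction divided by the IEA’s global demand. This short sketch reproduces them. The scenario values are the approximate readings from Figure 2 quoted in the text.

```python
# 2030 global electricity demand predicted by the IEA (TWh per year), as quoted above.
GLOBAL_DEMAND_2030_TWH = 30_000

# Approximate 2030 datacentre predictions read from Figure 2 (TWh per year).
scenarios = {
    "Andrae 2015": 3_000,
    "Andrae 2019": 2_000,
    "Borderstep 2018": 900,
    "Smarter 2020/Smarter 2030": 500,
}

shares = {name: twh / GLOBAL_DEMAND_2030_TWH for name, twh in scenarios.items()}
for name, share in shares.items():
    print(f"{name:<26} {share:.1%}")
```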

Figure 2: Overview of studies on the energy demand of datacentres worldwide up to the year 2030, from the EU report “Energy-efficient Cloud Computing Technologies and Policies for an Eco-friendly Cloud Market”.

From datacentres to public clouds

This trend is partly explained by the emergence and success of public clouds. As mentioned earlier, these new kinds of datacentres are provided by third-party companies that handle the maintenance of the infrastructure.

To understand the advantages of public clouds, we can draw a parallel with public transportation. When we use our own car (infrastructure), most of the time not all of the available seats (resources) are used (unfortunately, we do not drive single-seat Formula 1 cars). We have to pay for insurance and fuel (cost), and we have to take the car in for a technical check-up once in a while (maintenance).

When we use public transportation, on the other hand, we share the infrastructure (bus, tram, train, etc.). We use only the resources we need (usually one seat, possibly an additional spot for luggage) and we minimize the cost (we pay only for our ticket), while the public transportation provider (the bus, tram or train company) bears the rest of the cost (fuel, electricity, insurance) and deals with the maintenance (technical checks of the vehicles). Part of the whole infrastructure is thus abstracted away. We pay only for what we use, and when we do not use it, it leaves more room for others.

Similarly, when a user (a company or an organization, for example) runs its own infrastructure (datacentres), most of the time not all of the available resources (servers) are used, even though they still incur electricity and maintenance costs. For example, a retail company needs to support peak workloads during events like Black Friday, Christmas or Singles’ Day (11 November). Such events do not occur every day, however, and most of the time the datacentre is not used at its maximum capacity.

When users move to a public cloud, in contrast, part of the whole infrastructure (the maintenance of the datacentre, its costs, etc.) is abstracted away from them. They pay only for what they use, and when they use fewer resources in the cloud, the released resources can be used by other users.
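The retail example above can be sketched with a hypothetical yearly load profile. The profile has a steady baseline plus three peak days, and all numbers are invented for illustration. An on-premises datacentre must be sized for the highest peak all year round. An idealised public cloud follows the demand day by day. The ratio of the two gives the on-premises utilization rate.

```python
# Hypothetical daily load of a retail company over one year, in "servers' worth" of work:
# a steady baseline plus three peak days. All numbers are illustrative.
BASELINE = 20
PEAK_DAYS = {"Singles' Day": 100, "Black Friday": 120, "Christmas": 90}

demand = [BASELINE] * (365 - len(PEAK_DAYS)) + list(PEAK_DAYS.values())

# On-premises: enough servers for the highest peak, running all year.
on_prem_capacity = max(demand)
on_prem_server_days = on_prem_capacity * len(demand)

# Public cloud (idealised): capacity follows demand day by day and is released afterwards.
cloud_server_days = sum(demand)

utilization = cloud_server_days / on_prem_server_days
print(f"On-premises: {on_prem_capacity} servers, average utilization {utilization:.0%}")
print(f"Server-days: on-premises {on_prem_server_days}, cloud {cloud_server_days}")
```

This model is deliberately simplistic. A real cloud provider also keeps spare capacity, but it shares that capacity across many customers whose peaks rarely coincide.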

This has a direct impact on the users’ environmental footprint. It has been shown that, for the same server performance, companies moving to the public cloud reduced their carbon footprint by 88%, and this gap is expected to widen in the future. On average, those companies’ on-premises datacentres had a utilization rate of only about 18%.

Hyperscale datacentres

Another advantage of public clouds is the notion of hyperscale datacentres. What are they? Hyperscale datacentres are datacentres that are continuously maintained and updated with the newest technologies. Here are a few of their characteristics:

- Replacing machines after only a few years, sometimes with machines that are not even on the market yet.

- Getting rid of comatose servers (also termed zombies: servers that draw power without doing any useful work). In 2015, nearly 30% of the servers in some datacentres were found to be comatose. Such servers also pose security risks, as they are not kept up to date.

- Sometimes using state-of-the-art technologies to create more efficient datacentres, for example:

  - Re-using the water used for cooling.

  - Using oil submersion cooling with non-conductive, non-corrosive oils. Some oils can absorb up to 1’500 times more heat than air.

  - Using machine learning to optimize cooling.

  - Using the cold energy released during the regasification of liquefied natural gas (LNG) to cool datacentres.

  - Using hydrogen fuel cells.

- Using virtualization, which lets datacentre operators deliver more work with fewer servers (see Box 1).

- Having a low power usage effectiveness (PUE), the metric often used to measure the energy efficiency of datacentres (see Box 2).
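Getting rid of comatose servers starts with finding them. The sketch below shows one simple way to do that: flag servers that draw power but show almost no CPU or network activity over an observation window. The `ServerStats` fields, the fleet and both thresholds are hypothetical and chosen for illustration. They are not an industry standard. A real audit would also check dependencies before switching anything off.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    """Hypothetical monitoring summary for one server over an observation window."""
    name: str
    avg_cpu_percent: float      # average CPU utilization
    network_mb_per_day: float   # average network traffic
    avg_power_w: float          # average power draw


# Illustrative thresholds (assumptions, not a standard).
CPU_THRESHOLD_PERCENT = 2.0
NETWORK_THRESHOLD_MB = 1.0


def is_comatose(s: ServerStats) -> bool:
    """Flag a server that draws power but shows (almost) no useful activity."""
    return (s.avg_power_w > 0
            and s.avg_cpu_percent < CPU_THRESHOLD_PERCENT
            and s.network_mb_per_day < NETWORK_THRESHOLD_MB)


fleet = [
    ServerStats("web-01", 35.0, 5_000.0, 250.0),
    ServerStats("legacy-07", 0.5, 0.2, 180.0),
    ServerStats("batch-03", 1.5, 800.0, 200.0),  # low CPU but still moving data
]

comatose = [s for s in fleet if is_comatose(s)]
wasted_kwh_per_year = sum(s.avg_power_w for s in comatose) * 24 * 365 / 1_000
print([s.name for s in comatose], f"{wasted_kwh_per_year:.0f} kWh/year")
```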

– – – – – – – – – – – –

Box 1 – Virtualization

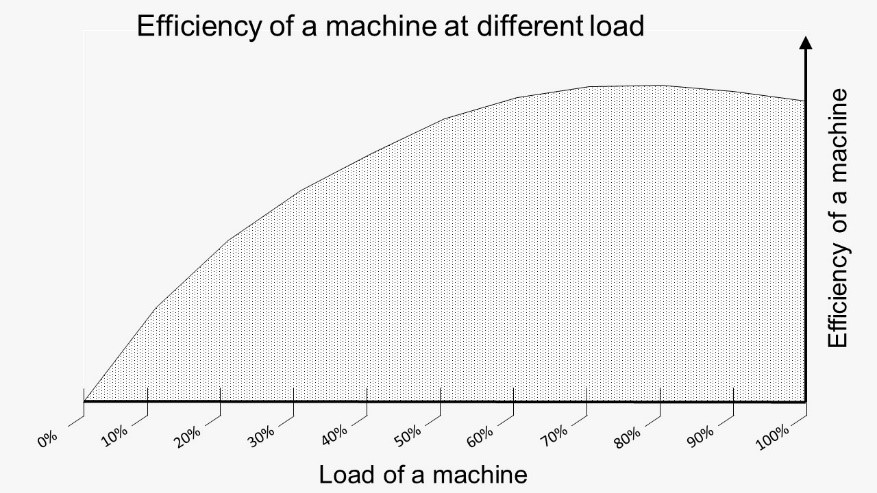

Computer virtualization refers to the abstraction of computer resources, for instance running two or more logical computer systems on one set of physical hardware. Are there good reasons to do so? As schematized in the figure, a machine is at its most efficient when running at 70-80% of its capacity, and a machine running at only 20% is about half as efficient. In some traditional datacentres, it is common for dozens of machines to run at only 20% of their capacity so that they can absorb sporadic load peaks. However, a server loaded at 20% still consumes a large part of the energy of a server loaded at 90%. Grouping several virtual servers on the same physical machine is therefore both energy- and cost-efficient, as long as their load peaks do not systematically coincide. So yes, there are good reasons to do so.

Figure: Efficiency of a machine at different loads.
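Consolidation can be sketched as a bin-packing problem: place several lightly loaded servers onto as few physical hosts as possible. The example uses first-fit decreasing, a standard bin-packing heuristic, not necessarily what any given hypervisor does. It also uses an assumed linear power model with illustrative values (100 W idle, 200 W at full load). The loads are hypothetical.

```python
# Hypothetical loads of eight lightly used servers, in % of one host's capacity.
loads = [20, 20, 15, 25, 20, 10, 20, 15]

# Assumed linear power model (illustrative values): idle host 100 W, fully loaded 200 W.
IDLE_W, PEAK_W = 100.0, 200.0
TARGET_PERCENT = 80  # fill hosts up to ~80%, leaving headroom for load peaks


def host_power(load_percent: float) -> float:
    """Power drawn by one running host at the given load."""
    return IDLE_W + (PEAK_W - IDLE_W) * load_percent / 100


def first_fit_decreasing(items: list[int], capacity: int) -> list[list[int]]:
    """Place each load, largest first, on the first host that still has room for it."""
    hosts: list[list[int]] = []
    for item in sorted(items, reverse=True):
        for host in hosts:
            if sum(host) + item <= capacity:
                host.append(item)
                break
        else:  # no existing host had room: start a new one
            hosts.append([item])
    return hosts


hosts = first_fit_decreasing(loads, TARGET_PERCENT)
before_w = sum(host_power(load) for load in loads)       # one physical server per load
after_w = sum(host_power(sum(host)) for host in hosts)  # consolidated hosts
print(f"{len(loads)} servers -> {len(hosts)} hosts, {before_w:.0f} W -> {after_w:.0f} W")
```

With these numbers, eight servers fit on two hosts and the total draw falls from 945 W to 345 W, mostly because six idle baselines disappear. A linear model does not reproduce the efficiency drop above 80% shown in the figure, which is why the sketch caps each host at 80%.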

– – – – – – – – – – – –

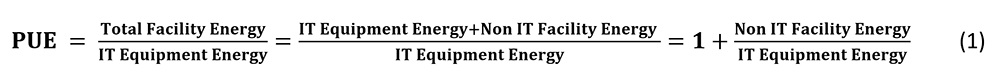

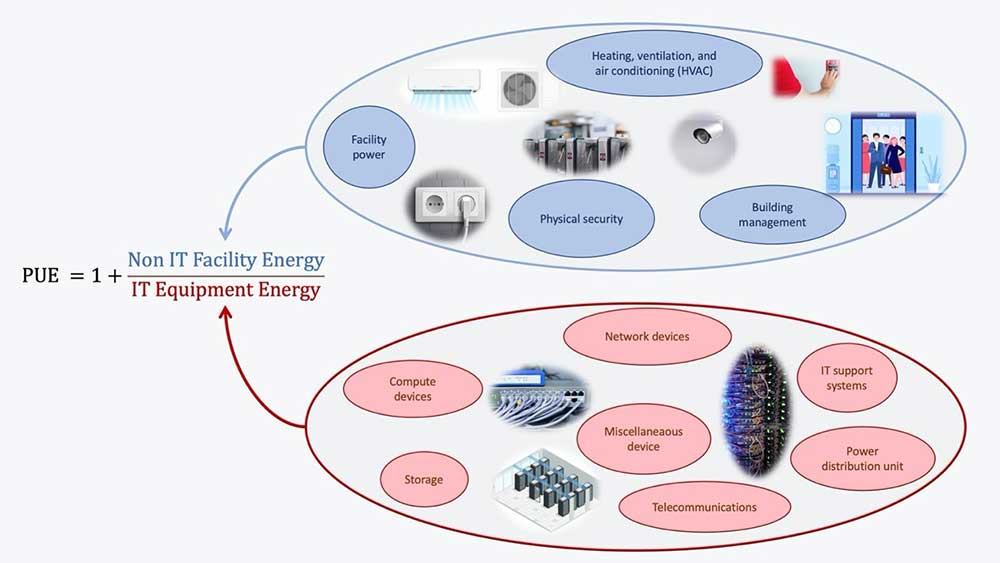

Box 2 – The Power Usage Effectiveness (PUE)

The PUE was put forward by The Green Grid in 2007 as a short-term proposal to measure the energy efficiency of a datacentre. The PUE is the total amount of energy used by a datacentre (Total Facility Energy) divided by the energy used by its IT equipment (IT Equipment Energy), as in Equation (1): PUE = Total Facility Energy / IT Equipment Energy (1). The Total Facility Energy is the sum of the IT Equipment Energy and the Non IT Facility Energy. The following figure gives an idea of what falls into the IT Equipment Energy and Non IT Facility Energy categories:

Figure: Overview of the components of IT Equipment Energy and Non IT Facility Energy that can be found in a datacentre.

Being a ratio of two energies, the PUE is dimensionless. The ideal value is 1.0, meaning that all the energy delivered to the datacentre is used strictly by the IT equipment. A value above 1.0 means that part of the energy delivered to the datacentre is used for something other than the IT equipment, such as cooling, building management, utility plugs, lighting, etc. (when the PUE was introduced, cooling accounted for a large part, up to 50%, of the non-IT facility energy consumption). For example, a PUE of 3.0 indicates that the datacentre’s total energy demand is three times greater than the energy needed to power the IT equipment.

– – – – – – – – – – – –
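The PUE definition in Box 2 translates directly into code. The sketch below computes it from IT and non-IT energy. It also shows the share of energy that never reaches the IT equipment, which is 1 − 1/PUE. The yearly figures are hypothetical.

```python
def pue(it_equipment_kwh: float, non_it_facility_kwh: float) -> float:
    """Power Usage Effectiveness: Total Facility Energy / IT Equipment Energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT Equipment Energy must be positive")
    total_facility_kwh = it_equipment_kwh + non_it_facility_kwh
    return total_facility_kwh / it_equipment_kwh


def non_it_share(pue_value: float) -> float:
    """Fraction of the total facility energy that does not reach the IT equipment."""
    return 1 - 1 / pue_value


# Hypothetical yearly figures for one datacentre (kWh).
print(pue(1_000_000, 0))          # ideal case: 1.0
print(pue(1_000_000, 2_000_000))  # the PUE of 3.0 example from the text
print(f"{non_it_share(3.0):.0%} of the energy goes to non-IT uses at PUE 3.0")
```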

Even so, datacentres still consume a large amount of electricity. Over the last decade, the different actors of the datacentre industry have been encouraging each other to reduce their environmental footprint:

- Sometimes by using renewable sources of energy for electricity, such as:

  - Solar energy, as in Clarksville, Tennessee, and in Singapore

  - Geothermal energy, as in Iceland

  - Wind energy, as in Esk and Donegal, Ireland; Hamina, Finland; Viborg, Denmark; and Delfzijl and Wieringermeer, the Netherlands

  - Biogas energy, as in Cheyenne, Wyoming

  - Hydropower, as in Luleå, Sweden

- Sometimes by using renewable sources for cooling the datacentres, such as:

  - Groundwater, as in Grenoble, France

  - Geothermal cooling, as in Iowa City, Iowa

  - Sea water, as in the Natick project off the Orkney Islands in the north of Scotland, UK, or in the aforementioned Hamina, Finland

  - Cold air, as in the aforementioned Luleå, Sweden

- Sometimes by reusing waste energy:

  - Reusing waste heat to warm up houses or swimming pools, as in Odense, Denmark; Uitikon, Switzerland; Aalsmeer, the Netherlands; Bordeaux, France; and Oslo, Norway.

Cloud providers’ commitments

Following the Paris Agreement in 2015, some cloud providers announced their ambition to become carbon neutral or net zero carbon (or net zero GHG) within the next few decades. Some went even further in this one-upmanship, announcing that they would become carbon-free or even carbon negative, and they can count on the support of their employees to meet these objectives.

Being carbon negative, however, does not mean that datacentres will start absorbing carbon. What does it mean, then? When we plug in our phone or laptop, we cannot choose to consume exclusively renewable energy, and the same goes for datacentres. Instead, they offset their carbon footprint by investing in renewable energy production. If a cloud provider delivers as much renewable energy to the grid as it consumes from it, we generally talk about net zero emissions, and if it delivers more, about carbon negative. These terms, however, lack a clear definition and are interpreted according to each company’s own criteria. In contrast, carbon neutral arose after the Kyoto Protocol in 1997 and means that a company counterbalances its carbon emissions through projects that capture carbon from the atmosphere, such as planting trees, or by buying carbon offsets or carbon credits. Carbon-free (again, a term no dictionary currently defines) would mean that only carbon-free energy (hydropower, nuclear, solar, wind, etc.) is consumed at every moment of the day. In any case, it is worth noting that ICT companies have accounted for more than half of all corporate renewable energy power purchase agreements in recent years.
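The difference between matching volumes (the net zero idea) and matching every moment of the day (the carbon-free idea) can be illustrated with a hypothetical hourly profile. Since the source notes that these terms lack clear definitions, this is only one reading of them. For brevity the sketch uses a single day instead of a year, and all values are invented. Renewable surpluses in one hour are not allowed to cover deficits in another.

```python
# Hypothetical hourly profile for one day (MWh): the datacentre's consumption and the
# output of the renewable plants it has contracted. Values are illustrative only.
consumption = [10] * 24
renewables = [2] * 6 + [18] * 12 + [4] * 6  # solar-heavy: strong by day, weak at night


def volume_match(cons: list[float], ren: list[float]) -> float:
    """Volume matching: total renewable output divided by total consumption."""
    return sum(ren) / sum(cons)


def hourly_carbon_free_share(cons: list[float], ren: list[float]) -> float:
    """Share of consumption covered by renewables produced in the same hour."""
    matched = sum(min(c, r) for c, r in zip(cons, ren))
    return matched / sum(cons)


print(f"volume match: {volume_match(consumption, renewables):.0%}")
print(f"hour-by-hour carbon-free share: {hourly_carbon_free_share(consumption, renewables):.0%}")
```

Here the provider produces 105% of its consumption in volume, so on paper it is net zero or even carbon negative. Yet only 65% of its consumption is covered hour by hour, because the night-time hours still rely on the grid mix.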

Towards edge computing

Finally, a word about edge computing (also termed fog computing), which can be seen as the next level of abstraction of the infrastructure: computing, storage, etc. happen at the end user’s location (on users’ mobile phones, for example). It is worth noting that a completely distributed architecture (all computing, storage, etc. happening exclusively at the end user’s location) is nearly 14% more energy efficient than a fully centralized architecture (all computing, storage, etc. happening in a central datacentre to which users connect), notably because the former does not need large cooling systems. This kind of architecture is gaining popularity with the emergence of the IoT and could offer another way to reduce the environmental footprint of datacentres in the future.

To be continued…

As we can see, all is for the best in the best of all possible worlds. But is it really so? In the next article, we will list some criticisms concerning the environmental impact of datacentres.