Emil Huzjak, Josip Skender

DATA ENGINEERS

Introduction

Data pipelines can get pretty complex, and when you’ve got a bunch of moving parts, something’s bound to break sooner or later. Even if your data system is solid and can handle hiccups, there are times when you need to extract specific info from data packets, or maybe you’ve got new data consumers on the scene. The catch? The original data source might not have that info anymore. Sometimes, for privacy or speed reasons, it makes sense to store data in the cloud instead of re-initiating the transfer from the source. Whether you’re new to the cloud or a seasoned data pro, having a simple way to back up and resend your data is a game-changer.

Enter Persistor. It’s tailor-made for cloud setups, letting you store all messages from the broker of your choice, no matter the domain or topic of interest. The beauty? You can easily resend any subset of them to a different broker whenever you need. It’s super flexible, with no constant tweaking required. Instead of juggling dedicated services for every situation, Persistor supports your preferred broker: set it up once and forget about it. Navigating large quantities of data is easy when the data is deliberately stored in a data lake, indexed, and ready to resend, and that’s exactly what Persistor offers. The benefit is most obvious at very large volumes, such as in the IoT domain, where other storage arrangements just aren’t fast or spacious enough to hold years-old information.

Chances are, you’re dealing with data related to different projects or teams. Persistor lets you pick out messages tied to specific concepts, even from a particular time window, and resend them for analysis elsewhere or to the same topic as before. How? By cleverly using the metadata attached to each message. Simple, efficient, and a real lifesaver for anyone dealing with the intricacies of data pipelines.

Our solution

Persistor is a lightweight cloud data backup and resubmission system. It can persist data from message brokers, from lesser-known ones to mainstream ones such as Apache Kafka and Google Cloud Pub/Sub, store it with a wide array of storage providers, and send it again later. It has four modular components, which allow it to back up any number of topics at once.

At its core, the Persistor component is a subscriber to a topic: it stores the messages it receives and sends information about them to another topic. That other topic is consumed by the Indexer component of Persistor, which stores all the metadata about your messages. Stowing your data away safely is fine, but at high volumes it becomes unmanageable if you can’t read it selectively. With a log of the metadata, you can filter by any value you want, which further encourages data versioning and descriptive thematic separation at the source end.
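To make the idea of a metadata log concrete, here is a minimal Python sketch of the kind of record that could be published to the index topic for each stored message. The `MessageMetadata` class and its field names (`message_id`, `location`, `offset`, `attributes`, and so on) are hypothetical illustrations, not Persistor’s actual schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class MessageMetadata:
    """Hypothetical index entry published for every persisted message."""
    message_id: str
    source_topic: str
    publish_time: datetime
    location: str  # path of the batch file in the data lake
    offset: int    # position of the message inside that file
    attributes: dict = field(default_factory=dict)  # e.g. version, key, region

    def to_json(self) -> str:
        # Serialize for the index topic; the datetime becomes an ISO 8601 string.
        record = asdict(self)
        record["publish_time"] = self.publish_time.isoformat()
        return json.dumps(record, sort_keys=True)


meta = MessageMetadata(
    message_id="msg-001",
    source_topic="orders",
    publish_time=datetime(2024, 1, 15, 10, 30, tzinfo=timezone.utc),
    location="orders/v1/2024/01/15/10/batch-0001",
    offset=0,
    attributes={"version": "v1"},
)
print(meta.to_json())
```

Because the record points at a file and a position rather than carrying the payload, the index stays small while still allowing filtering on any attribute.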

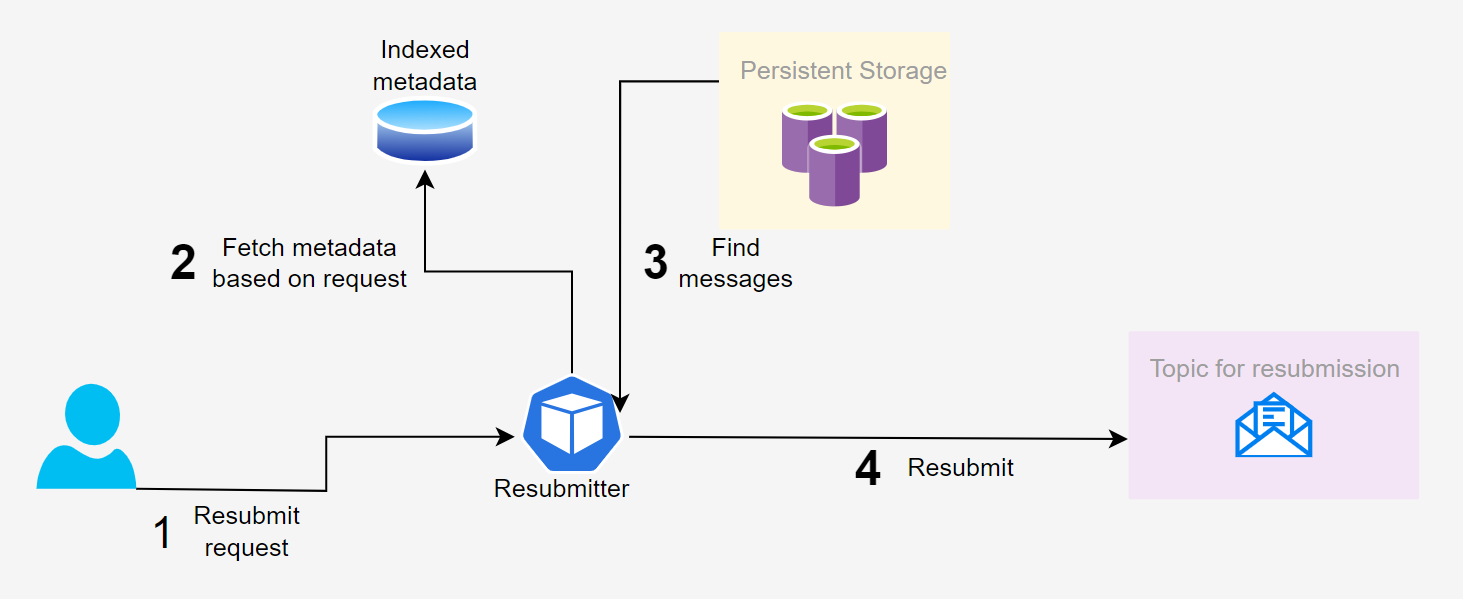

The Resubmitter component is a small but powerful API server that receives resubmission requests and forwards the matching messages to any other topic. It can resubmit messages from many topics without any change to its configuration. Messages carrying the data you need are often fleeting, and the Resubmitter lets you reliably replay data streams not just in one direction but in many. If you have many data sources connected to various topics and multiple endpoints where you’d like to receive the information, the Resubmitter acts as a central hub that orchestrates all of it with just a couple of requests.
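The sketch below illustrates why one Resubmitter instance can serve many routes: each request carries its own source topic, destination topic, time range, and attributes, so nothing in the server’s configuration has to change between requests. It only builds HTTP requests with Python’s standard `urllib.request.Request`. The endpoint `RESUBMITTER_URL` and the JSON field names are assumptions for illustration, not the documented Resubmitter API; check the Persistor documentation for the real request format.

```python
import json
from datetime import datetime, timezone
from urllib.request import Request

# Hypothetical endpoint; the real Resubmitter URL and route depend on your deployment.
RESUBMITTER_URL = "http://resubmitter.example:8080/resubmit"


def build_resubmit_request(source_topic, destination_topic, start, end, attributes=None):
    """Build (but do not send) a POST request describing one resubmission."""
    if start >= end:
        raise ValueError("start must be earlier than end")
    body = {  # hypothetical field names
        "source_topic": source_topic,
        "destination_topic": destination_topic,
        "time_range": {"from": start.isoformat(), "to": end.isoformat()},
        "attributes": attributes or {},
    }
    return Request(
        RESUBMITTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


jan = datetime(2024, 1, 1, tzinfo=timezone.utc)
feb = datetime(2024, 2, 1, tzinfo=timezone.utc)
# One Resubmitter, two different routes, no configuration change in between.
replay_orders = build_resubmit_request("orders", "orders-reprocessing", jan, feb, {"version": "v2"})
replay_sensors = build_resubmit_request("sensors", "analytics-sandbox", jan, feb)
```

Against a running Resubmitter, sending one of these would be a matter of calling `urllib.request.urlopen(replay_orders)`.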

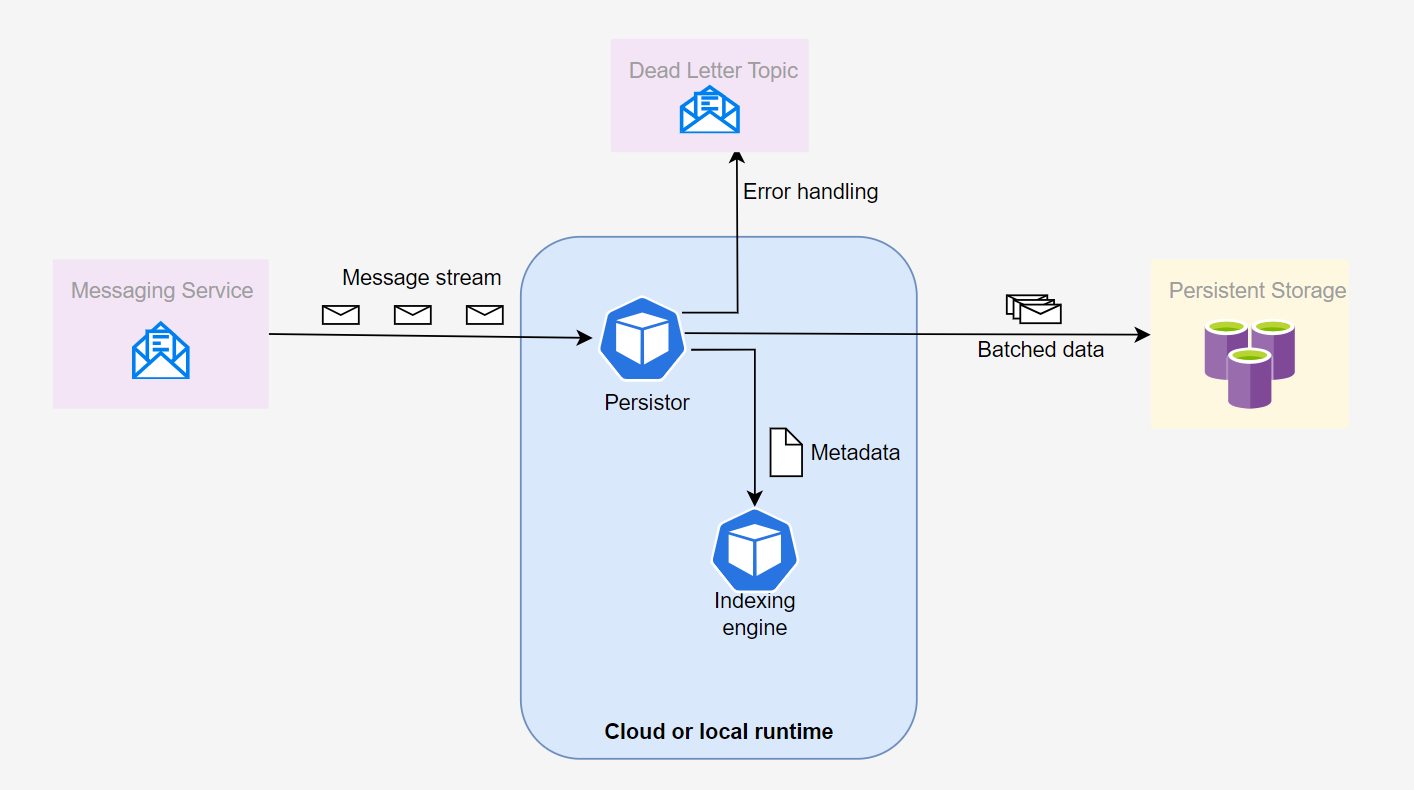

The workflow is super simple. Messages that arrive at a topic of your choice are stored by Persistor in the storage of your choice. The metadata is sent to an additional topic, where the Indexer comes into play and stores it in a MongoDB database. Take a look at the diagram of Persistor and its indexing engine: Persistor is the glue that holds your data brokers and backup destinations together. The message source is pictured on the left and the storage on the right. The Indexer works with Persistor to keep the metadata indexed and cataloged.
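On the indexing side, the flow boils down to consuming metadata messages and writing them to a database. The following sketch uses a dictionary as a stand-in for the MongoDB collection. Keying entries on topic and message ID is our own assumption, not necessarily what the Indexer does, but it makes redelivered metadata messages harmless.

```python
import json


class InMemoryIndex:
    """Stand-in for the MongoDB collection that holds the metadata."""

    def __init__(self):
        self._docs = {}

    def upsert(self, doc):
        # Key on (topic, message id) so a redelivered metadata message
        # replaces the existing entry instead of creating a duplicate.
        self._docs[(doc["source_topic"], doc["message_id"])] = doc

    def find(self, source_topic):
        return [d for (topic, _), d in self._docs.items() if topic == source_topic]

    def __len__(self):
        return len(self._docs)


def run_indexer(metadata_messages, index):
    """Consume raw JSON metadata messages from the index topic and store them."""
    for raw in metadata_messages:
        index.upsert(json.loads(raw))


incoming = [
    '{"source_topic": "orders", "message_id": "a", "location": "f1", "offset": 0}',
    '{"source_topic": "orders", "message_id": "b", "location": "f1", "offset": 1}',
    '{"source_topic": "orders", "message_id": "a", "location": "f1", "offset": 0}',  # redelivery
]
index = InMemoryIndex()
run_indexer(incoming, index)
print(len(index))  # 2
```

With the real `pymongo` driver, the same idempotent write could be expressed as `collection.replace_one({"source_topic": ..., "message_id": ...}, doc, upsert=True)`.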

As for mishaps like connection errors and server downtime, don’t worry: a dead-letter topic provides a safe place to temporarily park unlucky messages for later processing, trigger alerts, and analyze problems. Alternatively, the messages can stay on the original topic until the problem is solved.
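Here is a minimal sketch of the dead-letter idea combined with a simple alert rule: messages that fail to store are diverted to a list standing in for the dead-letter topic, and a sliding window of recent outcomes yields a failure rate to compare against a threshold. `DeadLetterRouter`, `flaky_store`, the window size, and the threshold are all hypothetical; this is not Persistor’s implementation.

```python
from collections import deque


class DeadLetterRouter:
    """Stores messages, diverts failures to a dead-letter list, and tracks a failure rate."""

    def __init__(self, store_fn, window=100, alert_threshold=0.05):
        self.store_fn = store_fn               # writes one message to storage; raises on failure
        self.dead_letters = []                 # stand-in for the dead-letter topic
        self.outcomes = deque(maxlen=window)   # True = stored, False = dead-lettered
        self.alert_threshold = alert_threshold

    def handle(self, message):
        try:
            self.store_fn(message)
            self.outcomes.append(True)
        except Exception as exc:  # broad on purpose: any storage error is dead-lettered
            self.dead_letters.append({"message": message, "error": repr(exc)})
            self.outcomes.append(False)

    def failure_rate(self):
        if not self.outcomes:
            return 0.0
        return self.outcomes.count(False) / len(self.outcomes)

    def should_alert(self):
        return self.failure_rate() > self.alert_threshold


def flaky_store(message):
    # Simulated storage that fails for some messages.
    if message.startswith("bad"):
        raise ConnectionError("storage unavailable")


router = DeadLetterRouter(flaky_store, window=10, alert_threshold=0.1)
for msg in ["ok-1", "bad-1", "ok-2", "ok-3", "bad-2", "ok-4", "ok-5", "ok-6", "ok-7", "ok-8"]:
    router.handle(msg)
print(router.failure_rate(), router.should_alert())  # 0.2 True
```

The bounded `deque` keeps only the most recent outcomes, so an alert reflects current pipeline health rather than the whole history.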

With both data and metadata safe and sound, the Resubmitter can use the metadata to locate the data you want and send it to a new topic. Upon receiving a resubmit request that specifies the source and destination topics, a time range, and custom message attributes, it fetches the Indexer-provided metadata for the matching messages, downloads the files that contain them from the data lake, and sends the messages to the destination topic.
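The download-and-send step can be sketched as follows, assuming the Indexer has already returned the matching metadata records, each with a file `location` and an `offset` within that file (both hypothetical field names). Grouping the records by file means each data-lake file is downloaded once, however many of its messages were requested. `read_file` and `publish` are placeholders for a storage client and a broker producer.

```python
from collections import defaultdict


def resubmit(selected_metadata, read_file, publish, destination_topic):
    """Republish the messages described by the selected metadata records.

    Records are grouped by the data-lake file that holds them, so each file
    is downloaded once no matter how many of its messages were requested.
    """
    offsets_by_file = defaultdict(list)
    for meta in selected_metadata:
        offsets_by_file[meta["location"]].append(meta["offset"])

    sent = 0
    for location in sorted(offsets_by_file):
        messages = read_file(location)  # one download per file
        for offset in sorted(offsets_by_file[location]):
            publish(destination_topic, messages[offset])
            sent += 1
    return sent


# Tiny stand-ins for the data lake and the destination broker.
lake = {"f1": ["m0", "m1", "m2"], "f2": ["n0", "n1"]}
downloads, outbox = [], []


def read_file(location):
    downloads.append(location)
    return lake[location]


def publish(topic, message):
    outbox.append((topic, message))


selected = [
    {"location": "f1", "offset": 2},
    {"location": "f2", "offset": 0},
    {"location": "f1", "offset": 0},
]
count = resubmit(selected, read_file, publish, "orders-reprocessing")
```

Sorting files by path and messages by offset roughly preserves the original publish order only if file paths are time-ordered, which is an assumption about the storage layout.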

Persistor also organizes the stored files into a simple path structure, which lets platforms and users with access to the data lake query the data on their own by the version and time of interest.
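A time- and version-based layout might look like the following sketch. The exact directory order (`<topic>/[<key>/]<version>/<YYYY>/<MM>/<DD>/<HH>/<batch_id>`) is an assumption chosen for illustration, not Persistor’s documented layout; the point is that a plain prefix match is enough to select a version and time window without touching the index.

```python
from datetime import datetime, timezone
from pathlib import PurePosixPath


def batch_path(topic, version, batch_start, batch_id, key=None):
    """Hypothetical layout: <topic>/[<key>/]<version>/<YYYY>/<MM>/<DD>/<HH>/<batch_id>."""
    parts = [topic]
    if key:
        parts.append(key)
    parts += [version, batch_start.strftime("%Y/%m/%d/%H"), batch_id]
    return str(PurePosixPath(*parts))


start = datetime(2024, 1, 15, 10, 5, tzinfo=timezone.utc)
print(batch_path("orders", "v1", start, "batch-0001"))
# orders/v1/2024/01/15/10/batch-0001

# Anyone with data-lake access can then select by version and time with a prefix.
paths = [
    batch_path("orders", "v1", start, "batch-0001"),
    batch_path("orders", "v2", start, "batch-0002"),
]
january_v2 = [p for p in paths if p.startswith("orders/v2/2024/01/")]
```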

Use Cases

- Data reusability: Have you found a bug in your processing, or would you like to change something? Maybe a property was not calculated correctly? Just resubmit the original data to the topic once you have reconfigured the pipeline.

- Adding new processing pipelines: The Resubmitter can simply be asked to send the existing messages to another topic, whether they are recent or many years old. The data isn’t going anywhere!

- Manageable data chunks: You can adjust the batching granularity to suit the frequency and size of your data. Are the payloads coming in by the thousands? Maybe you want larger batches to keep the number of files manageable. When the volume of data drops, Persistor still writes out its batches periodically, keeping the storage fresh and up to date.

- Arranging the data by type: Add a message key to your data and Persistor will sort it into directories accordingly. The storage is easily navigable, so users interested in a specific key can have everything in one place without filtering through unrelated data. If your data changes from day to day, or even hourly, you can separate the messages by time, but otherwise, you can let them pile into the same directory and access them together when the need arises.

- Automatic dead lettering: Worry no more about where your data is going when it’s not going where it’s supposed to be going. A dead-letter topic is a cheap landing zone where messages hit by unexpected issues can be aggregated and analyzed together, and it’s easy to chain all sorts of services onto it for analytics and alerting. With Persistor keeping things running smoothly, the dead-letter topic will mostly be collecting dust, but it’s there when the going gets tough.

- Alerting: A dead-letter topic makes it easy to configure automatic alerts for failure rates above certain thresholds. If you would like to improve your response time to pipeline errors, give Persistor a chance.
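The batching and key-based arrangement described in the list above can be sketched as a buffer per message key that is flushed either when it reaches a size limit or when its oldest message has waited too long. `KeyedBatcher` and its parameters are hypothetical names, not Persistor’s configuration options; the injectable `clock` only makes the time-based flush easy to test.

```python
import time
from collections import defaultdict


class KeyedBatcher:
    """Buffers messages per key and hands back a batch when it is full or too old."""

    def __init__(self, max_messages, max_age_seconds, clock=time.monotonic):
        self.max_messages = max_messages
        self.max_age_seconds = max_age_seconds
        self.clock = clock
        self._buffers = defaultdict(list)
        self._opened_at = {}  # key -> time the current batch received its first message

    def add(self, key, message):
        """Buffer a message; return (key, batch) if this fills the batch, else None."""
        if key not in self._opened_at:
            self._opened_at[key] = self.clock()
        self._buffers[key].append(message)
        if len(self._buffers[key]) >= self.max_messages:
            return key, self._take(key)
        return None

    def flush_expired(self):
        """Return (key, batch) pairs whose oldest message has waited too long."""
        now = self.clock()
        expired = [k for k, opened in self._opened_at.items()
                   if now - opened >= self.max_age_seconds]
        return [(k, self._take(k)) for k in expired]

    def _take(self, key):
        self._opened_at.pop(key, None)
        return self._buffers.pop(key, [])


# A fake clock makes the time-based flush deterministic.
now = [0.0]
batcher = KeyedBatcher(max_messages=3, max_age_seconds=60, clock=lambda: now[0])
batcher.add("eu", "m1")
batcher.add("us", "m2")
batcher.add("eu", "m3")
full = batcher.add("eu", "m4")      # ('eu', ['m1', 'm3', 'm4'])
now[0] = 61.0
stale = batcher.flush_expired()     # [('us', ['m2'])]
```

In a long-running service, `flush_expired()` would be called on a timer, so quiet keys still get written out periodically while busy ones flush on size. Each returned key could then map to its own directory in the data lake.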

How can Persistor help you in your industry?

E-commerce: In the fast-paced world of e-commerce, effective data management is the linchpin of sustainable growth and success. Persistor is an indispensable solution here, tailored to meet the intricate demands of online retail. Consider order processing: Persistor ensures that every transaction is securely recorded, laying the foundation for transparent financial tracking and streamlined operations. Near real-time inventory management becomes a breeze, giving businesses the agility to respond to market demands promptly.

Pharmaceuticals: Stay on top of research breakthroughs, manage large-scale production of medicine within your organization, or exchange information across multiple organizations. Cutting-edge simulations and testing often squeeze every last bit out of processing resources. By persisting these valuable results and making them easy to access later on, you make sure that the computational power and time spent don’t go to waste.

Healthcare: Aggregate patient and treatment information without fear of leaking confidential data. Perform processing based on department, time of year, and patient age group, among many other things. Instead of getting all facilities to follow the same protocol when logging data, pick out only the messages related to one subject on the fly when doing a new analysis, and keep track of changes as they happen.

Finance and Banking: In the intricate world of finance and banking, data is currency, and the Persistor is the guardian of this digital vault. It goes beyond mere transaction recording, offering a robust platform for secure data storage and retrieval. Real-time indexing ensures that financial institutions can navigate through vast datasets swiftly, making informed decisions. Persistor’s role extends to compliance, providing a secure haven for sensitive financial records while seamlessly adapting to the ever-evolving regulatory landscape.

Smart home appliances: In the dynamic realm of the Internet of Things (IoT), Persistor is the backbone of connected ecosystems. Determine the optimal settings for energy-efficient air conditioning, lighting, or device charging based on time of year, weather, and much more. Persistor makes this vision tangible by persisting and managing the data. Since a data lake can be made virtually limitless, you can keep track of even the most talkative devices and run detailed comparisons with historical data by resubmitting it to another pipeline. By persisting the flow of traffic to the data lake, you also lay the groundwork for informed business decisions in the future, based on analysis of the stored files. Persistor is the silent conductor orchestrating the harmony of data in the IoT symphony.

Automotive telemetry: Respond to changes in vehicle performance and driver habits. If you discretize sensor data such as mileage and braking deceleration using message versions, you get data sorted into actionable categories. Resubmitting that data to another pipeline, or analyzing it directly in the data lake, highlights changes in machine behavior caused by wear and tear or traffic, and makes necessary adjustments stand out.
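As an illustration of discretizing sensor readings into categories carried on each message, the sketch below maps braking deceleration to a label and stores it as a `version` attribute. The boundaries, labels, and attribute name are made up for the example; real thresholds would depend on the vehicles and the analysis.

```python
from bisect import bisect_right

# Hypothetical boundaries in m/s^2 and matching labels (one more label than boundaries).
BRAKING_BOUNDARIES = [2.0, 4.0, 7.0]
BRAKING_LABELS = ["gentle", "moderate", "hard", "emergency"]


def braking_category(deceleration_ms2):
    """Map a deceleration reading to a discrete category."""
    return BRAKING_LABELS[bisect_right(BRAKING_BOUNDARIES, deceleration_ms2)]


def tag_message(payload):
    """Wrap a sensor payload with a version attribute derived from its category."""
    category = braking_category(payload["deceleration_ms2"])
    return {"data": payload, "attributes": {"version": f"braking-{category}"}}


print(tag_message({"vehicle_id": "car-17", "deceleration_ms2": 5.2})["attributes"])
# {'version': 'braking-hard'}
```

Once the category travels as an attribute, a single resubmit request filtered on, say, `braking-emergency` can replay only the events of interest.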

Differentiation and Features

Intuitive usage and simple deployment – enjoy the benefits of a completely automated backup and retrieval system.

Resubmitting is fast and lightweight. It works the same in any processing environment and fits in anywhere with minimal differences in setup.

Our indexing engine allows you to efficiently filter data by multiple parameters, including publish time and message versions. It’s as easy as listing them all in a request.
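If the metadata lives in MongoDB, a multi-parameter request translates naturally into a MongoDB query document. The sketch below builds one with standard query operators (`$gte`, `$lt`, `$in`) and dot notation for nested attributes; the field names `source_topic`, `publish_time`, and `attributes` are assumptions, not the Indexer’s documented schema.

```python
from datetime import datetime, timezone


def build_index_filter(source_topic, start=None, end=None, attributes=None):
    """Translate request parameters into a MongoDB query document (hypothetical schema)."""
    query = {"source_topic": source_topic}

    # Half-open publish-time window: start <= publish_time < end.
    time_range = {}
    if start is not None:
        time_range["$gte"] = start
    if end is not None:
        time_range["$lt"] = end
    if time_range:
        query["publish_time"] = time_range

    # Dot notation matches fields inside the nested attributes document;
    # a list of values means "any of these".
    for name, value in (attributes or {}).items():
        if isinstance(value, (list, tuple)):
            query[f"attributes.{name}"] = {"$in": list(value)}
        else:
            query[f"attributes.{name}"] = value
    return query


query = build_index_filter(
    "orders",
    start=datetime(2024, 1, 1, tzinfo=timezone.utc),
    end=datetime(2024, 2, 1, tzinfo=timezone.utc),
    attributes={"version": "v2", "region": ["eu", "us"]},
)
```

With `pymongo`, the resulting dictionary could be passed directly to `collection.find(query)`.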

Persistor can be deployed anywhere, as long as the environment supports containers. Thanks to its modularity, you can even run Persistor for your topic on-premises while hosting the indexing engine and the Resubmitter on a remote server. Multiple users and pipelines can therefore share the same metadata storage.

Metrics about throughput and hardware usage are exposed, so you can track performance and decide whether you need more topics to distribute the load.

Choose a directory structure, batching settings, and dead lettering options tailored to your preferences.

Results and Benefits

Cost-consciousness: Persistor can easily handle a large amount of data cheaply and efficiently. And when compared with the costs incurred and time lost when manually resending data and figuring out which data needs to go where, Persistor is a no-brainer.

One-size-fits-all solution: Persistor eliminates the need to context switch between provider-specific solutions for data backup. The same product can be deployed regardless of the broker in question and only a few changes to parameters are required.

Analytics: Data lake storage and resubmission to brokers brings you one huge step closer to powerful and diverse analytic processes via multiple avenues, to squeeze valuable insights from data that you otherwise might not notice.

Unchanged data: Persistor keeps the message content identical even if it’s been resubmitted many times, without the need to worry about data format consistency.

Easy to integrate: The entire Persistor workflow is easily deployed using declarative, simple-to-understand infrastructure-as-code solutions.

Modularity: Focus on backing up your data now and worry about reusing it when that becomes necessary, or enable both features at once. It all depends on what you and your clients currently need.

Tight dev-test process: It has never been easier to send data samples anywhere in the blink of an eye. When debugging or performing end-to-end tests, choose a subset of your data and send it into a pipeline for testing purposes.

Take Action Now: Make data reusability a breeze

Ready to experience the benefits of Dataphos Persistor for yourself? Head over to our website (Homepage) to learn more about Syntio and how we can help you on your data journey, and visit our documentation page (Persistor) to learn more about Persistor and how to get started.

Both community and enterprise versions of Persistor are at your disposal, with the enterprise edition offering round-the-clock support, access to new feature requests, and valuable assistance for developing use cases to drive your business forward.

We strive to eliminate the need to constantly worry about low-level data management, so you can focus on what really matters: business decisions. We hope Persistor is a huge step in this direction and that you can make it part of your toolbelt in no time. Thanks for taking the time to read about our new product, and we look forward to hearing how Persistor has made a difference for you!