Benoit Boucher

DATA ENGINEER

Introduction

Recently, we faced a challenge involving a Google Cloud Function (CF hereafter). First, what is a CF? A CF, or more broadly a Function as a Service, exists at most public cloud providers under different names: Alibaba Function Compute, AWS Lambda, Azure Functions, Google Cloud Functions, IBM Cloud Functions, Oracle Functions, and others (see Figure 1). A CF is a service that lets customers deploy and run their code without building or maintaining any infrastructure. Code can be written in different languages such as .NET, Java, Ruby, Node.js, Go or, in our case, Python. In general, the execution time of these functions is limited to a few minutes. In Google Cloud Platform, a CF can be triggered by an HTTP request, the creation (or deletion) of a file in Google Cloud Storage, a message published to a Pub/Sub topic, and so on.

Figure 1: Function as a Service in different public cloud providers.

The challenge

Let’s summarize our challenge: we have a CF that calls a REST API for each element of a list of identifiers, then parses the results and ingests the data (into Google Cloud Storage, BigQuery, or wherever). The list contains a few thousand identifiers, and the CF has to run once per day. An ideal use case for a CF.
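To make the shape of such a CF concrete, here is a minimal sketch. `call_api`, `ingest` and the identifiers are hypothetical stand-ins (the real API, parsing and ingestion are not shown in this post), and `time.sleep` merely simulates waiting for the network. HTTP-triggered Python Cloud Functions receive a Flask request object, which this sketch ignores.

```python
import time


def call_api(identifier):
    """Hypothetical stand-in for the REST API call (the I/O-bound part)."""
    time.sleep(0.01)  # simulated wait for the network response
    return {"id": identifier, "value": len(identifier)}


def ingest(rows):
    """Hypothetical stand-in for writing rows to Cloud Storage or BigQuery."""
    return len(rows)


def entry_point(request):
    """HTTP-triggered CF entry point; `request` is a Flask request (unused here)."""
    identifiers = ["id-1", "id-2", "id-3"]  # hypothetical; a few thousand in practice
    # One call after another: total run time grows linearly with the list length.
    rows = [call_api(identifier) for identifier in identifiers]
    return f"ingested {ingest(rows)} rows"
```

Because each call waits for the previous one, doubling the list roughly doubles the run time, which is how the 9-minute limit eventually comes into view.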

Over time, as the solution grew, the list of identifiers kept getting longer. The CF was on the verge of timing out, since its execution time is capped at 9 minutes. That is where we came in.

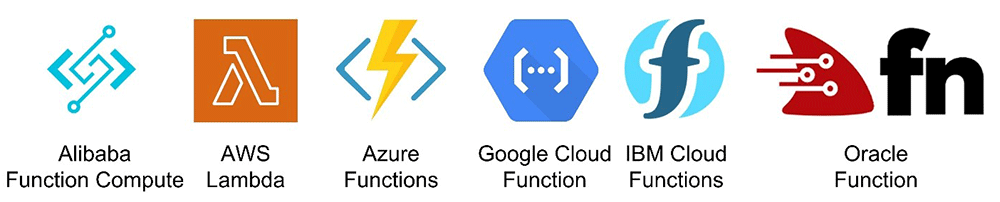

We started by breaking down the CF’s execution time task by task. It turns out that more than 90% of the time was spent on the REST API (GET/POST) requests, that is, sending requests and waiting for responses: I/O-bound work. The remaining 10% went to preparing the URLs, parsing the responses, preparing the data for ingestion, and ingesting it: CPU-bound work (see Figure 2).
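One simple way to obtain such a breakdown is to time each phase with `time.perf_counter` and accumulate the results. The sketch below is an illustration rather than the instrumentation we actually used; the phase names and the `time.sleep` stand-ins are hypothetical.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(phase, timings):
    """Add the wall-clock time spent inside the block to timings[phase]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[phase] = timings.get(phase, 0.0) + time.perf_counter() - start


def report(timings):
    """Return each phase's share of the total time, in percent."""
    total = sum(timings.values())
    return {phase: 100 * seconds / total for phase, seconds in timings.items()}


if __name__ == "__main__":
    timings = {}
    for _ in range(3):
        with timed("request", timings):
            time.sleep(0.09)  # hypothetical stand-in for the REST API call
        with timed("parse_and_ingest", timings):
            time.sleep(0.01)  # hypothetical stand-in for the CPU-bound work
    print(report(timings))
```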

Figure 2: schematic representation of the time required for each task.

The options

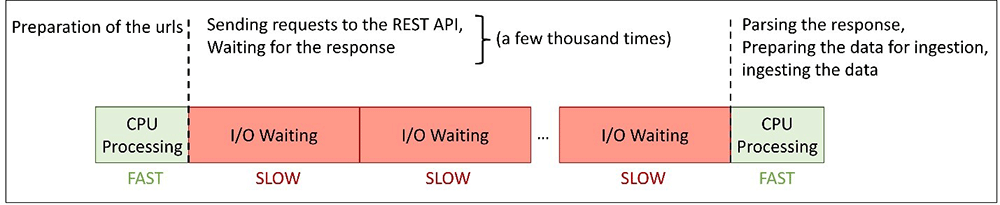

We racked our brains over this challenge. Figure 3 shows a few options that came to mind. There are certainly more, and you may come up with others that you find more convenient; keep in mind that the challenge described here is simplified compared with the “real” one we faced. Anyway, let’s discuss these options.

Figure 3: a few options to tackle the challenge described previously

Before that, however, let’s pause for a second and explain what these services are (if you already know them, just skip this short list):

- GCE (Google Compute Engine) is a service used to create and run VMs on Google infrastructure.

- Cloud Composer is a workflow orchestration service built on Apache Airflow, which is written in Python. It lets you author, schedule, and monitor workflows.

- Cloud Run is a managed compute platform that lets developers run containers that are invocable via requests or events.

① Set up infrastructure to run the code: since we only need to run our code once per day, setting up infrastructure for that alone would be overkill. Alternatively, we could set up a VM just to run our code and shut it down afterwards, but that would require additional development. Let’s see if we can keep a serverless solution.

② Split the Cloud Function, calling a different CF for each element: while this would keep us away from the CF timeout, it would also increase the number of CF invocations, which may incur additional cost over time. Additionally, instead of maintaining a single CF, we would have to maintain several. Not ideal for a short-term solution.

③ Use Cloud Run to take advantage of its longer maximum timeout (60 minutes): switching from a CF to Cloud Run is relatively easy, as we just need to containerize our Python code. But let’s be honest: this doesn’t really solve our challenge, because the time needed to run our code will keep increasing. It would buy us a few weeks at most. Hence, it is fine as a quick fix to avoid the CF timeout, but a real solution requires another option.

④ Rewrite the code in Golang to take advantage of its concurrency features: because we depend on specific Python libraries, switching to another language was impossible. Although it sounds like a good solution, we had to cross it off the list. Python is slow, but maybe we can find a way to speed up our CF in Python, similar to what concurrency offers in Golang.

⑤ Rewrite the code asynchronously using asyncio: asyncio is a library for writing concurrent code, and it is a perfect fit for I/O-bound, high-level structured network code. This is exactly our use case. We need to rework our code a little, as we shall see later, but it seems manageable.

To wrap up:

– ⑤ seems to be our best option,

– ② could be an alternative,

– ③ is a short-term solution but isn’t really solving our challenge,

– ① is a last resort, and

– ④ is out.

Let’s try to use asyncio then!

But what exactly is asyncio?

Typically, Python offers three options for concurrency: multiprocessing, threading, and asyncio. Multiprocessing is recommended for CPU-bound work (for example, mathematical computations where there is no waiting time and the CPU itself is the limit), while the latter two are recommended for I/O-bound work (for example, network connections or file system access, where there is waiting time). Because asyncio is generally faster than threading for I/O-bound work, a common rule of thumb is: “Use asyncio when you can, threading when you must.”
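To see why both threading and asyncio suit I/O-bound work, the sketch below simulates 20 network calls of 0.1 s each. Done sequentially they would take about 2 s; both approaches overlap the waits, threading with one OS thread per call and asyncio within a single thread. `time.sleep` and `asyncio.sleep` are stand-ins for a blocking and an awaitable network call, respectively; this is an illustration, not a benchmark.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_io(delay):
    # Stand-in for a blocking network call (e.g. requests.get).
    time.sleep(delay)
    return delay


async def non_blocking_io(delay):
    # Stand-in for an awaitable network call (e.g. an aiohttp request).
    await asyncio.sleep(delay)
    return delay


def with_threads(n, delay):
    """Run n blocking calls on n worker threads; return the elapsed seconds."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n) as pool:
        list(pool.map(blocking_io, [delay] * n))
    return time.perf_counter() - start


def with_asyncio(n, delay):
    """Run n awaitable calls on one event loop; return the elapsed seconds."""
    async def main():
        await asyncio.gather(*(non_blocking_io(delay) for _ in range(n)))

    start = time.perf_counter()
    asyncio.run(main())
    return time.perf_counter() - start


if __name__ == "__main__":
    n, delay = 20, 0.1
    print(f"sequential: about {n * delay:.1f} s")
    print(f"threading:  {with_threads(n, delay):.2f} s")
    print(f"asyncio:    {with_asyncio(n, delay):.2f} s")
```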

Figure 4: Ed Yourdon: “SIMULTANEUS CHESS EXHIBIT V. JUDIT POLGAR, 1992 – 22”

As described by Miguel Grinberg at PyCon 2017 (his talk is available online and well worth watching), we can compare asyncio to a chess exhibition in which one player, the chess master Judit Polgár in Figure 4, plays against multiple opponents. With this in mind, let’s follow Miguel Grinberg’s assumptions:

– “Let’s say there are 24 people showing up for the event. So, there are 24 games. […] Judit Polgár […] is going to come up with a move on average in 5 seconds. The opponents are going to take 55 seconds. You get a round minute for a pair of moves. And let’s say that for the average game there are 30 moves […]”.

– “Imagine you’re going to do this the synchronous way: for each game it’s going to last 30 minutes. She needs to play 24 of these. So, she is going to be there playing for 12 hours. It’s pretty bad. For her especially”.

– “In reality, these events don’t run like that. […] What they do is that they use an asynchronous mode […]: she walks to the first game and makes her move – 5 seconds […] – and then she leaves the opponent on that table thinking. […] She immediately moves to the second table, and she makes a move on the second table. And she leaves that opponent also thinking and move to the third, and the fourth, and so on. She can go around the room and make a move on all 24 games in 2 minutes. By that time, she is back at the first game and the opponent at the first game had more than enough time to make a move. She can make her next move on that game without waiting. If you do the math, she can play all 24 games – and win them! – in 1 hour. Versus 12 on the synchronous case.”
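The numbers in the quote can be checked with a back-of-the-envelope model. The function names and the simplification (she only waits at a table if a full lap around the room is shorter than one pair of moves) are ours, not Miguel Grinberg’s.

```python
def synchronous_duration(games, moves, master_s, opponent_s):
    """One game after another: every pair of moves is played back to back."""
    return games * moves * (master_s + opponent_s)


def asynchronous_duration(games, moves, master_s, opponent_s):
    """The master makes one move per table per lap around the room.

    A lap takes games * master_s seconds; she only has to wait at a table
    if a lap is shorter than one full pair of moves (master_s + opponent_s).
    """
    lap = max(games * master_s, master_s + opponent_s)
    return moves * lap


if __name__ == "__main__":
    args = dict(games=24, moves=30, master_s=5, opponent_s=55)
    print(synchronous_duration(**args) / 3600, "hours")
    print(asynchronous_duration(**args) / 3600, "hours")
```

With the talk’s numbers this prints 12.0 hours and 1.0 hours: a lap of 24 × 5 s = 120 s is longer than the 60 s an opponent needs, so she never waits.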

This is how asyncio works, but Python code must be modified a little to use it. The code must explicitly say where it waits for a task to complete. This is done by defining functions with the keyword async def (instead of def) and by prefixing a call with the keyword await whenever we want to wait until it completes. This also means that asyncio must be used together with libraries that are able to signal when they are waiting, i.e. libraries whose I/O calls can be awaited. Hence, a library like requests must be replaced by one like aiohttp, which works similarly but has this additional feature. If you don’t do that, you introduce blocking calls into your code: they will spoil the whole speed-up or, worse, starve the other tasks by never handing control back to the event loop. Finally, if your code needs a specific library that has no asyncio-compatible equivalent, you will have to switch from asyncio to threading. Hence the quote above: “Use asyncio when you can, threading when you must.”
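The effect of a blocking call inside a coroutine is easy to reproduce. In this minimal sketch, `asyncio.sleep` stands in for an awaitable call (as with aiohttp) and `time.sleep` for a blocking one (as with requests); both are only simulations of network waits.

```python
import asyncio
import time


async def polite_wait(delay):
    # await hands control back to the event loop, so other tasks can run.
    await asyncio.sleep(delay)


async def blocking_wait(delay):
    # time.sleep blocks the whole event loop: no other task runs meanwhile.
    time.sleep(delay)


async def run_concurrently(wait, n=5, delay=0.2):
    """Start n waits concurrently and return the elapsed wall-clock seconds."""
    start = time.perf_counter()
    await asyncio.gather(*(wait(delay) for _ in range(n)))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"await asyncio.sleep: {asyncio.run(run_concurrently(polite_wait)):.2f} s")
    print(f"time.sleep:          {asyncio.run(run_concurrently(blocking_wait)):.2f} s")
```

The awaited version should finish in roughly 0.2 s, while the blocking version serializes the five waits and takes roughly 1 s, even though both are written as coroutines.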

A simple example

Enough talk, let’s see what it looks like. We can create a new use case to illustrate this. As in our challenge, we will use a REST API and compare the synchronous and asynchronous approaches.

First, we need a REST API. There is this awesome API called Open Food Facts: you use the barcode found on any food product and get information about it (mostly what is written on the product, but also its environmental impact score, processed food score, and nutritional quality score; some applications are even built on top of it to tell you whether you “should” buy a product or to suggest an alternative). Pretty neat, isn’t it?

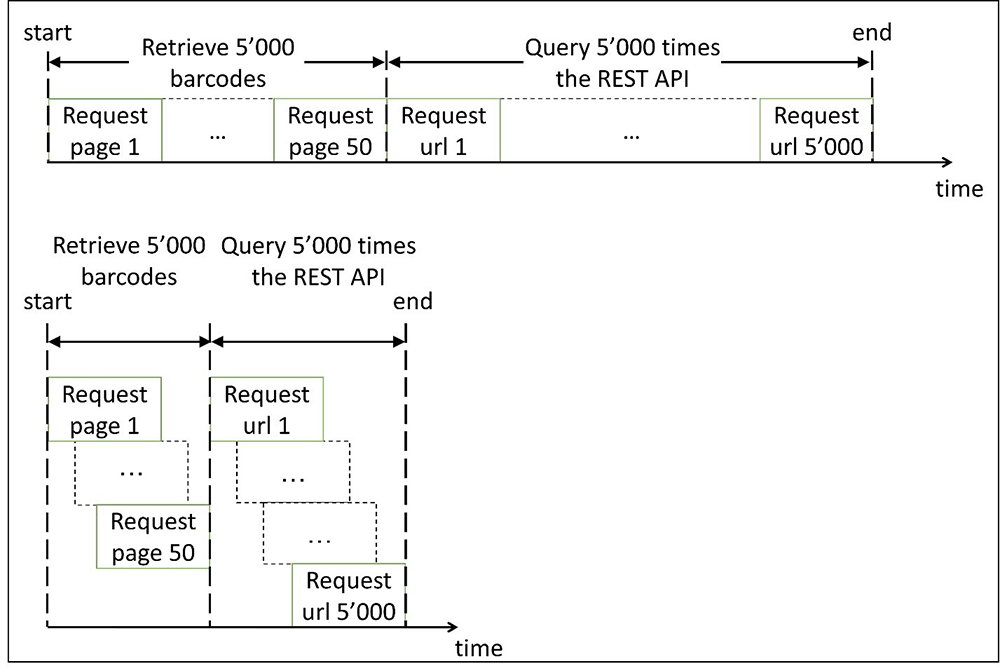

Next, we need some barcodes, a few thousand of them. The Open Food Facts website lists the most recently modified products with their barcodes, 100 products per page. Let’s scrape 50 pages to build a list of 5,000 barcodes. And yes, this can be done asynchronously too. Here, in Figure 5, is our plan:
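The scraping code itself is not shown in the post. As a hedged sketch, assuming that product links on the listing pages follow the `/product/<barcode>/<slug>` pattern of Open Food Facts product URLs, the barcodes can be pulled out of each page’s HTML with a regular expression; the 8 to 14 digit length is also an assumption. Fetching the pages could follow the same aiohttp pattern as the API calls.

```python
import re

# Assumption: product links look like "/product/4056489028154/some-product-name".
PRODUCT_LINK = re.compile(r"/product/(\d{8,14})\b")


def extract_barcodes(html):
    """Return the barcodes found in one listing page, deduplicated, in page order."""
    # dict.fromkeys drops duplicates while preserving insertion order.
    return list(dict.fromkeys(PRODUCT_LINK.findall(html)))


if __name__ == "__main__":
    page = (
        '<a href="/product/4056489028154/espresso">Espresso</a>'
        '<a href="/product/8000070200302/caffe">Caffè</a>'
        '<a href="/product/4056489028154/espresso">Espresso again</a>'
    )
    print(extract_barcodes(page))
```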

Figure 5: the plan of our simple use case for the synchronous (top) and asynchronous (bottom) processes.

Here are the pip commands we must run to install the needed libraries:

pip install requests

pip install aiohttp

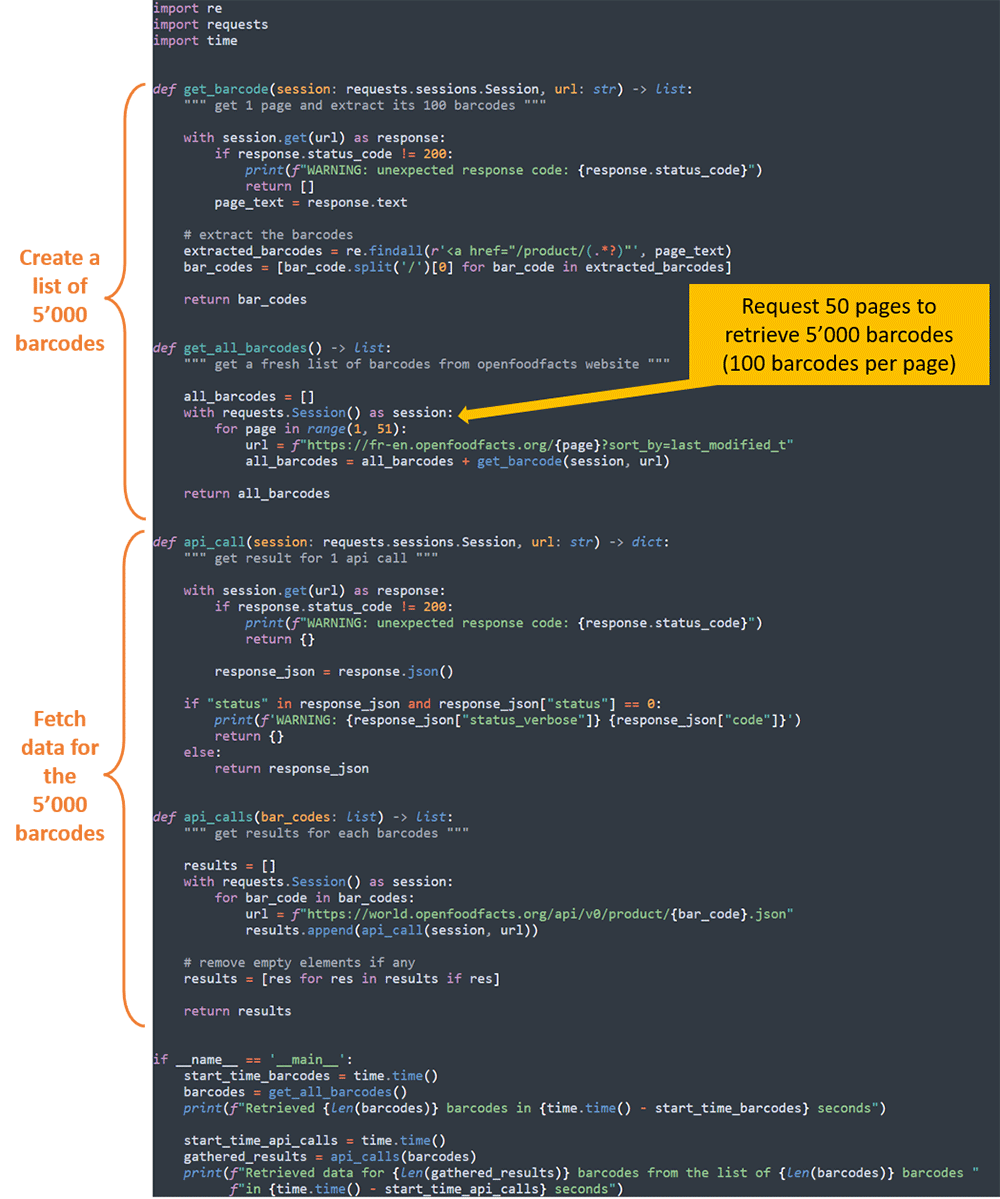

Here is the code for the synchronous process:

Code 1: Python code for the synchronous process.
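Code 1 is only available as an image. As a stand-in, here is a minimal synchronous sketch with requests, not the original script. It assumes the Open Food Facts v0 product endpoint (`/api/v0/product/<barcode>.json`) and that the product name sits under `product.product_name` in the response; check the current API documentation before relying on either.

```python
import time

import requests  # pip install requests

# Assumption: Open Food Facts v0 product endpoint.
PRODUCT_URL = "https://world.openfoodfacts.org/api/v0/product/{barcode}.json"


def product_name(payload):
    """Return the product name from an API payload, or None if it is missing."""
    return (payload.get("product") or {}).get("product_name")


def fetch_all(barcodes):
    results = []
    # A single Session reuses the TCP connection between calls.
    with requests.Session() as session:
        for barcode in barcodes:
            # Each call blocks until the response arrives: the I/O-bound wait.
            response = session.get(PRODUCT_URL.format(barcode=barcode), timeout=30)
            response.raise_for_status()
            results.append((barcode, product_name(response.json())))
    return results


if __name__ == "__main__":
    start = time.perf_counter()
    print(fetch_all(["4056489028154", "8000070200302"]))
    print(f"elapsed: {time.perf_counter() - start:.2f} s")
```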

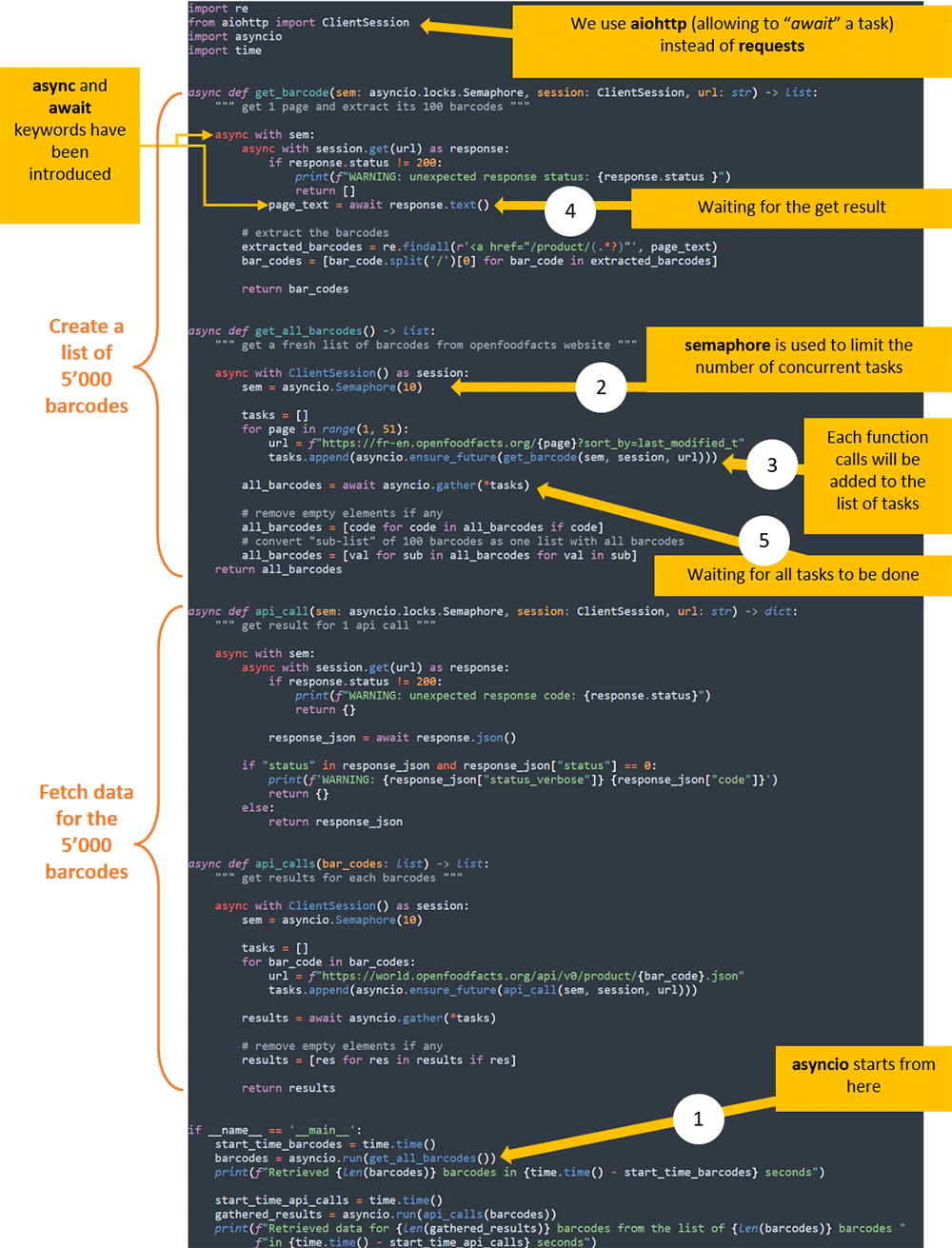

Here is the code for the asynchronous process:

Code 2: Python code for the asynchronous process.
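Code 2 is also only available as an image. Here is a minimal asynchronous sketch with aiohttp under the same assumptions about the endpoint and payload; it is not the original script. It shares one `ClientSession`, limits concurrency with an `asyncio.Semaphore` of 10, and runs all requests with `asyncio.gather`.

```python
import asyncio
import time

import aiohttp  # pip install aiohttp

# Assumption: Open Food Facts v0 product endpoint.
PRODUCT_URL = "https://world.openfoodfacts.org/api/v0/product/{barcode}.json"


def product_name(payload):
    """Return the product name from an API payload, or None if it is missing."""
    return (payload.get("product") or {}).get("product_name")


async def fetch_product(session, semaphore, barcode):
    # The semaphore caps the number of requests in flight at the same time.
    async with semaphore:
        # Each await (sending the request, reading the body) lets other tasks run.
        async with session.get(PRODUCT_URL.format(barcode=barcode)) as response:
            response.raise_for_status()
            payload = await response.json()
    return barcode, product_name(payload)


async def fetch_all(barcodes, max_concurrency=10):
    semaphore = asyncio.Semaphore(max_concurrency)
    timeout = aiohttp.ClientTimeout(total=60)
    async with aiohttp.ClientSession(timeout=timeout) as session:
        tasks = [fetch_product(session, semaphore, barcode) for barcode in barcodes]
        # gather runs the tasks concurrently and keeps the input order in its result.
        return await asyncio.gather(*tasks)


if __name__ == "__main__":
    start = time.perf_counter()
    print(asyncio.run(fetch_all(["4056489028154", "8000070200302"])))
    print(f"elapsed: {time.perf_counter() - start:.2f} s")
```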

Let’s run both scripts and grab a cup of coffee while they run. What kind of coffee would you like: 4056489028154 or 8000070200302?

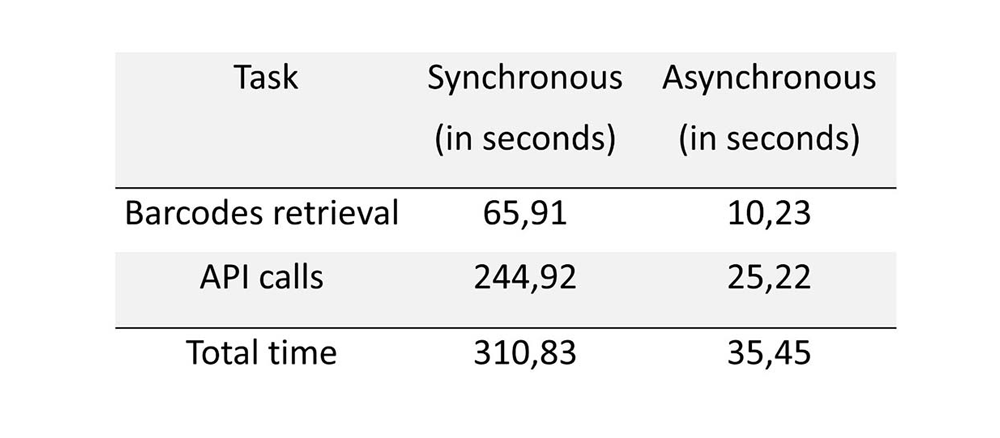

Here, in Table 1, are the results (if you rerun the exact same code, those numbers may change a bit, but we should expect to keep the same synchronous/asynchronous ratio):

Table 1: execution time of synchronous and asynchronous processes (in seconds).

As you can see, by reworking our simple script with asyncio we made it almost 10 times faster.

Downsides of asyncio

stephalicious: “OVERLOAD”

Three side notes about running asyncio. You don’t want these things to happen when using asyncio:

- You don’t want to overload the API. That is, you want to limit the number of concurrent calls. You can do so with a semaphore, as in the previous code: with a semaphore of 10, the 11th task waits until one of the first 10 finishes before it starts.

- You really don’t want to overload the API. Get in touch with the colleagues behind the API to check whether they apply throttling and whether you can safely send them thousands of requests, especially if you use methods other than GET.

- You don’t want to get errors during development. If something goes wrong, you will get as many error messages as you have concurrent tasks, and you don’t want to see that. Trick: start developing with a small list of elements, 2 or 3 for example.
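The semaphore behaviour described in these notes can be checked without any network access. In this sketch, `call_api` is a hypothetical stand-in whose `asyncio.sleep` simulates the API round trip; the code records the peak number of calls running at once, which never exceeds the semaphore’s limit.

```python
import asyncio


async def call_api(item, semaphore, stats):
    """Hypothetical API call; asyncio.sleep stands in for the network round trip."""
    async with semaphore:
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        await asyncio.sleep(0.01)
        stats["in_flight"] -= 1
        return item * 2


async def main(items, limit=10):
    """Run all calls concurrently, at most `limit` at a time; return results and peak."""
    semaphore = asyncio.Semaphore(limit)
    stats = {"in_flight": 0, "peak": 0}
    results = await asyncio.gather(*(call_api(i, semaphore, stats) for i in items))
    return results, stats["peak"]


if __name__ == "__main__":
    # Start with 2 or 3 elements while developing, then scale up.
    print(asyncio.run(main(range(3))))
    print(asyncio.run(main(range(50)))[1])
```

With 50 items and a limit of 10, the first 10 calls acquire the semaphore immediately and every later call waits for one of them to finish, so the recorded peak is 10.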

Using asyncio increases memory usage. You may have to increase the memory of your CF to avoid a “memory limit exceeded” error. But let’s do the math: doubling the memory roughly doubles the price per unit of execution time, but in return asyncio divides the execution time by 10, so at the end of the day you still cut the cost by a factor of 5.
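Written out, with p the price per unit of execution time and t the duration of one run (our notation, using the factors of 2 and 10 stated above):

```latex
C_{\text{sync}}  = p \cdot t
C_{\text{async}} = (2p) \cdot \frac{t}{10} = \frac{p \cdot t}{5} = \frac{C_{\text{sync}}}{5}
```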

Finally, note that asyncio is a relatively recent library that has evolved rapidly. Documentation and code examples found on the internet are sometimes outdated, and you may run into issues when running your code. For example, to run the previous code on Windows 10 (as of August 2021), you may have to replace “asyncio.run(” with the older “asyncio.get_event_loop().run_until_complete(”.

Conclusion

As we have seen, reworking an I/O-bound Python script with asyncio can considerably speed up its execution. Of course, scripts are not always that simple and introducing asyncio may not always be possible, but it is worth considering whenever you want to speed up an I/O-bound script.

Acknowledgments

Thanks to Ivan Čeliković, who told us about asyncio, and to Martina Kocet for helpful discussions about finding the best option.

Bibliography

- Brad Solomon – “Async IO in Python: A Complete Walkthrough”, Real Python

- Jim Anderson – “Speed Up Your Python Program With Concurrency”, Real Python

- Miguel Grinberg – “Asynchronous Python for the Complete Beginner”, PyCon 2017, Portland, Oregon