Ivan Celikovic

PRINCIPAL DATA ENGINEER

It seems nowadays everyone wants to work agile, deliver working and tested software in short time frames, continuously learn from each sprint and encourage co-operation with the ultimate goal to respond first to the market requirements.

Among the technology trends and practices that have emerged in the last couple of decades, Agile Development is probably the most talked about methodology. It seems nowadays everyone wants to work agile, deliver working and tested software in short time frames, continuously learn from each sprint and encourage co-operation with the ultimate goal to respond first to the market requirements.

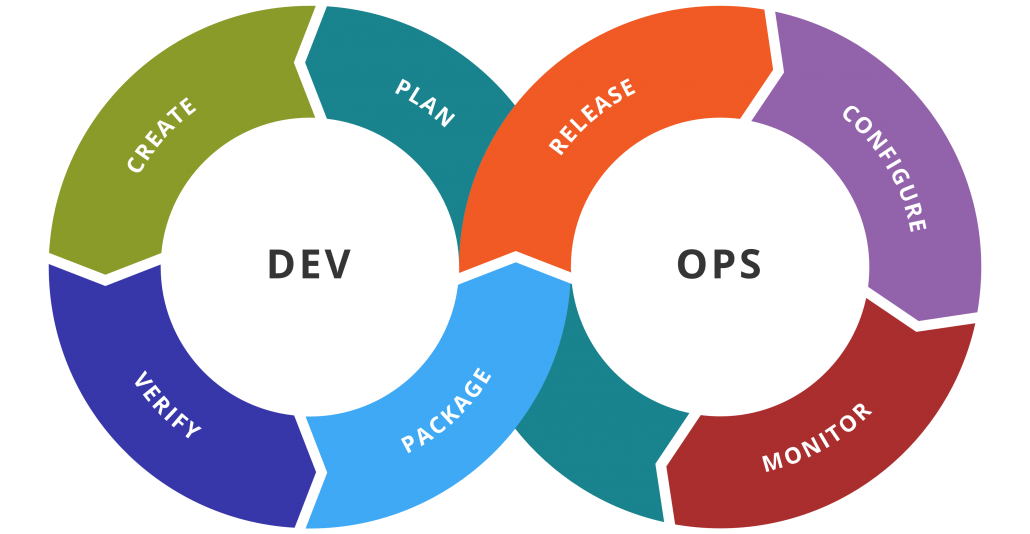

And whilst this is easier to accomplish in smaller developer teams, it causes quite a few problems in Enterprise Environments. Hence, DevOps was devised as an extension to Agile Software Development as a practice aiming to unify software development and operations. Its main goal is to instigate a better understanding and everyday collaboration between developers (all the people involved in developing the product) and operations (operations staff, systems engineers, DBAs, system administrators, release engineers, network engineers and security staff) in order to allow agile teams to automate building, testing and deployment of software code as much as possible all the while keeping the number of errors to a minimum. DevOps envelops the whole application lifecycle, from the idea that starts in someone’s head all the way to the product that goes to production and is monitored daily. For that purpose DevOps phases are linked in a looping Toolchain that tries to visualize the whole process.

By simplifying and automating operations and maintenance, operation teams gain more time to work on more important things and don’t have to handle daily repetitive tasks and developers get their code delivered quickly and get instant feedback. This results in a substantial acceleration of applications delivery.

However implementing DevOps in an already existing environment with a rigid mindset isn’t a small task. It is a very complex issue and can’t be done overnight. The whole system has to be thoroughly analyzed, the idea and its benefits have to be explained to all stakeholders and as with all things, it is best to start small. Once the process is flushed and you start getting value, you can start applying it everywhere.

In the last few years DevOps has gained momentum in coding development environments. Versioning programming code is something that has been done for years and with new version control systems it is even easier. There are many tools used in DevOps for building the code and for automatizing unit, smoke, regression and other kind of tests. Consequently, many organizations have implemented DevOps in their system, both out of the box solutions or customized ones.

But what about DevOps and DWH?

DevOps and DWH

When you think about basic tools used in DWH, the first things that come to mind are a data modeling tool and an ETL tool. Every member of a Data Warehouse department uses these products in their daily work and makes changes to the development environment. Those departments can number from a couple of developers to dozens or hundreds of them, depending on the company size and usually a lot of them work on the same objects. The “code” from ETL tools is usually a complex database holding all kind of metadata and is not very easy to version, while on the other hand Data Modeler scripts have to be handled very carefully because we can’t afford to drop a table and lose all the data. Testing ETL code is another thing that is very specific and usually has to be handled case by case. It can be done using minus statements that compare expected and actual target data or by checking the logs and calculating expected counts. You won’t find a lot of ETL testing tools, and those that exist require a lot of manual work and configuration to achieve a reasonable test that can be automatized. All of that and more is the reason you won’t find many articles on DevOps implementation in DWH environment.

Still, we tried to devise one such environment and the first version of the resulting solution works pretty well.

Our goal was to achieve both Continuous Integration and Continuous Delivery and make a big step towards Continuous Deployment. Although everything is automatized, we leave room for manual checks of the data resulting from ETL because as we said before, a universal way to test all results from ETL still doesn’t exist and that is why it is a good idea to leave user acceptance testing manual.

We focused on the following core components:

ETL Tool – since we do most our work on Oracle products, we chose ODI12c, specifically newer versions that support Git Integration

Data Modeling Tool – SQL Developer Data Modeler can also be integrated with Git and it is free, so it presented itself as an obvious choice

Database – our DWH finds itself on an Oracle 12c database

Version Control System – we chose to work with Git and from all the web-based Git repository managers (repositories can be in cloud or in an on premise server) we chose GitLab, although GitHub can be used almost identically as well

Automation Server – Jenkins is an open source automation server that almost everyone uses today and with its plugin architecture offers unlimited functionalities

Collaboration Tool – here we chose Trello since we already use it for project management and as a Kanban board

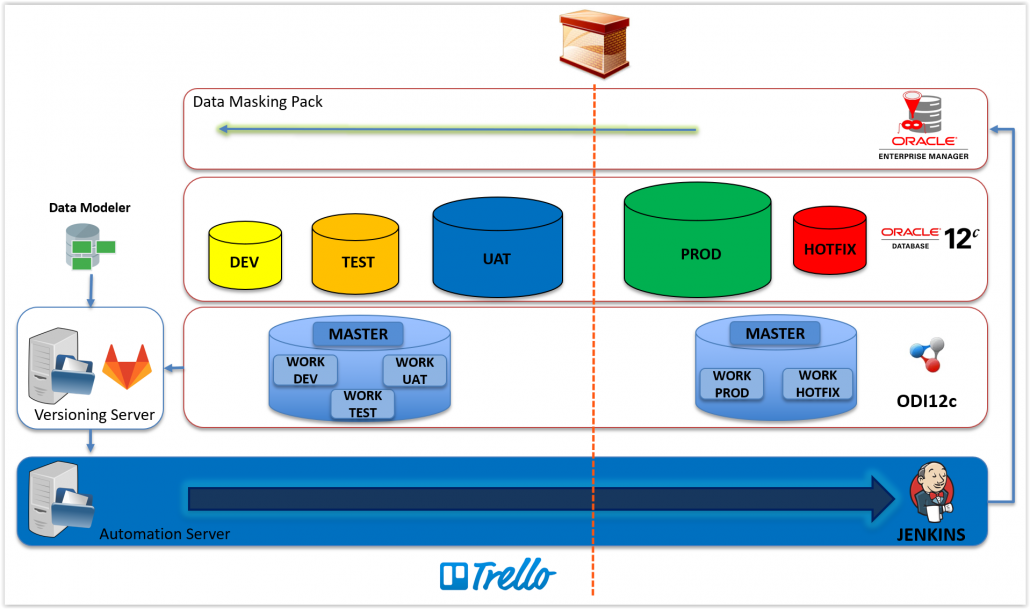

Our goal was to do our development on a single environment and version everything that we do; our ETL repository and Data model scripts. Another thing we had to think of because of the rising shadow of the EU General Data Protection Regulative is having GDPR compliant non production databases. Thankfully, as an extension to Enterprise Manager, Oracle offers the Data Masking pack which can subset and mask our database at source, without the data ever leaving production environment, and create a clone which we can use for development and testing purposes.

This is the architecture of our system:

So how does it work?

We have separated our production environment from the development and test environments. All of the development is done on DEV environment (besides hotfix interventions, which are done on a separate environment, put into production and then replicated to DEV).

With Oracle Data Masking pack we create masking libraries for our data and create workflows that mask and subset the whole DWH at source and create clones which we send to UAT, TEST and DEV environments (they differ in sizes, with a combination of goal based and condition based subsetting we can get the desired environments). These workflows can be scheduled daily/weekly/monthly to run at certain times with Jenkins.

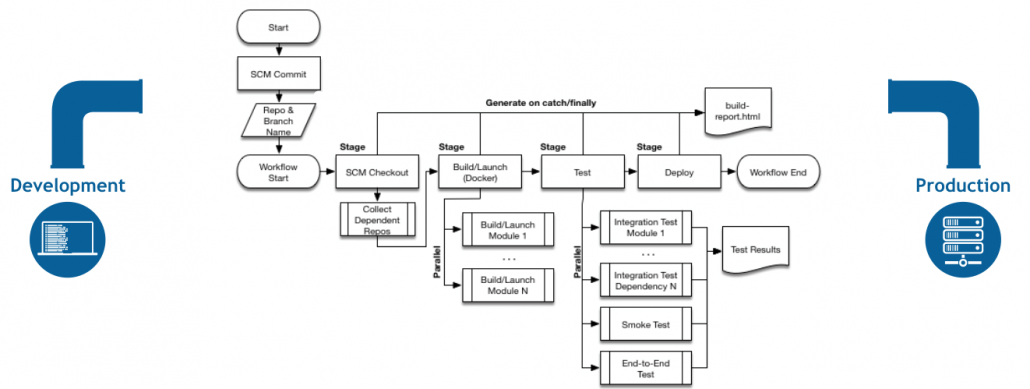

Our developers work on the data model and ETL code which are integrated with our central versioning repository. We won’t delve into branching strategies here and all of the possibilities in ODI, but in general separate developers (or development teams) should work on their own Git repository branches of ODI work repository and merge it to the Git master branch. Those branches should be either in a separate ODI work repository, in a separate database or both, so different teams don’t have conflicts during development (conflicts should be resolved during merge requests). Once our code is merged into the master branch it triggers our predefined Jenkins pipeline.

ODI12c allows us to create deployment archives; separate files for development and execution work repositories so we got both covered. In our case, only DEV and hotfix environments are development work repositories and the others are execution. This doesn’t have to be the case, all of the environments can have development work repositories as well.

Our Jenkins pipelines consists of stages for build and step by step deployment through environments. In those stages we call bash scripts for importing our code/execution file to the environment, (regenerate and) run the scenarios and check that they have finished properly and do some basic tests on the data they produced. There is an additional step after deployment and test on UAT where a manual confirmation is needed in order for the code to be deployed to production. If any step fails, the pipeline is suspended and notifications are sent to involved parties.

We expand our data model in a similar fashion. Once we are satisfied with the newest version, we create two scripts:

- An upgrade script which generates alter/add SQL to get the old version to the new version

- A downgrade script which creates a similar script but for returning our new version to the old one

Here we need to be more careful so there is a step where scripts have to be checked manually before being allowed to execute, because even the slightest mistake can mean dropping a table and losing data (we have backup, but restoring it takes time). This step can be partially automatized with a script that searches for drop commands and compiles a report so we know what to check. When we deploy to non production environment we execute the upgrade script, then the downgrade script, then the upgrade script again. If all goes well, we know that both scripts work correctly and we can rollback whenever we want. On production environment we execute only the upgrade script.

As you might have concluded yourself, Jenkins orchestrates everything here. It is integrated with Trello which handles issues and notifies us via Kanban boards and Outlook messages.

Next steps

With this architecture we automatized everything regarding our core development tasks. So what next?

As you may have noticed, the monitoring part is rudimental, our ultimate goal would be complete monitoring in one spot where we can analyze performance stats for tuning, logs, common errors and bottlenecks and generally have a whole picture of the system.

ETL testing is still in early stages…our goal is to try to completely automatize unit testing, component interface testing, regression testing and performance testing. It certainly isn’t an easy task, but developing a Data Quality module that would be integrated with DevOps and create triggers not only when deploying scenarios but in everyday workloads in production would be a great benefit.

Most Business Intelligence departments consist not only of Data Warehouse but also Reporting and Big Data departments. We could widen this to become a complete DataOps architecture where we would also version and automatize reports and Data Science development.

Docker and other container technologies are starting to become mainstream in DevOps in general and it could also be used here. We could use it for testing, creating self sufficient stateless docker databases which can be reused for testing our ETL and applications. We could package our apps in containers and pass them like that through multiple environments or package toolsets in containers and use them in Data science as a service.

All in all, DevOps and DWH do have a bright future together. Not only that, but there is so much potential for upgrades, integration and custom features that will help any Enterprise environment in their day to day work.

But DevOps is not only helping you reduce time to market and failure rate of new releases, it also helps develop a healthier mindset of collaboration, one in which all of the employees try to understand what others are doing. This leads to everyone taking into consideration all aspects of the software development lifecycle, contributing as best they can and a better and easily maintainable end product.