Simun Sprem, Nikola Tomazin

DATA ENGINEERS

Introduction

In today’s dynamic, data-driven world, it’s hard to keep track of everything that is going on. With a growing number of devices collecting and sending data, governing that data has become absolutely necessary. If you’re lucky, failing to keep data in order only results in huge systems that collect unstructured data, which has to be thoroughly analyzed before it becomes useful. Another possible scenario is data that no longer matches its supposed schema because of constant updates. This in turn pollutes the ingested data and can even cause whole systems to collapse. These problems share a simple solution: Dataphos Schema Registry.

What is the Dataphos Schema Registry?

Dataphos Schema Registry is a cloud-based schema management and message validation system.

Schema management consists of schema registration and versioning, which lets developers standardize schemas across the organization. Message validation is performed by validators that check messages against their given schema. The core components are a server with an HTTP RESTful interface, used to manage and access the schemas, and lightweight message validators.

The Message Validator ensures that your data stays in perfect shape. It actively validates every message against its schema, swiftly routes valid data to its intended destination, and diverts any anomalies to a designated area (the dead letter topic). This meticulous approach keeps data quality high throughout your organization.

By using the Schema Registry to manage the schemas for the data, you can ensure that the data flowing through the pipeline is well-formed and compatible with all the downstream systems, reducing the likelihood of data quality issues and system failures, without affecting the overall throughput. Additionally, by providing a central location for schema definitions, you can improve collaboration and communication between teams working with the same data.

But what is the Dataphos Schema Registry composed of? It is made up of dockerized components, allowing users to run it either on-premises or in the cloud. It is deployed with minimal effort, requiring only a few necessary settings to be configured. It is also modular, meaning it can run on any major cloud service using many different technologies (different brokers, databases, etc.). The recommended way to deploy Dataphos Schema Registry is in the cloud, on a Kubernetes cluster.

How does it work?

Dataphos Schema Registry is composed of two major components, the Registry and the Message Validator, which use a database to store schemas and a message broker to receive and send messages. Since the Schema Registry is modular and supports most popular databases and message brokers, it’s up to the user to pick the ones they want to use.

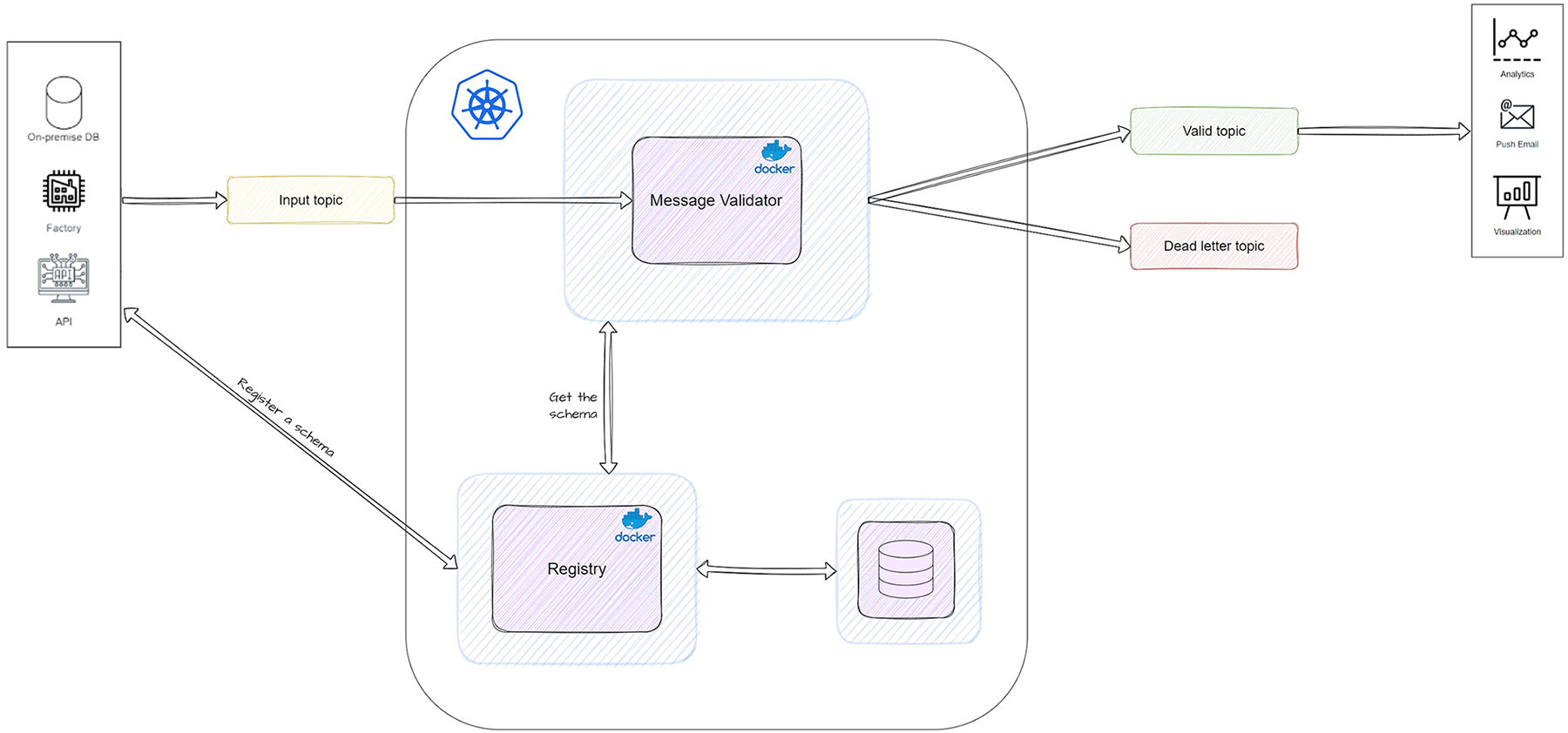

The following diagram represents the high-level Dataphos Schema Registry architecture:

Dataphos Schema Registry Architecture

The diagram shows the Registry and the Message Validator components as Docker containers running inside a Kubernetes cluster, with the database deployed in the cluster as well. The message broker topics in use are the input topic, the valid topic and the dead letter topic, which transfer data from the producer (on the left) to the consumer (on the right).

The steps in the pipeline are as follows:

- The source systems create a schema (either automatically or manually) and register it in the Schema Registry, which returns an ID and a version.

- The source systems produce data in a particular format, such as Avro, JSON, Protobuf, CSV, or XML. Before producing data, they insert the ID and version received in the previous step into the message metadata.

- When the data gets ingested by the streaming pipeline, it is actively validated against the schema definition to ensure that it conforms to the expected structure and data types.

- Depending on the validation result, the data will be either sent to the valid topic, where the consumers are subscribed, or to the dead letter topic, where the invalid data will reside and wait for manual inspection.
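As a rough illustration of the second step, the Go sketch below shows a producer attaching the schema reference to a message and a validator reading it back. The `Message` type and the header keys `schemaId`, `versionId` and `format` are hypothetical placeholders (Kafka headers or Pub/Sub attributes would play this role in practice); the actual metadata keys are defined in the Schema Registry documentation.

```go
package main

import "fmt"

// Message is a hypothetical broker-agnostic message: a raw payload plus
// string headers (Kafka headers, Pub/Sub attributes, etc. map onto this).
type Message struct {
	Payload []byte
	Headers map[string]string
}

// Hypothetical header keys; the real key names come from the
// Schema Registry documentation, not from this sketch.
const (
	headerSchemaID = "schemaId"
	headerVersion  = "versionId"
	headerFormat   = "format"
)

// newMessage builds a message whose metadata carries the schema ID and
// version returned by the Registry at registration time.
func newMessage(payload []byte, schemaID, version, format string) Message {
	return Message{
		Payload: payload,
		Headers: map[string]string{
			headerSchemaID: schemaID,
			headerVersion:  version,
			headerFormat:   format,
		},
	}
}

// schemaRef reads the schema reference back out of the headers, as a
// validator would; the last result is false if any header is missing.
func schemaRef(m Message) (string, string, string, bool) {
	id, okID := m.Headers[headerSchemaID]
	version, okVer := m.Headers[headerVersion]
	format, okFmt := m.Headers[headerFormat]
	return id, version, format, okID && okVer && okFmt
}

func main() {
	msg := newMessage([]byte(`{"id": 1}`), "42", "3", "json")
	id, ver, format, ok := schemaRef(msg)
	fmt.Println(id, ver, format, ok) // 42 3 json true
}
```

A message that arrives without these headers cannot be matched to a schema, which is why the validator in this sketch reports a missing reference instead of guessing one.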

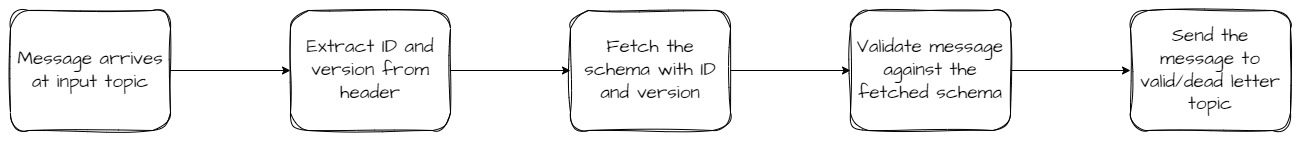

The visual of the described process is shown below.

Message Validation

Overview of the components

The Schema Registry is mostly written in Go (GoLang) to increase the components’ speed while keeping them as lightweight as possible. As a result, the Schema Registry has minimal impact on data throughput.

The following sections describe the components and their responsibilities in more detail.

Registry

The Registry component performs the following:

- Schema registration – enables users to register a new schema

- Schema canonicalization – schemas that are semantically the same but differ in the order of their fields are treated as the same schema

- Schema updating – adding a new version of an existing schema

- Schema compatibility – checks if the schema that’s being updated is compatible with the previous schema(s), depending on the compatibility mode.

- Schema validation – checks if the schema that’s being registered is of the correct format syntactically and semantically.

- Schema retrieval – returns the schema with the specific ID and version

- Schema deletion – deletes a whole schema or only specified versions of it

- Schema search – search schemas by name, description, attributes, format, etc.

A list of REST API requests for those tasks can be found in the documentation.
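Canonicalization can be illustrated for JSON-based schemas. The sketch below is one possible approach, not necessarily how the Registry does it: it decodes a schema and re-encodes it with Go’s `encoding/json`, which writes map keys in sorted order, so two schemas that differ only in key order or whitespace produce the same canonical string. The function names are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// canonicalJSON re-encodes a JSON document so that object keys are sorted
// and insignificant whitespace is dropped. encoding/json marshals map keys
// in sorted order, which is what makes this work.
func canonicalJSON(schema string) (string, error) {
	var v interface{}
	if err := json.Unmarshal([]byte(schema), &v); err != nil {
		return "", err
	}
	out, err := json.Marshal(v)
	if err != nil {
		return "", err
	}
	return string(out), nil
}

// sameSchema reports whether two JSON schemas are equal after canonicalization.
func sameSchema(a, b string) (bool, error) {
	ca, err := canonicalJSON(a)
	if err != nil {
		return false, err
	}
	cb, err := canonicalJSON(b)
	if err != nil {
		return false, err
	}
	return ca == cb, nil
}

func main() {
	a := `{"type": "object", "properties": {"id": {"type": "integer"}, "name": {"type": "string"}}}`
	b := `{"properties": {"name": {"type": "string"}, "id": {"type": "integer"}}, "type": "object"}`
	same, err := sameSchema(a, b)
	fmt.Println(same, err) // true <nil>
}
```

This only normalizes object keys; array order is preserved, so formats that list fields in an array, such as Avro records, would need format-specific handling.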

The Registry, the main component of the Schema Registry product, is entirely independent of the data-streaming platform’s implementation. It is implemented as a REST API that exposes endpoints (via URL) to clients and communicates over HTTP.

When the Registry receives an HTTP GET request, it retrieves the schema with the ID and version specified in the request URL (if the schema exists). The schemas themselves can be stored in any type of database, whether as tables in standard SQL databases such as Oracle or PostgreSQL, or in NoSQL databases like MongoDB. These databases can be either on-premises or hosted by a cloud provider.
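A minimal sketch of this retrieval path is an HTTP handler that looks up a schema by ID and version and returns 404 when it does not exist. The `/schemas/{id}/versions/{version}` path, the in-memory `memStore` (standing in for PostgreSQL, MongoDB or another database) and all function names are assumptions for illustration; the Registry’s actual routes are listed in its documentation.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strconv"
	"strings"
	"sync"
)

// schemaKey identifies one version of one schema.
type schemaKey struct {
	ID      string
	Version int
}

// memStore is an in-memory stand-in for the schema database.
type memStore struct {
	mu      sync.RWMutex
	schemas map[schemaKey]string
}

func (s *memStore) Get(id string, version int) (string, bool) {
	s.mu.RLock()
	defer s.mu.RUnlock()
	spec, ok := s.schemas[schemaKey{id, version}]
	return spec, ok
}

// parseSchemaPath extracts the ID and version from a hypothetical
// /schemas/{id}/versions/{version} path.
func parseSchemaPath(path string) (string, int, bool) {
	parts := strings.Split(strings.Trim(path, "/"), "/")
	if len(parts) != 4 || parts[0] != "schemas" || parts[1] == "" || parts[2] != "versions" {
		return "", 0, false
	}
	v, err := strconv.Atoi(parts[3])
	if err != nil {
		return "", 0, false
	}
	return parts[1], v, true
}

// getSchemaHandler serves GET requests and returns 404 for unknown schemas.
func getSchemaHandler(store *memStore) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodGet {
			http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
			return
		}
		id, version, ok := parseSchemaPath(r.URL.Path)
		if !ok {
			http.Error(w, "bad path", http.StatusBadRequest)
			return
		}
		spec, found := store.Get(id, version)
		if !found {
			http.Error(w, "schema not found", http.StatusNotFound)
			return
		}
		w.Header().Set("Content-Type", "application/json")
		io.WriteString(w, spec)
	}
}

func main() {
	store := &memStore{schemas: map[schemaKey]string{{"42", 1}: `{"type":"object"}`}}
	srv := httptest.NewServer(getSchemaHandler(store))
	defer srv.Close()

	resp, err := http.Get(srv.URL + "/schemas/42/versions/1")
	if err != nil {
		panic(err)
	}
	defer resp.Body.Close()
	body, _ := io.ReadAll(resp.Body)
	fmt.Println(resp.StatusCode, string(body)) // 200 {"type":"object"}
}
```

Because the store sits behind a small `Get` method, swapping the in-memory map for a SQL or NoSQL backend would not change the handler, which mirrors the database independence described above.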

When a new schema is registered, its compatibility and validity modes need to be specified. Users can choose one of the following compatibility types: backward, backward-transitive, forward, forward-transitive, full, full-transitive and none. The validity types are full (syntax and semantics), syntax-only and none. The compatibility types are described in the following table:

| Compatibility Type | Changes allowed | Checked against | Upgrade first |
|---|---|---|---|
| BACKWARD | Delete fields, add optional fields | Last version | Consumers |
| BACKWARD_TRANSITIVE | Delete fields, add optional fields | All previous versions | Consumers |
| FORWARD | Add fields, delete optional fields | Last version | Producers |
| FORWARD_TRANSITIVE | Add fields, delete optional fields | All previous versions | Producers |
| FULL | Add optional fields, delete optional fields | Last version | Any order |
| FULL_TRANSITIVE | Add optional fields, delete optional fields | All previous versions | Any order |
| NONE | All changes are accepted | Compatibility checking disabled | Depends |
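To make the table concrete, here is a minimal Go sketch of how such rules could be enforced. It assumes a heavily simplified model in which a schema is just a set of fields marked required or optional; `Schema`, `compatible` and the other names are hypothetical and not taken from the Dataphos codebase, and real checks are format-specific (types, defaults, nesting).

```go
package main

import (
	"fmt"
	"strings"
)

// Schema is a deliberately simplified schema: field name -> required?
// Real checks would also compare types, defaults and nested structures.
type Schema map[string]bool

// requiredSubset reports whether every required field of a is also
// required in b.
func requiredSubset(a, b Schema) bool {
	for field, required := range a {
		if required && !b[field] {
			return false
		}
	}
	return true
}

// backward: consumers on next can read data written with prev, so next may
// delete fields or add optional ones, but may not add required fields.
func backward(prev, next Schema) bool { return requiredSubset(next, prev) }

// forward: consumers on prev can read data written with next, so next may
// add fields or delete optional ones, but may not delete required fields.
func forward(prev, next Schema) bool { return requiredSubset(prev, next) }

// compatible checks next against history (oldest first) using the mode
// names from the table above.
func compatible(mode string, history []Schema, next Schema) (bool, error) {
	var check func(p, n Schema) bool
	switch mode {
	case "NONE":
		return true, nil
	case "BACKWARD", "BACKWARD_TRANSITIVE":
		check = backward
	case "FORWARD", "FORWARD_TRANSITIVE":
		check = forward
	case "FULL", "FULL_TRANSITIVE":
		check = func(p, n Schema) bool { return backward(p, n) && forward(p, n) }
	default:
		return false, fmt.Errorf("unknown compatibility mode %q", mode)
	}
	against := history // *_TRANSITIVE: all previous versions
	if !strings.HasSuffix(mode, "_TRANSITIVE") && len(history) > 0 {
		against = history[len(history)-1:] // last version only
	}
	for _, prev := range against {
		if !check(prev, next) {
			return false, nil
		}
	}
	return true, nil
}

func main() {
	v1 := Schema{"id": true, "name": true}
	v2 := Schema{"id": true, "name": true, "email": false} // adds an optional field
	v3 := Schema{"id": true, "email": false}                // deletes "name"

	ok, _ := compatible("BACKWARD", []Schema{v1, v2}, v3)
	fmt.Println("BACKWARD:", ok) // BACKWARD: true (deleting fields is allowed)
	ok, _ = compatible("FORWARD", []Schema{v1, v2}, v3)
	fmt.Println("FORWARD:", ok) // FORWARD: false ("name" was required)
}
```

The transitive modes differ from their counterparts only in how many earlier versions are consulted: if an older version was registered under NONE, a FORWARD check against the last version can pass while FORWARD_TRANSITIVE fails.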

Message Validator

Before the messages are sent to the system, their schema needs to be registered in the database, whether it is an entirely new schema or a new version of an existing one. Each of the messages being sent to the input topic needs to have its metadata enriched with the schema information, which includes the ID, version and the message format.

The Message Validator component performs the following:

- Reading messages from the input topic

- Message schema retrieval (and caching) from the Registry using the message metadata (header)

- Message validation using the retrieved schema

- Message transmission depending on its validation result
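Schema retrieval with caching could look roughly like the sketch below: a thread-safe memoizing wrapper around the Registry call, so that a stream of messages sharing one schema triggers a single lookup. `SchemaCache`, `FetchFunc` and `errNotRegistered` are hypothetical names, and the fetch function stands in for the real HTTP request to the Registry.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// errNotRegistered is a hypothetical error returned for unknown schemas.
var errNotRegistered = errors.New("schema not registered")

// FetchFunc stands in for the HTTP call the validator makes to the Registry.
type FetchFunc func(id, version string) (string, error)

type cacheKey struct{ ID, Version string }

// SchemaCache memoizes successful schema lookups per (ID, version). It
// assumes a registered version never changes; deleting versions would
// require cache invalidation.
type SchemaCache struct {
	mu      sync.Mutex
	entries map[cacheKey]string
	fetch   FetchFunc
}

func NewSchemaCache(fetch FetchFunc) *SchemaCache {
	return &SchemaCache{entries: make(map[cacheKey]string), fetch: fetch}
}

// Get returns a cached schema or fetches and caches it. Failed lookups are
// not cached, so a schema registered later will still be found. Holding the
// lock during fetch keeps the sketch simple but serializes cache misses.
func (c *SchemaCache) Get(id, version string) (string, error) {
	c.mu.Lock()
	defer c.mu.Unlock()
	key := cacheKey{id, version}
	if s, ok := c.entries[key]; ok {
		return s, nil
	}
	s, err := c.fetch(id, version)
	if err != nil {
		return "", err
	}
	c.entries[key] = s
	return s, nil
}

func main() {
	calls := 0
	cache := NewSchemaCache(func(id, version string) (string, error) {
		calls++
		if id != "42" {
			return "", errNotRegistered
		}
		return `{"type":"object"}`, nil
	})
	for i := 0; i < 3; i++ {
		cache.Get("42", "1")
	}
	_, err := cache.Get("7", "1")
	fmt.Println(calls, err) // 2 schema not registered
}
```

If concurrent misses became a bottleneck, the lock could be narrowed and duplicate fetches collapsed, for instance with `golang.org/x/sync/singleflight`.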

Message Validator workflow

The role of the Message Validator component is to route the messages read from the input topic to their destination, which it does with the help of the Registry component. The Registry returns to the Message Validator the schema, stored in the database, with the ID and version specified in the message’s header (if such a schema exists). If the message is successfully validated against the schema, it is routed to the topic for valid messages. If the data doesn’t fit the schema, the schema is not registered in the database, or the data contains syntax errors, the message is sent to the dead letter topic, where the user can analyze the cause of the failed validation.
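The routing decision can be sketched as a single function that covers the three failure cases (unregistered schema, syntax error, data not fitting the schema). This is a simplified, hypothetical model: payloads are JSON only, a schema is reduced to a list of required field names, and `route`, `LookupFunc` and the header keys are illustrative names rather than the Message Validator’s actual code.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Message is a payload plus broker headers carrying the schema reference.
type Message struct {
	Payload []byte
	Headers map[string]string
}

// LookupFunc stands in for the Registry call; it returns the required field
// names of a registered schema, or false if no such schema exists.
type LookupFunc func(id, version string) ([]string, bool)

// route returns the destination topic ("valid" or "dead-letter") and, for
// dead-lettered messages, the reason validation failed.
func route(m Message, lookup LookupFunc) (string, string) {
	required, ok := lookup(m.Headers["schemaId"], m.Headers["versionId"])
	if !ok {
		return "dead-letter", "schema not registered"
	}
	var doc map[string]interface{}
	if err := json.Unmarshal(m.Payload, &doc); err != nil {
		return "dead-letter", "malformed payload: " + err.Error()
	}
	for _, field := range required {
		if _, present := doc[field]; !present {
			return "dead-letter", "missing required field " + field
		}
	}
	return "valid", ""
}

func main() {
	registry := map[string][]string{"42/1": {"id", "name"}}
	lookup := func(id, version string) ([]string, bool) {
		fields, ok := registry[id+"/"+version]
		return fields, ok
	}
	headers := map[string]string{"schemaId": "42", "versionId": "1"}
	unknown := map[string]string{"schemaId": "99", "versionId": "1"}

	for _, m := range []Message{
		{[]byte(`{"id": 1, "name": "a"}`), headers}, // valid
		{[]byte(`{"id": 2}`), headers},              // does not fit the schema
		{[]byte(`{"id": 3,`), headers},              // syntax error
		{[]byte(`{"id": 4}`), unknown},              // schema not registered
	} {
		topic, reason := route(m, lookup)
		fmt.Println(topic, reason)
	}
}
```

Returning a reason alongside the topic reflects the text’s point that users inspect the dead letter topic to find out why validation failed; in a real deployment that reason would likely travel as message metadata.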

Differentiation and Features

Schema Registry comes with many valuable features. Let’s take a closer look at those that set our product apart:

- Active message validation: While other schema registries only manage and store schemas, the Dataphos Schema Registry also actively validates messages, making it a good fit for businesses with constantly changing and unreliable producers.

- Performance: Speed matters in data management. Even though it is middleware, Schema Registry is engineered for high performance, enabling rapid data processing with minimal impact on latency to deliver a seamless user experience.

- Compatibility and validity checks: Say goodbye to compatibility issues and invalid data. Our solution offers robust checks to ensure that your data schemas easily align with your systems.

- Cloud support: We understand that businesses operate across diverse cloud environments. Schema Registry is cloud-agnostic. Designed with versatility in mind, it integrates with Kubernetes, so it runs both in cloud deployments and in on-premises setups.

- Data Mesh ready: Embrace the future of data with ease. Schema Registry is Data Mesh ready, allowing each team to have its dedicated message validator rather than relying on a centralized system.

- Alerting: When a schema changes or when a specified threshold of messages reaches the dead letter topic, Schema Registry proactively triggers email or Teams alerts. This ensures swift notification and allows teams to promptly address evolving schema structures or potential issues indicated by messages accumulating in the dead letter topic.

- Wide range of message brokers and databases: Schema Registry works with a variety of message brokers (Apache Kafka, Google Pub/Sub, Azure Service Bus, Azure Event Hubs, and less common ones such as NATS and Pulsar), providing compatibility with different platforms to meet diverse needs. Notably, it also supports protocol changes: it can validate messages from producers using one broker, such as Kafka, and send the validated messages to a different broker, such as Pub/Sub. Moreover, the Schema Registry can store schemas in various databases, ranging from traditional solutions like PostgreSQL to cloud-specific databases like Firebase and Cosmos DB.

- Support for multiple data formats: JSON, CSV, XML, Avro and Protobuf.
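The dead letter threshold from the Alerting feature can be sketched as a small counter that fires a notification each time the threshold is reached. `DeadLetterAlerter` and its `notify` callback (a stand-in for an email or Teams integration) are hypothetical; the sketch also assumes a simple running count, whereas a real alerting rule may well use a time window.

```go
package main

import (
	"fmt"
	"sync"
)

// DeadLetterAlerter counts messages routed to the dead letter topic and
// calls notify each time the count reaches the threshold, then resets.
type DeadLetterAlerter struct {
	mu        sync.Mutex
	count     int
	threshold int
	notify    func(count int) // stand-in for an email or Teams alert
}

// Record registers one dead-lettered message.
func (a *DeadLetterAlerter) Record() {
	a.mu.Lock()
	a.count++
	n := a.count
	fire := n >= a.threshold
	if fire {
		a.count = 0
	}
	a.mu.Unlock()
	if fire {
		a.notify(n) // called outside the lock so a slow notifier does not block Record
	}
}

func main() {
	alerts := 0
	a := &DeadLetterAlerter{threshold: 3, notify: func(n int) {
		alerts++
		fmt.Printf("alert: %d messages reached the dead letter topic\n", n)
	}}
	for i := 0; i < 7; i++ {
		a.Record()
	}
	fmt.Println("alerts sent:", alerts) // alerts sent: 2
}
```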

Benefits of using the Dataphos Schema Registry

- Standardization of system updates: The Schema Registry helps you define a standardized process of updating producers and consumers. If a producer wants to update the data format, it first needs to update its schema in the Schema Registry. By doing so, you no longer have to fear the system breaking due to message changes.

- Active validation component: Ensures data integrity by verifying the accuracy and completeness of incoming messages, reducing the risk of errors and malicious inputs. Validating messages also strengthens system security, since malformed or unexpected payloads are rejected before they reach downstream systems. Additionally, message validation improves overall system reliability by identifying and handling unexpected data, promoting smoother operations and better user experiences.

- Cost-consciousness: Without a centralized and structured schema repository, data becomes inconsistent because some producers update their schema without notifying the consumers, leaving consumers without an understanding of the data. Also, without schema compatibility checks, a message’s new version might not be compatible with the previous one. These problems, and many others, result in the main system collecting data that either has to be thoroughly cleaned (costing time and money) before it can be processed, or is unusable altogether.

- Ease of deployment: Schema Registry was developed with the user in mind from the start. As a result, deploying it is as easy as running a few scripts. All a user must do is specify the message broker and database types and their addresses, and the Schema Registry is ready to be deployed!

- Risk aversion: When messages go directly from producers to consumers, a newly evolved schema version might be incompatible with the schema the consumer expects, which can cause the consumer to process the data incorrectly. For example, the consumer might try to reference a field that was removed in the latest version, causing it to break. Likewise, a field might have been changed from an integer to a string; when the consumer reads that field, it expects an integer, and the resulting type mismatch can crash the consumer.

- Security: Schema Registry modules are designed to work with popular security protocols such as TLS and Kerberos, and they also support encryption. These protocols are essential because they allow users to authenticate with the Schema Registry and exchange messages securely.

- First step for DQ analysis: In essence, Schema Registry starts the process of improving data quality (DQ): instead of cleaning and adjusting data once it is in the system, it prevents invalid data from entering in the first place by filtering out invalid messages.
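The integer-to-string example from the Risk aversion point is easy to reproduce. In this Go sketch, a hypothetical consumer decodes messages into a struct written for the old schema; when a producer starts sending `quantity` as a string, `encoding/json` returns a `*json.UnmarshalTypeError`. Whether that crashes the consumer depends on how it handles the error. With the Message Validator in place, such a message would be routed to the dead letter topic instead, as long as it still references a schema version that declares `quantity` as an integer. `Order`, `decodeOrder` and `isTypeMismatch` are illustrative names.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// Order is a hypothetical consumer-side struct written against the old
// schema, in which "quantity" was an integer.
type Order struct {
	ID       string `json:"id"`
	Quantity int    `json:"quantity"`
}

// decodeOrder is what a naive consumer might do with every message.
func decodeOrder(payload []byte) (Order, error) {
	var o Order
	err := json.Unmarshal(payload, &o)
	return o, err
}

// isTypeMismatch reports whether err was caused by a JSON value whose type
// does not match the Go field it is decoded into.
func isTypeMismatch(err error) bool {
	var typeErr *json.UnmarshalTypeError
	return errors.As(err, &typeErr)
}

func main() {
	oldMsg := []byte(`{"id": "a1", "quantity": 5}`)
	newMsg := []byte(`{"id": "a2", "quantity": "5"}`) // producer changed int -> string

	o, err := decodeOrder(oldMsg)
	fmt.Println(o.Quantity, err) // 5 <nil>

	_, err = decodeOrder(newMsg)
	// A consumer that treats this error as fatal would stop here.
	fmt.Println(isTypeMismatch(err), err)
}
```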

Try Schema Registry – Experience Structured Data!

Are you ready to experience the benefits of Dataphos Schema Registry? To learn more about Syntio and how we can help you on your data journey, head over to our homepage. To learn more about Schema Registry and how to get started, visit the Schema Registry documentation page.

Both community and enterprise versions of Schema Registry are at your disposal, with the enterprise edition offering round-the-clock support, access to new feature requests, and valuable assistance for developing use cases to drive your business forward.

We’re excited about Schema Registry’s potential to help companies maintain clean and organized data storage, and we hope you are too. Thanks for taking the time to read about our new product; we look forward to hearing how Schema Registry has made a difference for you!