dataphos

An enterprise-class, real-time data platform enabling transactional, operational and analytic business applications.

Runs anywhere, on any cloud or on-prem.

DATAPHOS ingests, prepares and serves high-quality, actionable data in real time to data consumers: transactional systems and analytics and data science users.

It enables easy creation of new value-added digital products.

DATAPHOS lets teams focus on business outcomes instead of the underlying technology.

Advantages over Existing Solutions and Market Practices

Not Dependent on Data Engineers

No need to invest in custom development, large data engineering teams or costly, outdated data solutions.

Minimum Time to Effective Data Use

Out-of-the-box, ready-to-use components enabling fast deployment.

Data is ingested, transformed and ready to use in a matter of days rather than months or years.

Best-in-Class Architecture

Based on years of experience with enterprise data architecture and building cloud data platforms across industries.

Avoids pitfalls and architectural mistakes of custom development that starts from legacy source systems as a base.

State-of-the-Art Engineering

Data decoupled from the systems that create it

Enables immediate access to data, without the need to modernize existing or legacy systems.

Results in far less dependency management – usually the biggest headache for any technology project.

Read once, consume many times

Data is read only once, permanently stored in one place and made available to multiple consumers for concurrent use.

Reduces load pressure on source systems. Resolves the problem of point-to-point integration.

Low-code, ready-to-use

Immediate deployment.

Minimizes cost and time to value for all data use.

Resource optimized and scalable

Superior performance: an order of magnitude improvement in data ingestion speed over CDC or ETL/ELT tools.

Low cloud/server resource usage. Horizontally and vertically scalable.

Security and data privacy

Data encrypted at publishing. Can be used in private networks and over public internet.

Supports PII handling and the right to be forgotten.

Community Edition

dataphos-publisher

dataphos-schemaregistry

dataphos-persistor

Available for

Google Cloud Platform and Microsoft Azure

Free for Commercial Use

Supported by the Community

Enterprise Edition (Coming Soon)

dataphos-publisher

dataphos-schemaregistry

dataphos-persistor

dataphos-API gateway

dataphos-management console

dataphos-DML

dataphos-SSO

dataphos-universal consumer

Available for

Google Cloud Platform and Microsoft Azure

Enterprise Support 24×7

Implementation Services

New Feature Requests

Enterprise Components

Use Case Development

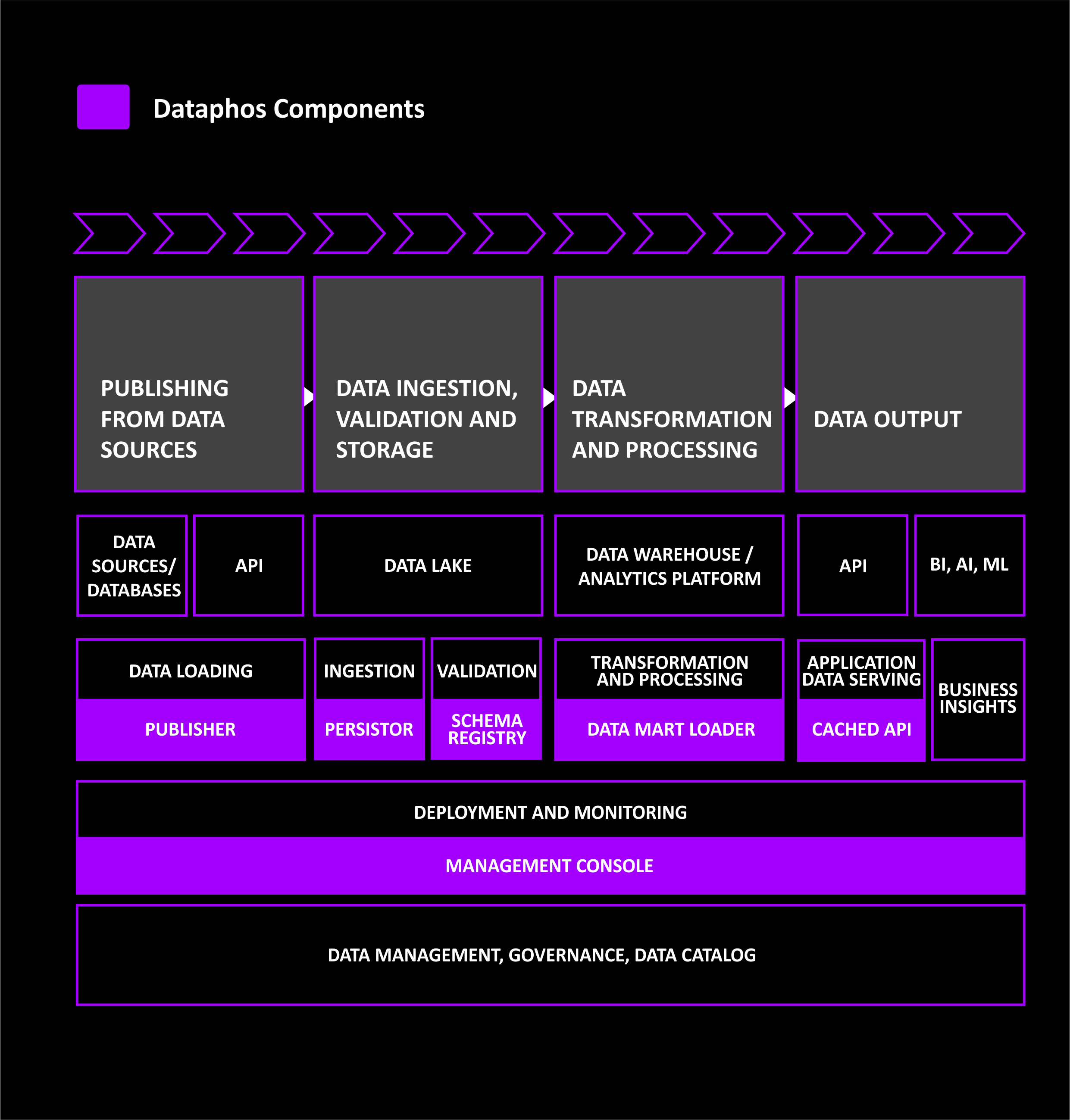

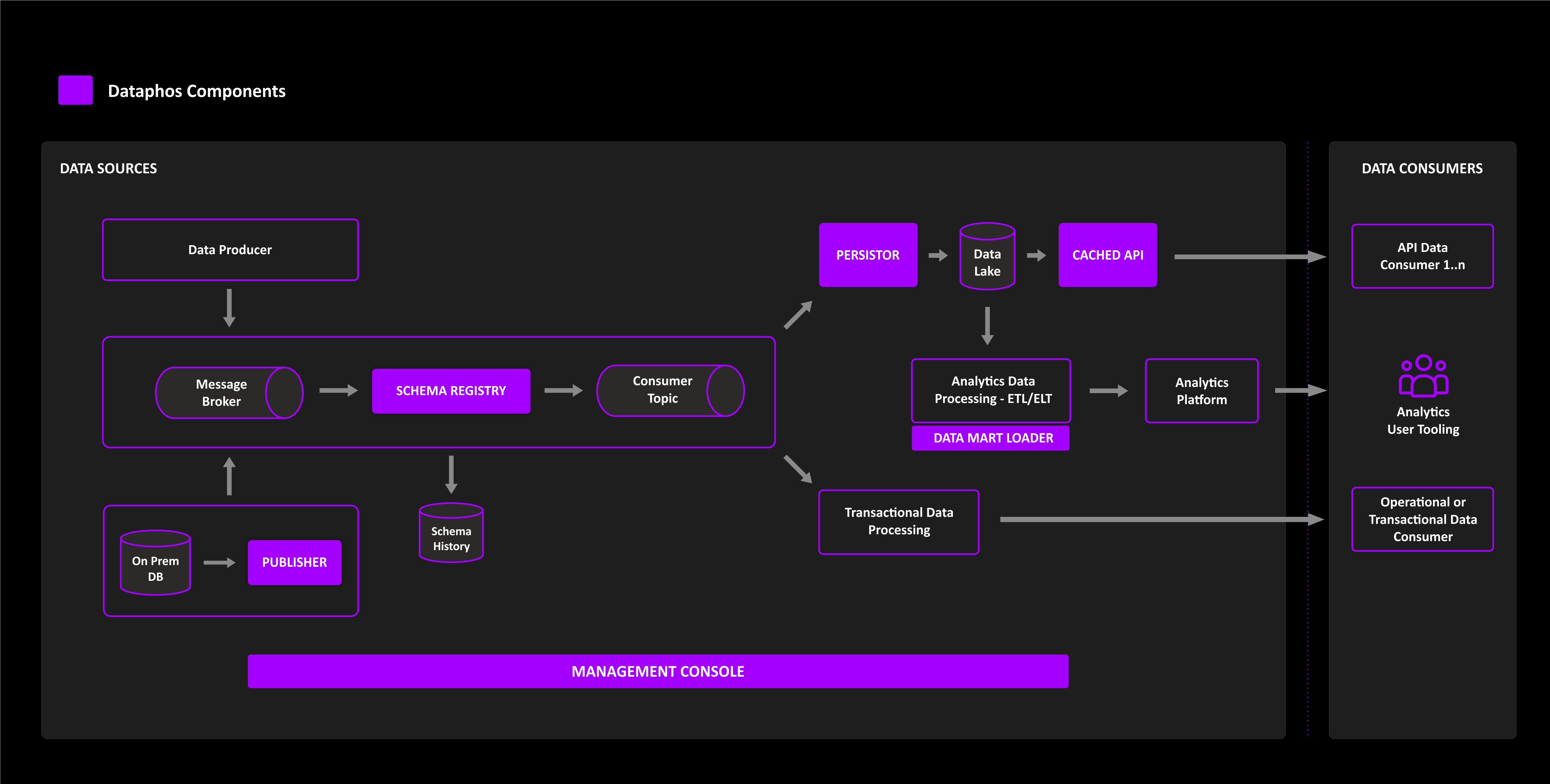

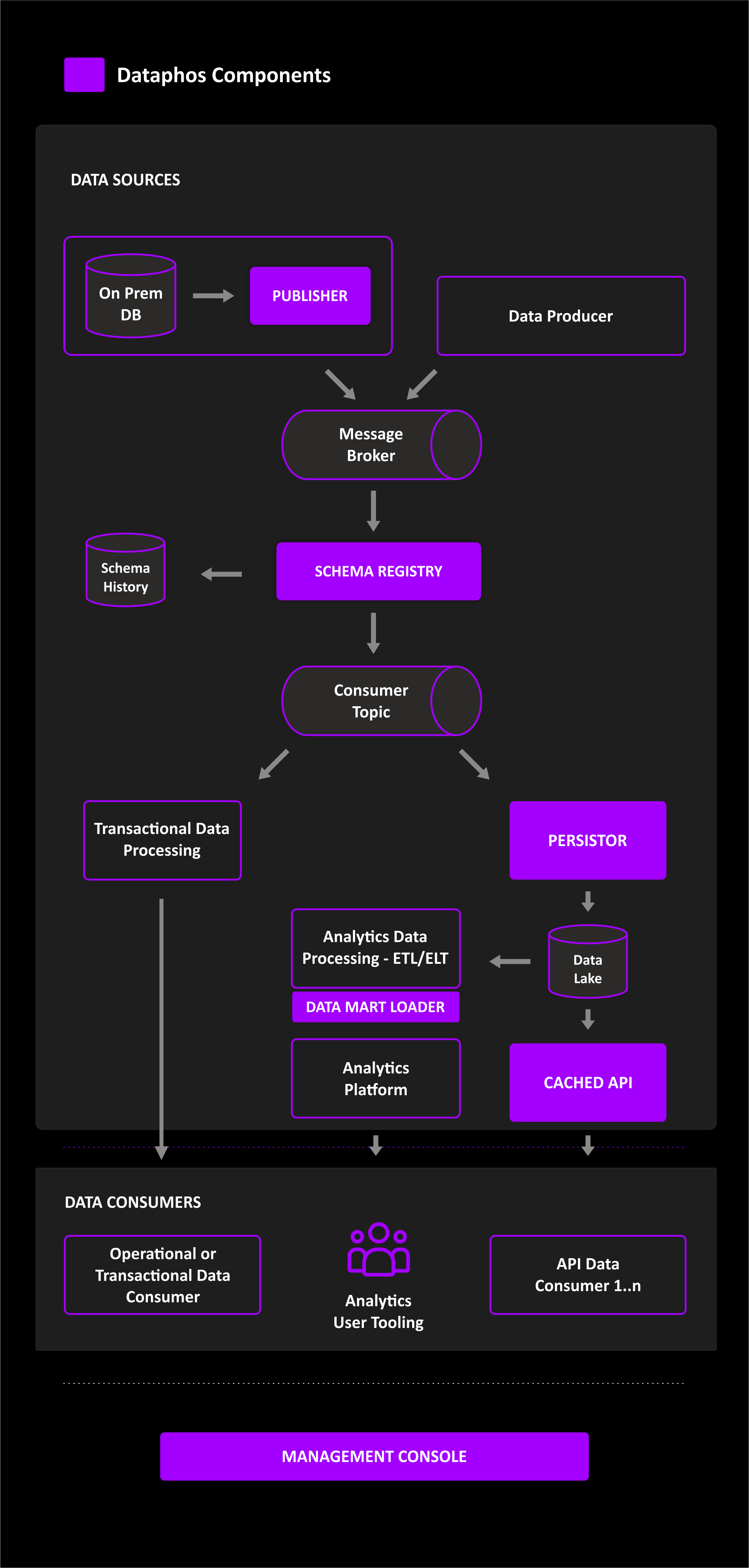

Dataphos Components

Publisher

Real-time Business Object Publisher

Based on the Create-Transform-Publish at source pattern. Uses the RDBMS to transform data into structured business objects at source.

Data is formatted, serialized, compressed and encrypted. Compression significantly reduces network traffic.

Strong security: can be used over both the public internet and private networks.
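
As a rough illustration of the serialize-and-compress step described above, the following Python sketch packs a business object into JSON and compresses it with zlib. The `pack` and `unpack` helpers and the sample `order` object are hypothetical and are not part of the Dataphos Publisher; encryption is left out because it would require a third-party library.

```python
import json
import zlib


def pack(business_object: dict) -> bytes:
    """Serialize a business object to JSON, then compress the bytes."""
    serialized = json.dumps(business_object, sort_keys=True).encode("utf-8")
    return zlib.compress(serialized)


def unpack(payload: bytes) -> dict:
    """Reverse of pack: decompress, then deserialize."""
    return json.loads(zlib.decompress(payload).decode("utf-8"))


# Hypothetical business object assembled at the source system.
order = {
    "order_id": 1001,
    "customer": {"id": 7, "name": "Example Customer"},
    "lines": [{"sku": "A-1", "qty": 2, "price": 9.99}] * 50,
}

raw_size = len(json.dumps(order, sort_keys=True).encode("utf-8"))
packed = pack(order)
```

Because the sample object contains many repeated fields, the packed payload is smaller than the raw JSON, which is the effect on network traffic the text refers to.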

Schema Registry

Schema Registry Validator

Validates message schema, enables schema registration, versioning and recovery.

Interprets the incoming data flow and adjusts the schema automatically. Categorizes previously accumulated data in the new schema. All previous schema versions are stored, so an earlier version can be restored.

Supports both binary (Avro, Protobuf) and text (JSON, XML, CSV) formats.
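
The registration, versioning and validation ideas above can be sketched with a toy in-memory registry. This is only an assumption-laden illustration: the `SchemaRegistry` class, its method names and the "field name to Python type" schema representation are hypothetical and do not reflect the actual Dataphos Schema Registry API or real Avro/Protobuf schemas.

```python
class SchemaRegistry:
    """Toy in-memory registry that keeps every schema version per subject."""

    def __init__(self):
        self._versions = {}  # subject -> list of schemas, oldest first

    def register(self, subject, schema):
        """Store a new schema version and return its 1-based version number."""
        versions = self._versions.setdefault(subject, [])
        versions.append(dict(schema))
        return len(versions)

    def get(self, subject, version=None):
        """Return a specific version, or the latest when version is None."""
        versions = self._versions[subject]
        return versions[-1] if version is None else versions[version - 1]

    def validate(self, subject, message, version=None):
        """Check that the message has exactly the schema's fields and types."""
        schema = self.get(subject, version)
        if set(message) != set(schema):
            return False
        return all(isinstance(message[f], t) for f, t in schema.items())


registry = SchemaRegistry()
v1 = registry.register("orders", {"order_id": int, "amount": float})
v2 = registry.register(
    "orders", {"order_id": int, "amount": float, "currency": str}
)

old_message = {"order_id": 1, "amount": 2.5}
new_message = {"order_id": 1, "amount": 2.5, "currency": "EUR"}
```

Since older versions are never overwritten, a message that no longer matches the latest schema can still be validated against the version it was produced with.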

Persistor

Data Lake Builder

Permanently stores all data in the original format. Makes data available for subsequent processing and eliminates the risk of data loss. Acts as a fail-safe for the ingestion process.

Versioning with full structure history of ingested data. Automated data lake build (versioned).

Built-in indexing engine for tracing a message's location across the data lake.

Resubmits and replays permanently stored messages.
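
A minimal sketch of the store, index and replay ideas listed above, assuming an append-only log held in memory. The `MessageStore` class and its methods are hypothetical names chosen for illustration; the real Persistor writes to cloud storage and its interfaces are not described in this document.

```python
class MessageStore:
    """Toy append-only store with a lookup index and replay."""

    def __init__(self):
        self._log = []    # append-only list of (message_id, payload)
        self._index = {}  # message_id -> offset in the log

    def append(self, message_id, payload):
        """Store the payload unchanged and record where it landed."""
        offset = len(self._log)
        self._log.append((message_id, payload))
        self._index[message_id] = offset
        return offset

    def locate(self, message_id):
        """Return the offset at which a message was stored."""
        return self._index[message_id]

    def replay(self, from_offset=0):
        """Yield stored payloads in their original order."""
        for _, payload in self._log[from_offset:]:
            yield payload


store = MessageStore()
store.append("m1", b"first")
store.append("m2", b"second")
store.append("m3", b"third")

# Replay everything from the position of message "m2" onwards.
replayed = list(store.replay(store.locate("m2")))
```

Keeping payloads untouched and indexing only their position is what allows a consumer to resubmit from any known message without going back to the source system.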

Cached API

Serves data to consumers via a REST API endpoint.

Uses an embedded in-memory cache to serve the most recent data to consumers. It queries the underlying database only when the requested dataset cannot be served from the cache.
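
The read path described above resembles the common cache-aside pattern. The sketch below is a simplified assumption of how such a lookup could work; `CachedReader`, `fake_db` and the key format are hypothetical, and eviction and freshness handling are omitted.

```python
class CachedReader:
    """Serve reads from memory and fall back to the database on a miss."""

    def __init__(self, load_from_db):
        self._load_from_db = load_from_db  # callable: key -> value
        self._cache = {}
        self.db_reads = 0  # counts how often the database was queried

    def get(self, key):
        if key in self._cache:
            return self._cache[key]  # cache hit: no database access
        self.db_reads += 1
        value = self._load_from_db(key)  # cache miss: query the database
        self._cache[key] = value
        return value


# A plain dict stands in for the underlying database.
fake_db = {"customer:7": {"name": "Example Customer"}}
reader = CachedReader(fake_db.__getitem__)

first = reader.get("customer:7")   # miss, loaded from fake_db
second = reader.get("customer:7")  # hit, served from memory
```

Only the first request reaches the database; repeated requests for the same dataset are answered from memory.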

Data Mart Loader

Creates an analytic data model in an automated fashion.

Supports data mart builder patterns for facts and for different types of slowly changing dimensions (Type 1, 2 and 7).
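
To make one of the dimension patterns concrete: in common dimensional-modeling usage, a Type 2 dimension preserves history by closing the current row and adding a new one when an attribute changes. The sketch below shows that general technique only; `apply_scd2` and the row layout are hypothetical and not taken from the Dataphos loader.

```python
from datetime import date


def apply_scd2(dimension, key, attributes, effective):
    """Apply a Type 2 change: close the current row and append a new one."""
    current = next(
        (r for r in dimension if r["key"] == key and r["valid_to"] is None),
        None,
    )
    if current is not None:
        if current["attributes"] == attributes:
            return dimension  # nothing changed, history stays as is
        current["valid_to"] = effective  # close the previous version
    dimension.append(
        {
            "key": key,
            "attributes": dict(attributes),
            "valid_from": effective,
            "valid_to": None,  # None marks the current row
        }
    )
    return dimension


dim = []
apply_scd2(dim, 7, {"city": "Zagreb"}, date(2023, 1, 1))
apply_scd2(dim, 7, {"city": "Split"}, date(2024, 6, 1))
apply_scd2(dim, 7, {"city": "Split"}, date(2024, 7, 1))  # no change
```

A Type 1 dimension would instead overwrite the attribute in place and keep no history.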

Management Console

Stand-alone management console used for configuring and deploying the platform via a UI.

Monitors performance. Product health dashboard with detailed product metrics and logs.

Architectural Paradigms and Technology

A unique low-code, true real-time data platform, written in the Go programming language.

Follows data mesh and event mesh architectural paradigms, including domain-driven data design, data as a product and federated governance concepts. Fully compatible with decentralized data platform architecture.

Event-driven architecture and streaming data processing, avoiding complex orchestration.

Enables monitoring and observability of health and performance of deployed data pipelines and data flows.

Promotes and implements best data ingestion and data management patterns.

Built as a set of ready-to-use components designed as microservices with connectors and plugins – decoupling functionality whilst providing seamless data flow.

Data from the source is available on the platform immediately, in real time, as consumable business objects transformed at source.

Legacy source systems are no longer an obstacle: new target architecture is fully decoupled from the source systems.